Seekr Grows AI Business with Big Cost Savings on Intel® Tiber™ Developer Cloud

April 10, 2024 | Intel® Tiber® Developer Cloud

Trustworthy AI for content evaluation and generation at reduced costs

Named one of the most innovative companies of 2024 by Fast Company, Seekr is using the Intel® Tiber™ Developer Cloud1 to build, train, and deploy advanced LLMs on cost-effective clusters running on the latest Intel hardware and software, including Intel® Gaudi® 2 AI accelerators. This strategic collaboration to accelerate AI helps Seekr meet the enormous demand for compute capacity while reducing its cloud costs and increasing workload performance.

Named one of the most innovative companies of 2024 by Fast Company, Seekr is using the Intel® Tiber™ Developer Cloud1 to build, train, and deploy advanced LLMs on cost-effective clusters running on the latest Intel hardware and software, including Intel® Gaudi® 2 AI accelerators. This strategic collaboration to accelerate AI helps Seekr meet the enormous demand for compute capacity while reducing its cloud costs and increasing workload performance.

Solution overview at a glance

Two of Seekr’s popular products, Flow and Align, help customers leverage AI to deploy and optimize their content and advertising strategies and to train, build, and manage the entire LLM pipeline using scalable and composable workflows.

This takes immense compute capacity which, historically, would require a significant infrastructure investment and considerable cloud costs.

By moving their production workloads from on-premise to Intel Tiber Developer Cloud, Seekr is now able to employ the power and capacity of Intel hardware and software technologies—including thousands of Intel Gaudi 2 cards—to build its LLMs, and do so at a fraction of the price and with exceptionally high performance.

Read the case study (includes benchmarks)

About Seekr

Seekr builds large language models (LLMs) that identify, score, and generate reliable content at scale; the company’s goal is to make the Internet safer and more valuable to use while solving their customers’ need for brand trust. Its customers include Moderna, SimpliSafe, Babbel, Constant Contact, and Indeed.

1 Formerly “Intel® Developer Cloud”; now part of the Intel® Tiber™ portfolio of enterprise business solutions.

Intel Vision 2024 Unveils Depth & Breadth of Open, Secure, Enterprise AI

April 9, 2024

At Intel Vision 2024, Intel CEO Pat Gelsinger introduced new strategies, next-gen products and portfolios, customers, and collaborations spanning the AI continuum.

Topping the list is Intel® Tiber™, a rich portfolio of complementary business solutions to streamline deployment of enterprise software and services across AI, cloud, edge, and trust and security; and the Intel® Gaudi® 3 accelerator, bringing more performance, openness, and choice to enterprise GenAI.

More than 20 customers showcased their leading AI solutions running on Intel® architecture, with LLM/LVM platform providers Landing.ai, Roboflow, and Seekr demonstrating how they use Intel Gaudi 2 accelerators on the Intel® Tiber™ Developer Cloud to develop, fine-tune, and deploy their production-level solutions.

Specific to collaborations, Intel announced them with Google Cloud, Thales, and Cohesivity, each of whom is leveraging Intel’s confidential computing capabilities—including Intel® Trust Domain Extensions (Intel® TDX), Intel® Software Guard Extensions (Intel® SGX), and Intel® Tiber™ Trust Services1 attestation service—in their cloud instances.

A lot more was revealed, including formation of the Open Platform for Enterprise AI and Intel’s expanded AI roadmap inclusive of 6th Gen Intel® Xeon® processors with E- and P-cores and silicon for client, edge, and connectivity.

“We’re seeing incredible customer momentum and demonstrating how Intel’s open, scalable systems, powered by Intel Gaudi, Xeon, Core Ultra processors, Ethernet-enabled networking, and open software, unleash AI today and tomorrow, bringing AI everywhere for enterprises.”

Highlights

Intel Tiber portfolio of business solutions simplifies the deployment of enterprise software and services, including for AI, making it easier for customers to find complementary solutions that fit their needs, accelerate innovation, and unlock greater value without compromising on security, compliance, or performance. Full rollout is planned in the 3rd quarter of 2024. Explore Intel Tiber now.

Intel Tiber portfolio of business solutions simplifies the deployment of enterprise software and services, including for AI, making it easier for customers to find complementary solutions that fit their needs, accelerate innovation, and unlock greater value without compromising on security, compliance, or performance. Full rollout is planned in the 3rd quarter of 2024. Explore Intel Tiber now.

Intel Gaudi 3 AI accelerator promises 4x more compute and 1.5x increase in memory bandwidth over Gaudi 2 and is projected to outperform NVIDIA H100 by an average of 50% on inference and 60% on power efficiency for LLaMa 7B and 70B and Falcon 180B LLMs. It will be available the 2nd quarter of 2024, including in the Intel Developer Cloud.

Intel Tiber Developer Cloud’s latest release includes new hardware and services that boost compute capacity, including bare metal as a service (BMaaS) options that host large-scale clusters of Gaudi 2 accelerators and Intel® Max Series GPUs, VMs running on Gaudi 2, storage as a service (StaaS) including file storage, and Intel® Kubernetes Service for cloud-native AI workloads.

Find out how Seekr used Intel Developer Cloud to deploy a trustworthy LLM for content generation and evaluation at scale.

Confidential computing collaborations with Thales and Cohesity increase trust and security and decrease risk for enterprise customers.

- Thales, a leading global tech and security provider, announced a data security solution comprised of its own CipherTrust Data Security Platform on Google Cloud Platform for end-to-end data protection and Intel Tiber Trust Services for confidential computing and trusted cloud-independent attestation. This will give enterprises additional controls to protect data at rest, in transit, and in use.

- Cohesity, a leader in AI-powered data security and management, announced the addition of confidential computing capabilities to Cohesity Data Cloud. The solution leverages its Fort Knox cyber vault service for data-in-use encryption, in tandem with Intel SGX and Intel Tiber Trust Services to reduce the risk posed by bad actors accessing data while it’s being processed in main memory. This is critical for regulated industries such as financial services, healthcare, and government.

Explore more

- Intel’s Enterprise Software Portfolio

- Intel Tiber Developer Cloud

- Intel® Confidential Computing Solutions

- Intel TDX

- Intel SGX

1 Formerly Intel® Trust Authority

Just Released: Intel® Software Development Tools 2024.1

March 28, 2024 | Intel® Software Development Tools

Accelerate code with confidence on the world’s first SYCL 2020-conformant toolchain

The 2024.1 Intel® Software Development Tools are now available and include a major milestone for accelerated computing: Intel® oneAPI DPC++/C++ Compiler has become the first compiler to adopt the full SYCL 2020 specification.

Why is this important?

Having a SYCL 2020-conformant compiler means developers can have confidence that their code is future-proof—it’s portable and reliably performant across the diversity of existing and future-emergent architectures and hardware targets, including GPUs.

“SYCL 2020 enables productive heterogeneous computing today, providing the necessary controls to write high-performance parallel software for the complex reality of today’s software and hardware. Intel’s commitment to supporting open standards is again showcased as they become a SYCL 2020 Khronos Adopter.”

Key Benefits

- Code with Confidence & Build Faster – Optimize parallelization for higher performance and productivity in modern C++ code via the Intel oneAPI DPC++/C++ Compiler, now with full SYCL 2020 conformance; explore new multiarchitecture features across AI, HPC, and distributed computing; and access relevant AI Tools faster and more easily with an expanded set of web-based selector options.

- Accelerate AI Workloads & Lower Compute Costs – Achieve performance improvements on new Intel CPUs and GPUs, including up to 14x with oneDNN on 5th Gen Intel® Xeon® Scalable processors1; 10x to 100x out-of-the-box acceleration of popular deep learning frameworks and libraries such as PyTorch* and TensorFlow*2; and faster gradient boosting inference across XGBoost, LightGBM, and CatBoost. Perform parallel computations at reduced cost with Intel® Extension for Scikit-learn* algorithms.

- Increase Innovation & Expand Deployment – Tune once and deploy universally with more efficient code offload using SYCL Graph, now available on multiple SYCL backends in the Intel oneAPI DPC++/C++ Compiler; ease CUDA-to-SYCL migration of more CUDA APIs in the Intel® DPC++ Compatibility Tool; and explore time savings in a CodePin Tech Preview (new SYCLomatic feature) to auto-capture test vectors and start validation immediately after migration. Codeplay adds new support and capabilities to its oneAPI plugins for NVIDIA and AMD GPUs.

The Nuts & Bolts

For those of you interested in diving into the component-level deets, here’s the collection.

Compilers

- Intel oneAPI DPC++/C++ Compiler is the first compiler to achieve SYCL 2020 conformance, giving developers confidence that their SYCL code is portable and reliably performs on the diversity of current and emergent GPUs. Enhanced SYCL Graph allows for seamless integration of multi-threaded work and thread-safe functions with applications and is now available on multiple SYCL backends, enabling tune-once-deploy-anywhere capability. Expanded conformance to OpenMP 5.0, 5.1, 5.2, and TR12 language standards enables increased performance.

- Intel® Fortran Compiler adds more Fortran 2023 language features including improved compatibility and interoperability between C and Fortran code, simplified trigonometric calculations, and predefined data types to improve code portability and ensure consistent behavior; makes OpenMP offload programming more productive; and increases compiler stability.

Performance Libraries

- Intel® oneAPI Math Kernel Library (oneMKL) introduces new optimizations and functionalities to reduce the data transfer between Intel GPUs and the host CPU, enables the ability to reproduce results of BLAS level 3 operations on Intel GPUs from run-to-run through CNR, and streamlines CUDA-to-SYCL porting via the addition of CUDA-equivalent functions.

- Intel® oneAPI Data Analytics Library (oneDAL) enables gradient boosting inference acceleration across XGBoost*, LightGBM*, and CatBoost* without sacrificing accuracy; improves clustering by adding spare K-Means support to automatically identify a subset of the features used in clustering observations.

- Intel® oneAPI Deep Neural Network Library (oneDNN) adds support for GPT-Q to improve LLM performance, fp8 data type in primitives and Graph API, fp16 and bf16 scale and shift arguments for layer normalization, and opt-in deterministic mode to guarantee results are bitwise identical between runs in a fixed environment.

- Intel® oneAPI DPC++ Library (oneDPL) adds a specialized sort algorithm to improve app performance on Intel GPUs, adds transform_if variant with mask input for stencil computation needs, and extends C++ STL style programming with histogram algorithms to accelerate AI and scientific computing.

- Intel® oneAPI Collective Communications Library (oneCCL) optimizes all key communication patterns to speed up message passing in a memory-efficient manner and improve inference performance.

- Intel® Integrated Performance Primitives expands features and support for quantum computing, cybersecurity, and data compression, including XMSS post-quantum hash-based cryptographic algorithm (tech preview), FIPS 140-3 compliance, and updated LZ4 lossless data compression algorithm for faster data transfer and reduced storage requirements in large data-intensive applications.

- Intel® MPI Library adds new features to improve application performance and programming productivity, including GPU RMA for more efficient access to remote memory and MPI 4.0 support for Persistent Collectives and Large Counts.

AI & ML Tools & Frameworks

- Intel® Distribution for Python* expands the ability to develop more future-proof code, including Data Parallel Control (dpctl) library’s 100% conformance to the Python Array API standard and support for NVIDIA devices; Data Parallel Extension for NumPy* enhancements for linear algebra, data manipulation, statistics, data types, plus extended support for keyword arguments; and Data Parallel Extension for Numba* improvements to kernel launch times.

- Intel Extension for Scikit-learn reduces the computational costs on GPUs by making computations only on changed dataset pieces with Incremental Covariance and performing parallel GPU computations using SPMD interfaces.

- Intel® Distribution of Modin* delivers significant enhancements in security and performance, including a robust security solution that ensures proactive identification and remediation of data asset vulnerabilities, and performance fixes to optimize asynchronous execution. (Note: in the 2024.2 release, developers will be able to access Modin through upstream channels.)

Analyzers & Debuggers

- Intel® VTune™ Profiler expands the ability to identify and understand the reasons of implicit USM data movements between Host and GPU causing performance inefficiencies in SYCL applications; adds support for .NET 8, Ubuntu* 23.10, and FreeBSD* 14.0.

- Intel® Distribution for GDB* rebases to GDB 14, staying current and aligned with the latest application debug enhancements; enables the ability to monitor and troubleshoot memory access issues in real time; and adds large General Purpose Register File debug mode support for more comprehensive debugging and optimization of GPU-accelerated applications.

Rendering & Ray Tracing

- Intel® Embree adds enhanced error reporting for SYCL platform and driver to smooth the transition of cross-architecture code; improves stability, security, and performance capabilities.

- Intel® Open Image Denoise fully supports multi-vendor denoising across all platforms: x86 and ARM CPUs (including ARM support on Windows*, Linux*, and macOS*) and Intel, NVIDIA, AMD, and Apple GPUs.

More Resources

- Intel Compiler First to Achieve SYCL 2020 Conformance

- A Dev's Take on the 2024.1 Release

- Download Codeplay oneAPI plugins: NVIDIA GPUs | AMD GPUs

Footnotes

1 Performance Index: 5th Gen Intel Xeon Scalable Processors

2 Software AI accelerators: AI performance boost for free

Gaudi and Xeon Advance Inference Performance for Generative AI

March 27, 2024 | Intel® Developer Cloud, MLCommons

Newest MLPerf results for Intel® Gaudi 2 accelerators and 5th Gen Intel® Xeon® processors demonstrate Intel is raising the bar for GenAI performance.

Today, MLCommons published results of the industry standard MLPerf v4.0 benchmark for inference, inclusive of Intel’s submissions for its Gaudi 2 accelerators and 5th Gen intel Xeon Scalable processors with Intel® AMX.

As the only benchmarked alternative to NVIDIA H100* for large language and multi-model models, Gaudi 2 offers compelling price/performance, important when gauging the total cost of ownership. On the CPU side, Intel remains the only server CPU vendor to submit MLPerf results (and Xeon is the host CPU for many accelerator submissions).

Get the details and results here.

Try them in the Intel® Developer Cloud

You can evaluate 5th Gen Xeon and Gaudi 2 in the Intel Developer Cloud, including running small- and large-scale training (LLM or generative AI) and inference production workloads at scale and managing AI compute resources. Explore the subscription options and sign up for an account here.

Intel Open Sources Continuous Profiler Solution, Automating Always-On CPU Performance Analysis

March 11, 2024 | Intel® Granulate™ Cloud Optimization Software

A continuous, autonomous way to find runtime efficiencies and simplify code optimization.

Today, Intel has released to open source the Continuous Profiler optimization agent, serving as another example of the company’s open ecosystem approach to catalyze innovation and boost productivity for developers.

As its name indicates, Continuous Profiler keeps perpetual oversight on CPU utilization, thereby offering developers, performance engineers, and DevOps an always-on and autonomous way to identify application and workload runtime inefficiencies.

How it works

It combines multiple sampling profilers into a single flame graph, which is a unified visualization of what the CPU is spending time on and, in particular, where high latency or errors are happening in the code.

Why you want it

Continuous Profiler comes with numerous unique features to help teams find and fix performance errors and smooth deployment, is compatible with Intel Granulate’s continuous optimization services, can be deployed cluster-wide in minutes, and supports a range of programming languages without requiring code changes.

Additionally, it’s SOC2-certified and held to Intel's high security standards, ensuring reliability and trust in its deployment, and is used by global companies including Snap Inc. (portfolio includes Snapchat and Bitmoji), ironSource (app business platform), and ShareChat (social networking platform).

Learn more

Intel® Software at KubeCon Europe 2024

February 29, 2024 | Intel® Software @ KubeCon Europe 2024

Intel’s Enterprise Software Portfolio enables K8s scalability for enterprise applications

Intel’s Enterprise Software Portfolio enables K8s scalability for enterprise applications

Meet Intel enterprise software experts at KubeCon Europe 2024 (March 19-22) and discover how you can streamline and scale deployments, reduce Kubernetes costs, and achieve end-to-end security for data.

Plus, attend the session Above the Clouds with American Airlines to learn how one of the world’s top airlines achieved 23% cost reductions for their largest cloud-based workloads using Intel® Granulate™ software.

Why Intel Enterprise Software for K8s?

Because its Enterprise Software portfolio is purpose-built to accelerate cloud-native applications and solutions more efficiently, at scale, paving a faster way to AI. Meaning you can run production-level Kubernetes workloads the right way—easier to manage, secure, and efficiently scalable.

In a nutshell, you get:

- Optimized performance with reduced costs

- Better models with streamlined workflow

- Confidential computing that’s safe, secure, and compliant

Stop by Booth #J17 to have a conversation about the depth and breadth of Intel’s enterprise software solutions.

Explore Intel @ KubeCon EU 2024 →

More resources

Prediction Guard Offers Customers LLM Reliability and Security via Intel® Developer Cloud

February 22, 2024 | Intel® Developer Cloud

AI startup Prediction Guard is now hosting its LLM API in the secure, private environment of Intel Developer Cloud, taking advantage of Intel’s resilient computing resources to deliver peak performance and consistency in cloud operations for its customers’ GenAI applications.

AI startup Prediction Guard is now hosting its LLM API in the secure, private environment of Intel Developer Cloud, taking advantage of Intel’s resilient computing resources to deliver peak performance and consistency in cloud operations for its customers’ GenAI applications.

Prediction Guard’s AI platform enables enterprises to harness the full potential of large language models while mitigating security and trust issues such as hallucinations, harmful outputs, and prompt injections.

By moving to Intel Developer Cloud, the company can offer its customers significant and reliable computing power as well as the latest AI hardware acceleration, libraries, and frameworks: it’s currently leveraging Intel® Gaudi® 2 AI accelerators, the Intel/Hugging Face collaborative Optimum Habana library, and Intel extensions for PyTorch and Transformers.

“For certain models, following our move to Intel Gaudi 2, we have seen our costs decrease while throughput has increased by 2x.”

Learn more

Prediction Guard is part of the Intel® Liftoff for Startups, a free program for early-stage AI and machine learning startups that helps them innovate and scale across their entrepreneurial journey.

New Survey Unpacks the State of Cloud Optimization for 2024

February 20, 2024 | Intel® Granulate™ software

A newly released global survey conducted by the Intel® Granulate™ cloud-optimization team assessed key trends and strategies in cloud computing among DevOps, Data Engineering, and IT leaders at 413 organizations spanning multiple industries.

Among the findings, the #1 and #2 priorities for the majority of organizations (over 2/3) were cloud cost reduction and application performance improvement. And yet, 54% do not have a team dedicated to cloud-based workload optimization.

Get the report today to learn more trends, including:

- Cloud optimization priorities and objectives

- Assessment of current optimization efforts

- The most costly and difficult-to-optimize cloud-based workloads

- Optimization tools used in the tech stack

- Innovations for 2024

Download the report →

Request a demo →

American Airlines Achieves 23% Cost Reductions for Cloud Workloads using Intel® Granulate™

January 29, 2024 | Intel® Granulate™ Cloud Optimization Software

American Airlines (AA) partnered with Intel Granulate to optimize its most challenging workloads, which were stored in a Databricks data lake, and also mitigate the challenges of an untenable data-management price tag.

After deploying the Intel Granulate solution, which delivers autonomous and continuous optimization with no code changes or development efforts required, AA was able to free up engineering teams to process and analyze data at optimal pace and scale, run job clusters with 37% fewer resources, and reduce costs across all clusters by 23%.

Read the case study →

Request a demo →

Intel, the Intel logo, and Granulate are trademarks of Intel Corporation or its subsidiaries

Now Available: the First Open Source Release of Intel® SHMEM

January 10, 2024 | Intel® SHMEM [GitHub]

V1.0.0 of this open source library extends the OpenSHMEM programming model to support Intel® Data Center GPUs using the SYCL cross-platform C++ programming environment.

OpenSHMEM (SHared MEMory) is a parallel programming library interface standard that enables Single Program Multiple Data (SPMD) programming of distributed memory systems. This allows users to write a single program that executes many copies of the program across a supercomputer or cluster of computers.

Intel® SHMEM is a C++ library that enables applications to use OpenSHMEM communication APIs with device kernels implemented in SYCL. It implements a Partitioned Global Address Space (PGAS) programming model and includes a subset of host-initiated operations in the current OpenSHMEM standard and new device-initiated operations callable directly from GPU kernels.

Feature Highlights

- Supports the Intel® Data Center GPU Max Series

- Device and host API support for OpenSHMEM 1.5-compliant point-to-point RMA, Atomic Memory Operations, Signaling, Memory Ordering, and Synchronization Operations

- Device and host API support for OpenSHMEM collective operations

- Device API support for SYCL work-group and sub-group level extensions of Remote Memory Access, Signaling, Collective, Memory Ordering, and Synchronization Operations

- Support of C++ template function routines replacing the C11 Generic selection routines from the OpenSHMEM spec

- GPU RDMA support when configured with Sandia OpenSHMEM with suitable Libfabric providers for high-performance networking services

- Choice of device memory or USM for the SHMEM Symmetric Heap

Read the blog for all the details

(written by 3 Sr. Software Engineers @ Intel)

More resources

Updated: Codeplay oneAPI Plugins for NVIDIA GPUs

December 23, 2023

The recent release of 2024.0.1 Intel® Software Development Tools, comprised of oneAPI and AI tools, include noteworthy additions and improvements to Codeplay’s oneAPI plugins for NVIDIA GPUs.

The highlights:

- Bindless Images – a SYCL extension that represents a significant overhaul of the current SYCL 2020 images API.

- Users gain more flexibility over their memory and images.

- Enables hardware sampling and fetching capabilities for various image types like mipmaps and new ways to copy images like sub-region copies.

- Offers interoperability features with external graphics APIs like Vulkan and image-manipulation flexibility for integration with Blender.

- SYCL Support

- Non-uniform groups – allows developers to perform synchronization operations across some subset of the work items in a workgroup or subgroup.

- Peer-to-peer access – in a multi-GPU system, this may result in lower latency and/or better bandwidth in memory accesses across devices.

- Experimental version of SYCL-Graph – lets developers define ahead of time the operations they want to submit to the GPU, improving performance and saving time.

Additionally, the AMD plugin continues on the path of beta and toward production release in 2024.

Get the plugins

More resources

Intel’s Newest AI Acceleration CPUs + 2024.0 Software Development Tools = Innovation at Scale

December 14, 2023 | AI Everywhere keynote replay, Intel® Software Developer Tools 2024.0

Powering and optimizing AI workloads across data center, cloud, and edge.

Today marks the official launch of Intel’s latest AI acceleration platforms: 5th Gen Intel® Xeon® Scalable processors (codenamed Emerald Rapids) and Intel® Core™ Ultra processors (codenamed Meteor Lake). Announced by Pat Gelsinger at the “AI Everywhere” event this morning from Nasdaq in NYC, these systems provide developers and data scientists flexibility and choice for accelerating AI innovation at scale.

And the newly released Intel® Software Development Tools 2024.0 are ready to support applications and solutions targeting these platforms.

Here are some of the ways:

Targeting 5th Gen Intel® Xeon® Scalable processors

The 5th Gen is an evolution of the 4th Gen Intel Xeon platform and delivers impressive performance per watt plus outsized performance and TCO in AI, database, networking, and HPC.

Intel’s 2024.0 release of optimized tools, libraries, and AI frameworks powered by oneAPI give developers the keys to maximizing application performance by activating the advanced capabilities of Xeon—both 4th and 5th Gen, as well as Intel® Xeon® CPU Max Series:

- Intel® Advanced Matrix Extensions (Intel® AMX) built-in AI accelerator

- Intel® QuickAssist Technology (Intel® QAT) integrated workload accelerator

- Intel® Data Streaming Accelerator (Intel® DSA) for high-bandwidth, low-latency data movement

- Intel® In-Memory Analytics Accelerator (Intel® IAA) for very high throughput compression and decompression + primitive analytic functions

Software Tools for 4th & 5th Gen Intel Xeon & Max Series Processors

Targeting Intel Core Ultra processors

This combined CPU, GPU, and NPU (neural processing unit) platform is built on the new Intel 4 process and delivers an optimal balance of power efficiency and performance, immersive experiences, and dedicated AI acceleration for gaming, content creation, and productivity on the go.

Intel’s 2024.0 release helps ISVs, developers, and professional content creators optimize gaming, content creation, AI, and media applications by putting into action the new platform’s cutting-edge features, including:

- Intel® AVX-512

- Intel® AI Boost and inferencing acceleration

- AV1 encode/decode

- Ray-traced hardware acceleration

Software Tools for Intel Core Ultra Processor

Learn more

- Watch the keynote replay

- Read the press release

- Access a new quick start guide: Accelerate AI with Intel® AMX using PyTorch and TensorFlow optimizations, and OpenVINO™ toolkit

Now Available: 2024 Release of Intel Development Tools

November 20, 2023 | Intel® Software Development Tools

Expanding Multiarchitecture Performance, Porting & Productivity for AI & HPC

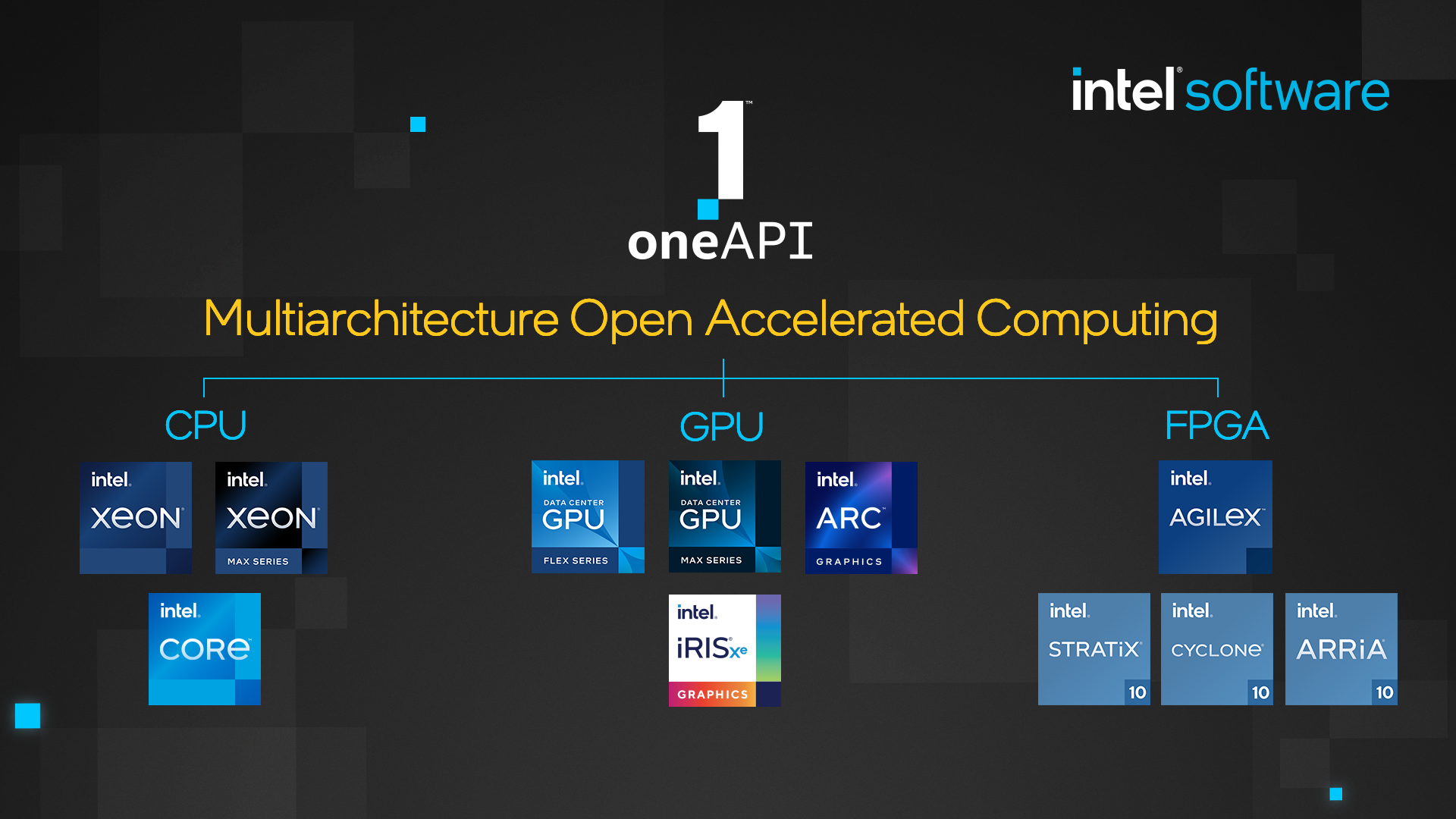

The 2024 Intel® Software Development Tools are available, bringing to developers even more multiarchitecture capabilities to accelerate and optimize AI, HPC, and rendering workloads across Intel CPUs, GPUs, and AI accelerators. Powered by oneAPI (now driven by the Unified Acceleration Foundation), the tools are based on open standards and broad coverage for C++, OpenMP, SYCL, Fortran, MPI and Python.

5 Key Benefits

(There are many, many more. See all the deets here. Read the blog here.)

- Future-Ready Programming – Accelerates performance on the latest Intel GPUs including added support for Python, Modin, XGBoost, and rendering; supports upcoming 5th Gen Intel® Xeon® Scalable and Intel® Core™ Ultra CPUs; and expands AI and HPC capabilities via broadened standards coverage across multiple tools.

- AI Acceleration – Speeds up AI and machine learning on Intel CPUs and GPUs with native support through Intel-optimized PyTorch and TensorFlow frameworks and improvements to data-parallel extensions in Python.

- Vector Math Optimizations – oneMKL integrates RNG offload on target devices for HPC simulations, statistical sampling, and more on x86 CPUs and Intel GPUs, and supports FP16 datatype on Intel GPUs.

- Expanded CUDA-to-SYCL Migration – Intel® DPC++ Compatibility Tool (based on open source SYCLomatic) adds CUDA library APIs and 20 popular applications in AI, deep learning, cryptography, scientific simulation, and imaging.

- Advanced Preview Features – These evaluation previews include C++ parallel STL for easy GPU offload, dynamic device selection to optimize compute node resource usage, SYCL graph for reduced GPU offload overhead thread composability to prevent thread oversubscription in OpenMP, and profile offloaded code to NPUs.

Discover the Power of Intel CPUs & GPUs + oneAPI

- The ATLAS Experiment achieves performance gains by implementing heterogeneous particle reconstruction on Intel GPUs optimized by Intel software tools, including benchmarking of SYCL and CUDA code on Intel and NVIDIA GPUs.

- STAC-A2 Benchmark implementation for oneAPI sets records on Intel GPUs versus NVIDIA.

- VMware and Intel deliver jointly validated AI stack to unlock private AI everywhere for model development and deployment.

Super News! HPCwire just announced its editors’ and readers choice awards for 2023—and Intel oneAPI software development tools and libraries landed the top readers’ choice for the best HPC Programming Tool or Technology. With developers and HPC experts driving new levels of HPC and AI innovation, this honor voted by them validates the importance of open, standards-based multiarchitecture programming. oneAPI has received either the editors’ or readers’ award each year since 2020. Thank you for this highly-esteemed accolade.

Accelerate & Scale AI Workloads in Intel® Developer Cloud

September 20, 2023 | Intel® Developer Cloud

Built for developers : access the latest Intel® CPUs, GPUs, and AI accelerators

As announced at Intel Innovation 2023, Intel® Developer Cloud is now publicly available. The platform offers developers, data scientists, researchers, and organizations a development environment with direct access to current and, in some cases, pre-release Intel hardware plus software services and tools, all in service to help them build, test, and optimize products and solutions for the newest tech features and bring them to market faster.

Both free and paid subscription tiers are available.

The current complement of hardware and software includes:

- Hardware

- 4th Gen Intel® Xeon® Scalable processors (single-node and multiarchitecture platforms and clusters)

- Intel® Xeon® CPU Max Series (for high bandwidth memory workloads)

- Intel® Data Center GPU Max Series (targeting the most demanding computing workloads)

- Habana® Gaudi®2 AI accelerator (for deep learning tasks)

- Software & Services

- Run small- and large-scale AI training, model optimization, and inference workloads such as Meta AI Llama 2, Databricks Dolly, and more

- Utilize small to large VMs, full systems, or clusters

- Access software tools including the Intel® oneAPI Base, HPC, and Rendering toolkits; Intel® Quantum SDK; AI tools and optimized frameworks such as Intel® OpenVINO™ toolkit, Intel-optimized TensorFlow and PyTorch, Intel® Neural Compressor, Intel® Distribution for Python, and several more

And more will be added all the time.

Intel, the Intel logo and Gaudi are trademarks of Intel Corporation or its subsidiaries.

Intel Innovation 2023 At a Glance

September 20, 2023 | Intel® Innovation

Intel’s premier 2-day developer event was attended by nearly 2,000 attendees who participated in a wealth of sessions—keynotes from CEO Pat Gelsinger, other Intel leaders, and industry luminaries; hands-on labs; tech-insights panels; training sessions; and more—focused on the latest breakthroughs in AI spanning hardware, software, services, and advanced technologies.

There were many highlights and announcements. Here are 6 of them:

- Welcome to the “Siliconomy”. Pat introduced the term in his opening—a new era of global expansion where computing is foundational to a bigger opportunity and better future for every person on the planet—and its role in a world where AI is delivering a generational shift in computing. Read his Siliconomy editorial [PDF]

- Intel® Developer Cloud general availability. Developers can accelerate and scale AI in this free and paid development environment with access to the latest Intel hardware and software to build, test, optimize, and deploy AI and HPC applications and workloads. Includes a depth and breadth of hardware and software tools & services such as 4th Gen Intel® Xeon® Scalable & Max Series processors, Intel® Data Center GPU Max Series processors, Habana® Gaudi®2 AI accelerators, oneAPI tools and Intel-optimized AI tools and frameworks, and SaaS options such as Hugging Face BLOOM, Meta AI Llama 2, Databricks Dolly, and many more. Explore Intel Developer Cloud.

- Intel joins the Unified Acceleration (UXL) Foundation. An evolution of the oneAPI open programming model, the Linux Foundation formed the UXL Foundation to establish cross-industry collaboration on an open-standard accelerator programming model that simplifies development of cross-platform applications. Read the blogs from Sanjiv Shah (GM Developer Software @ Intel) and Rod Burns (VP Ecosystem @ Codeplay)

- Intel® Certified Developer – MLOps Professional. This new certification program, taught by MLOps experts, uses self-paced modules, hands-on labs, and practicums to teach you how to incorporate compute awareness into the AI solution design process, maximizing performance across the AI pipeline. Explore the program.

- Intel® Trust Authority. This suite of trust and security services provides customers with assurance that their apps and data are protected on the platform of their choice, including multiple cloud, edge, and on-premises environments. Explore Intel Trust Authority | Start a 30-day free trial.

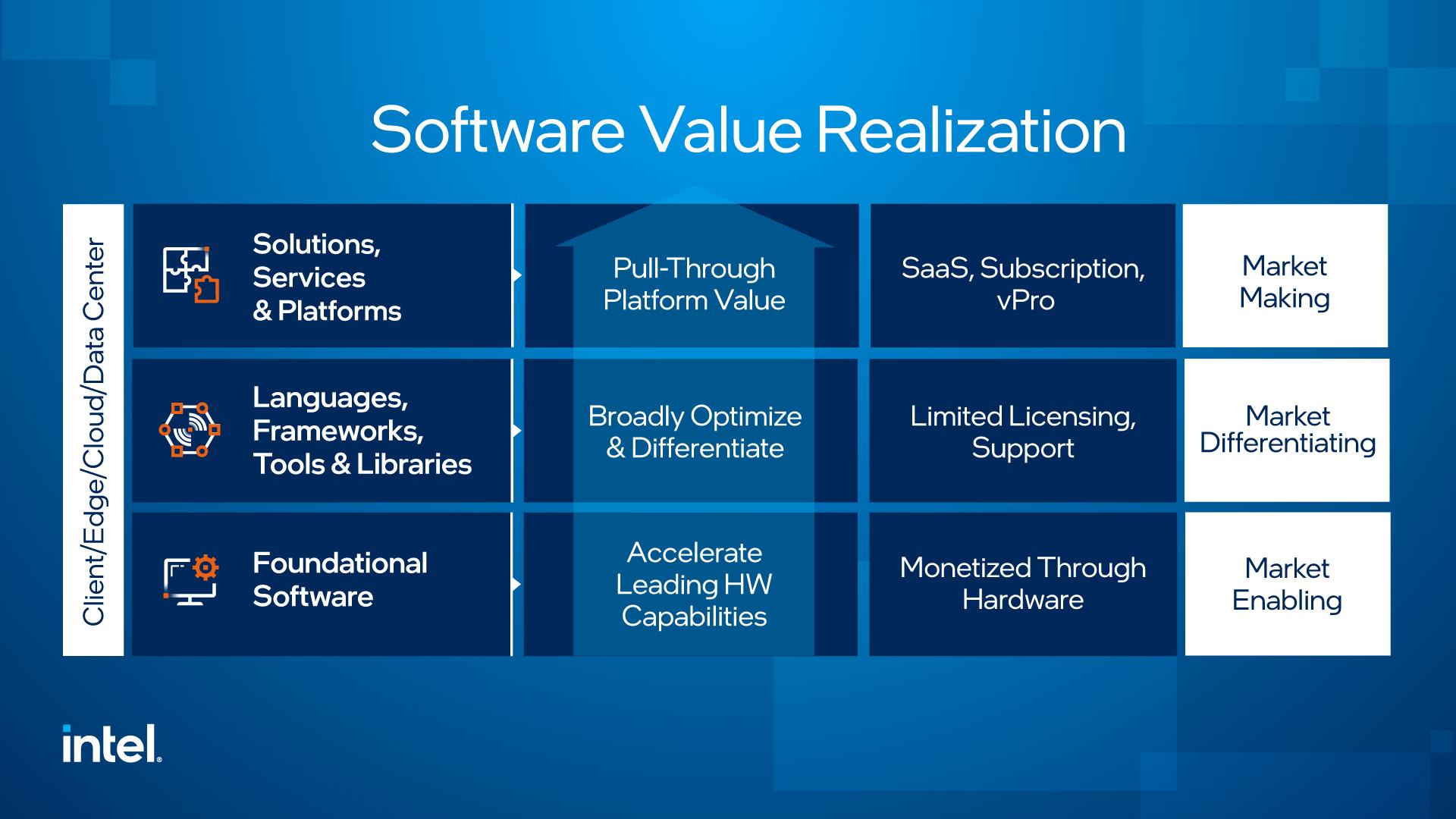

- New Enterprise Software & Services portfolio. The new collection is designed to solve some of the biggest enterprise challenges by delivering a scalable, sustainable tech stack with built-in, silicon-based security. Includes products that simplify security [Intel Trust Authority], deliver enterprise AI with more ROI [Intel Developer Cloud + Cnvrg.io], and improve application performance with real-time autonomous workload optimization [Intel® Granulate].

More to explore:

Intel, the Intel logo and Gaudi are trademarks of Intel Corporation or its subsidiaries.

Unified Acceleration Foundation Forms to Drive Open, Accelerated Compute & Cross-Platform Performance

September 19, 2023 | Unified Acceleration Foundation

Today, the Linux Foundation announced the formation of the Unified Acceleration (UXL) Foundation, a cross-industry group committed to delivering an open-standard, accelerator programming model that simplifies development of performant, cross-platform applications.

An evolution of the oneAPI initiative, the UXL Foundation marks the next critical step in driving innovation and implementing the oneAPI specification across the industry. It includes a distinguished list of participating organizations and partners, including Arm, Fujitsu, Google Cloud, Imagination Technologies, Intel and Qualcomm Technologies, Inc., and Samsung. These industry leaders have come together to promote open source collaboration and development of a cross-architecture, unified programming model.

“The Unified Acceleration Foundation exemplifies the power of collaboration and the open-source approach. By uniting leading technology companies and fostering an ecosystem of cross-platform development, we will unlock new possibilities in performance and productivity for data-centric solutions.”

More resources

- Our kid’s graduating from college!, Sanjiv Shah, GM of Developer Software Engineering, Intel

- Announcing the Unified Acceleration (UXL) Foundation, Rod Burns, VP Ecosystem @ Codeplay Software

Pre-set AI Tool Bundles Deliver Enhanced Productivity

August 21, 2023 | AI Tools Selector (beta)

Choose the tools you need with new, flexible AI tool installation service

Intel's AI Tools Selector (beta) is now available, delivering streamlined package installation of popular deep learning frameworks, tools, and libraries. Install them individually or in pre-set bundles for data analytics, classic machine learning, deep learning, and inference optimization.

The tools:

- Deep learning frameworks:

- Intel® Extension for TensorFlow

- Intel® Extension for PyTorch

- Tools & libraries:

- Intel® Optimization for XGBoost

- Intel® Optimization for Scikit-learn

- Intel® Distribution of Modin

- Intel® Neural Compressor

- SDKs & Command-line Interfaces (CLIs):

- cnvrg.io SDK v2 in Python

All are available via conda, pip, or Docker package managers.

Bookmark the AI Tools Selector (beta) →

Speed Up AI & Gain Productivity with Advances in Intel AI Tools

August 11, 2023 | Intel® AI Analytics Toolkit, oneDAL, oneDNN, oneCCL

Calling all AI practitioners, performance engineers, and framework builders ...

Speed up deep learning and machine learning on Intel® CPUs and GPUs with the just-released 2023.2 Intel® AI Analytics Toolkit and updated oneAPI libraries.

The latest advances in these tools help improve performance, enhance productivity, and increase cross-platform code portability for end-to-end data science and analytics pipelines.

The Highlights

Improved Performance

- Faster deep learning with PyTorch 2.0 compatibility and experimental support for Intel® Arc™ A-Series Graphics cards with Intel® Extension for PyTorch. If TF is more your jam, Intel® Extension for TensorFlow makes it easier to take full advantage of new CPU optimizations to streamline execution, memory allocation, and task scheduling.

- Faster, classic machine learning with Intel® Extension for Scikit-learn, now featuring CPU optimizations for extremely random trees and Intel® oneAPI Data Analytics Library (oneDAL) distributed algorithms. For GPUs, the Intel® Optimization for XGBoost now supports Intel® Data Center GPU Max Series.

- Accelerated data preprocessing with pandas 2.0 support in Intel® Distribution for Modin, which combines faster memory-efficient operations with the scaling benefits of parallel and distributed computing.

Enhanced Productivity

- New model compression automation in Intel® Neural Compressor delivers streamlined quantization, easier accuracy debugging, validation for popular new LLMs, and better framework compatibility with PyTorch, TensorFlow, and ONNX-Runtime.

- Improved prediction accuracy for training & inference with new missing values support when using daal4py Model Builders to convert gradient boosting models to use optimized algorithmic building blocks found in oneDAL.

Increased Portability

- Expanded hardware choice including support for ARM, NVIDIA, and AMD platforms as well as new performance optimizations in Intel CPUs and GPUs such as simpler debug and diagnostics and an experimental Graph compiler backend. All available using Intel® oneAPI Deep Neural Network Library (oneDNN).

- Enhanced scaling efficiency in the cross-platform Intel® oneAPI Collective Communication Library (oneCCL) features new support for Intel® Data Streaming Accelerator, found in 4th Gen Intel® Xeon® Scalable processors.

Learn More

Download the Intel AI Analytics Toolkit →

Explore the release notes for more details

Advancing AI Everywhere: Intel Joins the PyTorch Foundation

August 10, 2023 | PyTorch Optimizations from Intel

Intel has just joined the PyTorch Foundation as a Premier member and will take a seat on its Governing Board to help accelerate the development and democratization of PyTorch.

According to its website, the Foundation “is a neutral home for the deep learning community to collaborate on the open source PyTorch framework and ecosystem.” Its mission is “to drive adoption of AI and deep learning tooling by fostering and sustaining an ecosystem of open source, vendor-neutral projects with PyTorch.”

It’s a good fit. Intel has been contributing to the framework since 2018, an effort precipitated by the vision of democratizing access to AI through ubiquitous hardware and open software. As an example, the newest Intel PT optimizations and features are regularly released in the Intel® Extension for PyTorch before they’re upstreamed into stock PyTorch. This advanced access to pre-stock-version enhancements helps data scientists and software engineers maintain a competitive edge, developing AI applications that take advantage of the latest hardware technologies.

Download the Intel® Extension for PyTorch

Proven Performance Improvements with Intel/Accenture AI Reference Kits

July 24, 2023 | AI Reference Kits

These Pre-Configured Kits Simplify AI Development

Likely you’ve seen mention of them here—a total of 34 free, drop-in solutions for AI workloads spanning consumer products, energy and utilities, financial services, health and life sciences, manufacturing, retail, and telecommunications.

The new news is that multiple industries are seeing measurable benefits from leveraging the code and capabilities inherent in them.

Here’s a sampling:

- Using the AI reference kit designed to set up interactions with an enterprise conversational AI chatbot was found to inference in batch mode up to 45% faster with oneAPI optimizations.1

- The AI reference kit designed to automate visual quality control inspections for Life Sciences demonstrated training up to 20% faster and inferencing 55% faster for visual defect detection with oneAPI optimizations.2

To predict utility-asset health and deliver higher service reliability, there is an AI reference kit that provides up to a 25% increase in prediction accuracy.3

Now Available: 2023.2 Release of Intel® oneAPI Tools

July 20, 2023 | Intel® oneAPI Tools

Extending & strengthening software development for open, multiarchitecture computing.

The just-released 2023.2 Intel® oneAPI tools bring the freedom of multiarchitecture software development to Python, simplify migration from CUDA to open SYCL, and ramp performance on the latest GPU and CPU hardware.

Benefits of the 2023.2 Release

If you haven’t updated your tools to the oneAPI multiarchitecture versions—or if you haven’t tried them at all—here are 5 benefits of doing so with this release:

- Simplified Migration from CUDA to Performant SYCL – Developers now can experience streamlined CUDA-to-SYCL migration for popular applications such as AI, deep learning, cryptography, scientific simulation, and imaging; plus, the new release supports additional CUDA APIs, the latest version of CUDA, and FP64 for broader migration coverage.

- Faster & More Accurate AI Inferencing – The addition of NaN (Not a Number) values support during inference streamlines pre-processing and boosts prediction accuracy for models trained on incomplete data.

- Accelerated AI-based Image Enhancement on GPUs – Intel® Open Image Denoise ray-tracing library now supports GPUs from Intel and other vendors, providing hardware choice for fast, high-fidelity, AI-based image enhancements.

- Faster Python for AI & HPC – This release introduces the beta version Data Parallel Extensions for Python, extending numerical Python capabilities to GPUs for NumPy and cuPy functions, including Numba compiler support.

- Streamlined Method to Write Efficient Parallel Code – Intel® Fortran Compiler extends support for DO CONCURRENT Reductions, a powerful feature that allows the compiler to execute loops in parallel and significantly improve code performance while making it easier to write efficient and correct parallel code.

2023.2 Highlights at the Tool Level

Compilers & SYCL Support

- Intel® oneAPI DPC++/C++ Compiler sets the immediate command lists feature as its default, benefitting developers looking to offload computation to Intel® Data Center GPU Max Series.

- Intel® oneAPI DPC++ Library (oneDPL) improves performance of the C++ STD Library sort and scan algorithms when running on Intel® GPUs; this speeds up these commonly used algorithms in C++ applications.

- Intel® DPC++ Compatibility Tool (based on the open source SYCLomatic project) adds support for CUDA 12.1 and more function calls, streamlines migration of CUDA to SYCL across numerous domains (AI, cryptography, scientific simulation, imaging, and more), and adds FP64 awareness to migrated code to ensure portability across Intel GPUs with and without FP64 hardware support.

- Intel® Fortran Compiler adds support for DO CONCURRENT Reduction, a powerful feature that can significantly improve the performance of code that performs reductions while making it easier to write efficient parallel code.

AI Frameworks & Libraries

- Intel® Distribution of Python introduces Parallel Extensions for Python (beta) which extends the CPU programming model to GPU and increases performance by enabling CPU and GPU for NumPy and CuPy.

- Intel® oneAPI Deep Neural Network Library (oneDNN) enables faster training & inference for AI workloads; simpler debug & diagnostics; support for graph neural network (GNN) processing; and improved performance on a multitude of processors such as 4th Gen Intel® Xeon® Scalable processors and GPUs from Intel and other vendors.

- Intel® oneAPI Data Analytics Library (oneDAL) Model Builder feature adds missing values for NaN support during inference, streamlining pre-processing and boosting prediction accuracy for models trained on incomplete data.

Performance Libraries

- Intel® oneAPI Math Kernel Library (oneMKL) drastically reduces kernel launch time on Intel Data Center GPU Max and Flex Series processors; introduces LINPACK benchmark for GPU.

- Intel® MPI Library boosts message-passing performance for 4th Gen Intel Xeon Scalable and Max CPUs, and adds important optimizations for Intel GPUs.

- Intel® oneAPI Threading Building Blocks (oneTBB) algorithms and Flow Graph nodes now can accept new types of user-provided callables, resulting in a more powerful and flexible programming environment.

- Intel® Cryptography Primitives Library multi-buffer library now supports XTS mode of the SM4 algorithm, benefitting developers by providing efficient and secure ways of encrypting data stored in sectors, such as storage devices.

Analysis & Debug

- Intel® VTune™ Profiler delivers insights into GPU-offload tasks and execution, improves application profiling support for BLAS level-3 routines on Intel GPUs, and identifies Intel Data Center GPU Max Series devices in the platform diagram.

- Intel® Distribution for GDB rebases to GDB 13, staying current and aligned with the latest enhancements supporting effective application debug and debug for Shared Local Memory (SLM).

Learn More

- Explore Intel oneAPI & AI tools →

- New to SYCL? Get started here →

- Bookmark the oneAPI Training Portal – Learn the way you want to with learning paths, tools, on-demand training, and opportunities to share and showcase your work.

Notices and Disclaimers

Codeplay is an Intel company.

Performance varies by use, configuration and other factors. Learn more at www.Intel.com/PerformanceIndex. Results may vary.

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates.

No product or component can be absolutely secure. Your costs and results may vary.

Intel technologies may require enabled hardware, software or service activation.

Intel does not control or audit third-party data. You should consult other sources to evaluate accuracy

Blender 3.6 LTS Includes Hardware-Accelerated Ray Tracing through Intel® Embree on Intel® GPUs

June 29, 2023 | Intel® Embree, Blender 3.6 LTS

Award-winning Intel® Embree is now part of the Blender 3.6 LTS release. With this addition of Intel’s high-performance ray tracing library, content creators can now take advantage of hardware-accelerated rendering for Cycles on Intel® Arc™ GPUs and Intel® Data Center Flex and Max Series GPUs while significantly decreasing rendering times with no loss in fidelity.

The 3.6 release also includes premier AI-based denoising through Intel® Open Image Denoise. Both tools are part of the Intel® oneAPI Rendering Toolkit (Render Kit), a set of open source rendering and ray tracing libraries for creating high-performance, high-fidelity visual experiences.

- Read the blog (includes benchmarks)

- Watch the demo [6:20]

- Download Blender 3.6 LTS

- Download the Render Kit

UKAEA Makes Fusion a Reality using Intel® Hardware and oneAPI Software Tools

June 29, 2023 | Intel® oneAPI Tools

Using Intel® hardware, oneAPI tools, and distributed asynchronous object storage (DAOS), the UK Atomic Energy Authority and the Cambridge Open Zettascale Lab are developing the next-generation engineering tools and processes necessary to design, certify, construct, and regulate the world’s first fusion powerplants in the United Kingdom. This aligns with the U.K.’s goals to accelerate the roadmap to commercial fusion power by the early 2040s.

The UKAEA team used supercomputing and AI to design the fusion power plant virtually. It will subsequently run a number of HPC workloads on a variety of architectures, including 4th Gen Intel® Xeon® processors as well as multi-vendor GPUs and FPGAs.

Why This Matters

Being able to program once for multiple hardware is key. By using oneAPI open, standards-based, multiarchitecture programming, the UKAEA team can overcome barriers of code portability and deliver performance and development productivity without vendor lock-in.

Learn more:

Resources:

Introducing the oneAPI Construction Kit

June 5, 2023

Codeplay brings open, standards-based SYCL programming to new, custom, and specialist hardware

Today Codeplay announced the latest extension of the oneAPI ecosystem with an open source project that allows code written in SYCL to run on custom architectures for HPC and AI.

The oneAPI Construction Kit includes a reference implementation for RISC-V vector processors but can be adapted for a range of processors, making it easy to access a wealth of supported SYCL libraries.

A benefit for users of custom architectures, rather than having to learn a new custom language, they can instead use SYCL to write high-performance applications efficiently – using a single codebase that works across multiple architectures. This means less time spent on porting efforts and maintaining separate codebases for different architectures, and more time for innovation.

What’s Inside the New Kit:

- A framework for bringing oneAPI support to new and innovative hardware – such as specialized AI accelerators

- Support for x86, ARM, and RISC-V targets

- Documentation

- Reference Design

- Tutorials

- Modular Software Components

Learn More & Get It

- Get it free at developer.codeplay.com

- Watch the demo [2:32]

- Read the blog from Codeplay Principal SW Engineer, Colin Davidson

- Get the documentation

Intel Delivers AI-Accelerated HPC Performance, Uplifted by oneAPI

May 22, 2023 | Intel® oneAPI Tools

ISC’23 takeaway: Broadest, most open HPC+AI portfolio powers performance, generative AI for science

Intel’s keynote at International Super Computing 2023 underscored how the company is making multiarchitecture programming easier for an open ecosystem, as well as driving competitive performance for diverse HPC and AI workloads based on a broad product portfolio of CPUs, GPUs, AI accelerators, and oneAPI software.

Here are the highlights.

Hardware:

- Independent software vendor Ansys showed the Intel® Data Center GPU Max Series outperforms NVIDIA H100 by 50% on AI-accelerated HPC applications, in addition to an average improvement of 30% over H100 on diverse workloads.*

- The Habana Gaudi 2 deep learning accelerator delivers up to 2x faster AI performance over NVIDIA A100 for DL training and inference.*

- Intel® Xeon CPUs (including the Max Series and 4th Gen) deliver, respectively, 65% speedup over AMD Genoa for bandwidth-limited problems and 50% average speed-up over AMD Milan.*

Software:

- Worldwide, about 90% of all developers benefit from or use software developed for or optimized by Intel.*

- oneAPI has been demonstrated on diverse CPU, GPU, FPGA and AI silicon from multiple hardware providers, addressing the challenges of single-vendor accelerated programming models.

- New features in the latest oneAPI tools—such as OpenMP GPU offload, extended support for OpenMP and Fortran, and optimized TensorFlow and PyTorch frameworks and AI tools—unleash the capabilities of Intel’s most advanced HPC and AI CPUs and GPUs.

- Real-time, ray-traced scientific visualization with hardware acceleration is now available on Intel GPUs, and AI-based denoising completes in milliseconds.

The oneAPI SYCL standard implementation has been shown to outperform NVIDIA native system languages; case in point: DPEcho SYCL code run on Max Series GPU outperformed by 48% the same CUDA code run on NVIDIA H100.

Intel is committed to serving the HPC and AI community with products that help customers and end-users make breakthrough discoveries faster. Our product portfolio spanning Xeon Max Series CPUs, Max Series GPUs, 4th Gen Xeon and Gaudi 2 are outperforming the competition on a variety of workloads, offering energy and total cost of ownership advantages, democratizing AI and providing choice, openness and flexibility.

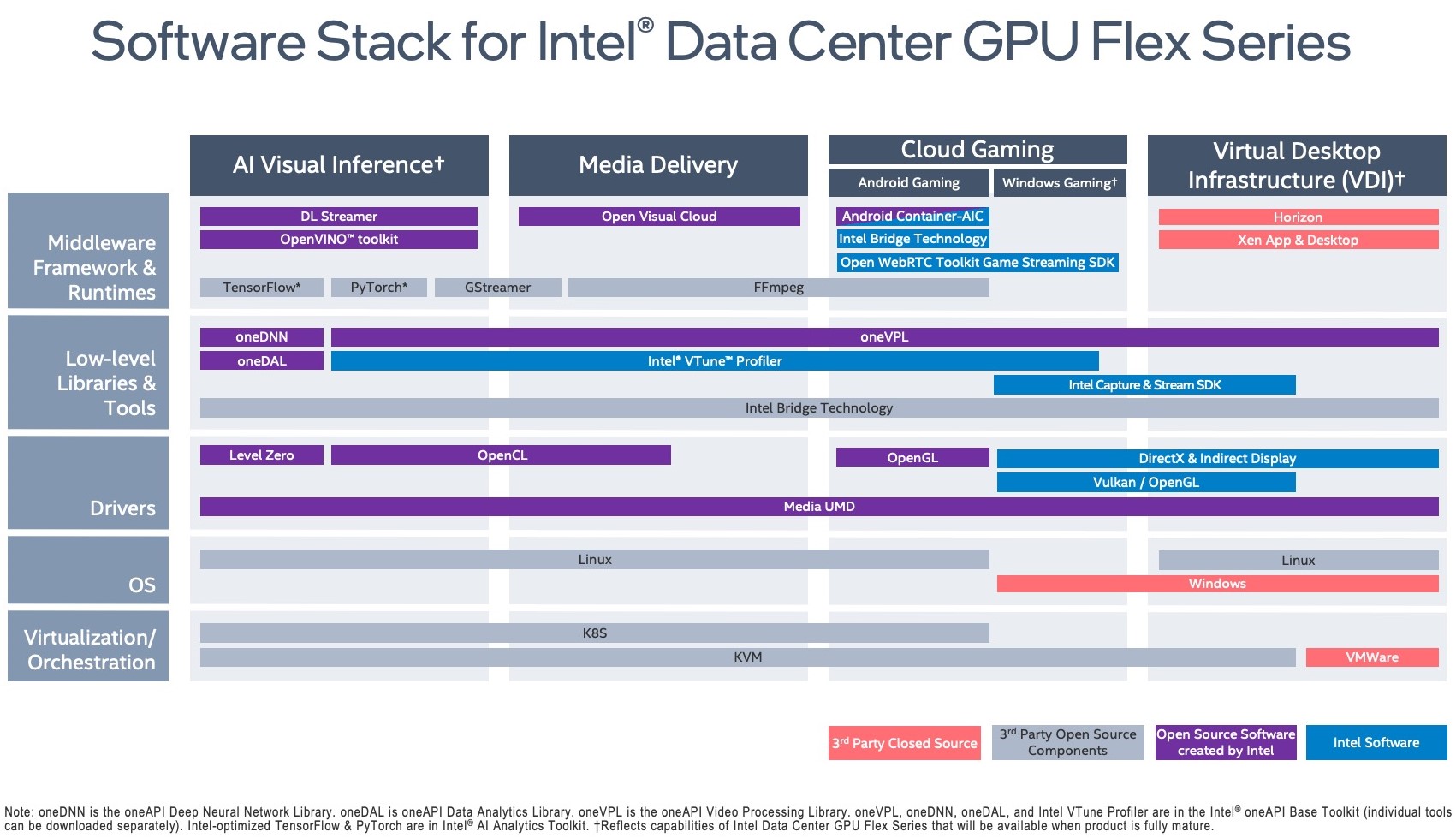

Intel Flex Series GPUs Expanded with Open Software Stack

May 18, 2023 | Software for Intel® Data Center GPU Flex Series

New software updates optimize workloads for cloud gaming, AI inference, media acceleration & digital content creation

Introduced as a flexible, general-purpose GPU for the data center and the intelligent visual cloud, the Intel® Data Center GPU Flex Series was expanded with new production-level software to optimize workloads for cloud gaming, AI inference, media acceleration, digital content creation, and more. This GPU platform has an open and full software stack, no licensing fees, and a unified programming model for CPUs and GPUs for performance and productivity via oneAPI.

New Software Capability Highlights:

- Windows Cloud Gaming – Tap into the GPU’s power for remote gaming with a new reference stack.

- AI Inference – Boost deep learning and visual inference in applications used for smart city, library indexing and compliance, AI-guided video enhancement, intelligent traffic management, smart buildings and factories, and retail.

- Digital Content Creation – Deliver real-time rendering tapping into dedicated hardware acceleration, complete AI-based denoising in milliseconds.

- Autonomous Driving – Utilize Unreal Engine 4 to advance training and validation of AD systems.

Learn what comprises the open software stack, available tools, and how to get started with pre-configured containers.

2023.1.1 Release of Intel AI Analytics Toolkit Includes New Features & Fixes

May 3, 2023 | Intel® AI Analytics Toolkit

The latest release of the AI Kit continues to help AI developers, data scientists, and researchers accelerate end-to-end data science and analytics pipelines on Intel® architecture.

Highlights

- Intel® Neural Compressor optimizes auto- and multi-node tuning strategy and large language model (LLM) memory.

- Intel® Distribution of Modin introduces a new, experimental NumPy API that provides basic support for distributed numerical calculations.

- Model Zoo for Intel® Architecture now supports Intel® Data Center GPU Max Series and extends support for dataset downloader and data connectors.

- Intel® Extension for TensorFlow now supports TensorFlow 2.12 and adds Ubuntu 22.04 and Red Hat Enterprise Linux 8.6 to the list of supported platforms.

- Intel® Extension for PyTorch is now compatible with Intel® oneAPI Deep Neural Network Library (oneDNN) 3.1, which improves on PyTorch 1.13 operator coverage.

See the AI KIt release notes for full details.

More References

Explore Ready-to-Use Code Samples for CPUs, GPUs, and FPGAs

April 20, 2023 | oneAPI & AI Code Samples

Intel’s newly launched Code Samples portal provides direct access to a sizable (and always growing) collection of open source, high-quality, ready-to-use code that can be used to develop, offload, and optimize multiarchitecture applications.

Each sample is purpose-built to help any developer at any level understand concepts and techniques for adapting parallel programming methods to heterogeneous compute; they span high-performance computing, code and performance optimization, AI and machine learning, and scientific or general graphics rendering.

No matter their experience level, developers can find a variety of useful samples—all resident in the GitHub repository—with helpful instructions and commented code.

VMWare-Intel Collaboration Delivers Video and Graphics Acceleration via AV1 Encode/Decode on Intel® GPUs

April 11, 2023 | Intel® Arc™ Graphics, Intel® Data Center GPU Flex Series

Next-gen, multimedia codec offers more compression efficiency and performance

The latest release of VMware Horizon supports Intel® GPUs and provides media acceleration enabled by Intel® oneAPI Video Library (oneVPL). With Intel GPU support, VMware customers have greater choice, flexibility, and cost options on a wider range of hardware systems for deployment without being locked to a single GPU vendor. Running VMware Horizon on systems with Intel GPUs does not require license server setup, licensing costs, or ongoing support costs.

This Horizon release for desktops and servers utilizes AV1 encoding, optimized by oneVPL, on both Intel® Arc™ graphics and Intel® Data Center GPU Flex Series. The solution also delivers fast hardware encoding on supported Intel® Xe architecture-based and newer GPUs (integrated and discrete). With a GPU-backed virtual machine (VM), users can have a better media experience with improved performance, reduced latency, more consistent frames per second, and lower CPU utilization.

Now Available: Intel® oneAPI 2023.1 Tools

April 4, 2023 | Intel® oneAPI and AI Tools

Delivering new performance and code-migration capabilities

The just-released Intel® oneAPI 2023.1 tools augment the latest Intel® architecture features with high-bandwidth memory analysis, photorealistic ray tracing and path guiding, and extended CUDA-to-SYCL code migration support. Additionally, they continue to support the latest update of Codeplay’s oneAPI plugins for NVIDIA and AMD that make it easier to write multiarchitecture SYCL code. (These free-to-download plugins deliver quality improvements, support Joint_matrix extension and CUDA 11.8/testing 12, and enable gfx1032 for AMD. The AMD plugin backend now works with ROCm 5.x driver.)

2023.1 Highlights:

Compilers & SYCL Support

- Intel® oneAPI DPC++/C++ Compiler delivers AI acceleration with BF16 full support, auto-CPU dispatch, and SYCL kernel properties, and adds more SYCL 2020 and OpenMP 5.0 and 5.1 features to improve productivity and boost CPU and GPU performance.

- Intel® oneAPI DPC++ Library (oneDPL) improves performance of the sort, scan, and reduce algorithms.

- Intel® DPC++ Compatibility Tool (based on the open source SYCLomatic project) delivers easier CUDA-to-SYCL code migration with support for the latest release of CUDA’s headers, and adds more equivalent SYCL language and oneAPI library mapping functions such as runtime, math, and neural network domains.

Performance Libraries

- Intel® oneAPI Math Kernel Library (oneMKL) improves data center GPU performance via new real FFTs, plus 1D and 2D optimizations, random number generators, and Sparse BLAS and LAPACK inverse optimizations.

- Intel® MPI Library enhances performance for collectives using GPU buffers and default process pinning on CPUs with E-cores and P-cores.

- Intel® oneAPI Threading Building Blocks (oneTBB) improves robustness of thread-creation algorithms on Linux and provides full support of Thread Sanitizer on macOS and full-hybrid Intel® CPU support.

- Intel® oneAPI Data Analytics Library (oneDAL) is reduced in size by 30%.

- Intel® oneAPI Collective Communications Library (oneCCL) improves scaling efficiency of the Scaleup algorithms for Alltoall and Allgather and adds collective selection for scaleout algorithm for device (GPU) buffers.

- Intel® Integrated Performance Primitives (Intel® IPP) expands cryptography offerings with CCM/GCM modes, which enables Crypto Multi-Buffer for greater performance compared to scalar implementations, and adds support for asymmetric cryptographic algorithm SM2 for key exchange protocol and encryption/decryption APIs.

Analysis & Debug

- Intel® VTune™ Profiler identifies the best profile to gain performance utilizing high-bandwidth memory (HBM) on Intel® Xeon® Processor Max Series. It displays Xe Link cross-card traffic issues such as CPU/GPU imbalances, stack-to-stack traffic, and throughput and bandwidth bottlenecks on Intel® Data Center GPU Max Series.

- Intel® Distribution for GDB adds debug support for Intel® Arc™ GPUs on Windows and improves the debug performance on Linux for Intel discrete GPUs.

Rendering & Visual Computing

- Intel® Open Path Guiding Library (Intel® Open PGL) is integrated in Blender and Chaos V-Ray and provides state-of-the-art path-guiding methods for rendering.

- Intel® Embree supports Intel Arc GPUs and Intel® Data Center GPU Flex Series, and delivers performance increases on 4th Gen Intel® Xeon® processors per Phoronix benchmarks.

- Intel® OSPRay Studio add functionality from open Tiny EXR, Tiny DNG (for .tiff files), and Open Image IO.

oneAPI tools drive ecosystem innovation

oneAPI tools adoption is ramping multiarchitecture programming on new accelerators, and the ecosystem is rapidly pioneering unique solutions using the open, standards-based, unified programming model. Here are the most recent:

- Cross-platform: Purdue University launched a oneAPI Center of Excellence to advance AI and HPC teaching in the United States.

- Cloud: University of Tennessee launched oneAPI Center-of-Excellence Research which enabled a cloud-based Rendering as a Service (RaaS) learning environment for students.

- AI: Hugging Face accelerated PyTorch Transformers on 4th Gen Intel Xeon processors (explore part 1 and part 2), and HippoScreen increased AI performance by 2.4x to improve efficiency and build deep learning models.

- Graphics & Ray Tracing: Thousands of artists, content creators, and 3D experts can easily access advanced ray tracing, denoising, and path guiding capabilities through Intel rendering libraries integrated in popular renderers including Blender, Chaos V-Ray, and DreamWorks open source MoonRay.

Learn More

- Explore Intel oneAPI & AI tools >

- New to SYCL? Get started here >

- Bookmark the oneAPI Training Portal – Learn the way you want to with learning paths, tools, on-demand training, and opportunities to share and showcase your work.

Notices and Disclaimers

Codeplay is an Intel company.

Performance varies by use, configuration and other factors. Learn more at www.Intel.com/PerformanceIndex. Results may vary.

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates.

No product or component can be absolutely secure. Your costs and results may vary.

Intel technologies may require enabled hardware, software or service activation.

Intel does not control or audit third-party data. You should consult other sources to evaluate accuracy

Purdue Launches oneAPI Center of Excellence to Advance AI & HPC Teaching in the U.S.

March 27, 2023 | oneAPI, Intel® oneAPI Toolkits

Building oneAPI multiarchitecture programming concepts into the ECE curriculum

Purdue University will establish a oneAPI Center of Excellence on its West Lafayette campus. Facilitated through Purdue University’s Elmore Family School of Electrical and Computer Engineering (ECE), the center will take students’ original AI and HPC research projects to the next level through teaching oneAPI in the classroom.

The facility will use curated content from Intel including teaching kits and certified instructor courses, and students will have access to the latest Intel® hardware and software via the Intel® Developer Cloud.

“Purdue’s track record as one of the most innovative universities in America with its world-changing research, programs and culture of inclusion is a perfect fit for the oneAPI Center of Excellence. By giving Purdue students access to the latest AI software and hardware, we’ll see the next generation of developers, scientists and engineers delivering innovations that will change the world. We’re excited to assist Purdue in embracing the next giant leap in accelerated computing.”

Just Released the 6 Final AI Reference Kits

March 24, 2023 | AI Reference Kits

A Total of 34 Kits to Streamline AI Solutions

The final six AI reference kits, powered by oneAPI, are now available to help data scientists and developers more easily and quickly develop and deploy innovative business solutions with maximum performance on Intel® hardware:

- Visual Process Discovery for detecting UI elements in real time from inputted website screenshots (e.g., buttons, links, texts, images, headings, fields, labels, iframes) that users interacted with.

- Text Data Generation for generating synthetic text, such as the provided source dataset, using a large language model (LLM).

- Image Data Generation for generating synthetic images using generative adversarial networks (GANs).

- Voice Data Generation for translating input text data to generate speech using transfer learning with VOCODER models.

- AI Data Protection for minimizing challenges with PII (personally identifiable information) in the design and development stages such as data masking, data de-identification, and anonymization.

- Engineering Design Optimization for helping manufacturing engineers generate realistic designs whilst reducing manufacturing costs and accelerating product development processes.

Learn more about the AI ref kits

DreamWorks Animation’s Open Source MoonRay Software Optimized via Intel® Embree

March 15, 2023 | Intel® oneAPI Rendering Toolkit

Advancing Open Rendering Innovation

DreamWorks Animation’s production renderer is now open source, with photo-realistic ray-tracing acceleration provided by Intel® Embree, a high-performance ray-tracing library that’s part of the oneAPI Rendering Toolkit.

Formerly an in-house Monte Carlo ray tracer, Dreamworks’ MoonRay team worked with beta testers to adapt the code base—including enhancements and features—so it could be built and run outside of the company’s pipeline environment.

“As part of this release and in collaboration with DreamWorks, MoonRay users have access to Intel® technologies, Intel Embree, and oneAPI tools, as building blocks for an open and performant rendering ecosystem.”

2023.1 Release of Intel® AI Analytics Toolkit Supports Newest Intel® GPUs & CPUs

February 10, 20232 | Intel® AI Analytics Toolkit (AI Kit), AI Reference Kits

Powered by oneAPI to Maximize Multiarchitecture Performance

Today Intel launched the newest release of its AI Kit, with tools optimized to set free the full power of the latest GPUs (Intel® Data Center GPU Max Series and Intel® Data Center GPU Flex Series) and CPUs (4th Gen Intel® Xeon® Scalable and Intel® Xeon® Max Series processors).

Using the latest Toolkit, developers and data scientists can more effectively and efficiently accelerate end-to-end training and inference of their AI workloads, particularly on the new hardware.

Download the 2023.1 Intel AI Analytics Toolkit

New Software Features and Hardware Support

Here are some of the highlights. Get the full details in the release notes.

- Build DL models optimized for improved inference and performance with quantization and distillation; includes support for Intel® Extension for TensorFlow v1.1.0, Intel® Extension for PyTorch v1.13.0, PyTorch 1.13, and TensorFlow 2.10.

- Enable tuning strategy refinement, training for sparsity (block wise) enhancements, and Neural Coder integration.

- In Intel Xeon processors, deliver superior DL performance by enabling advanced capabilities (including Intel® AMX, Intel® AVX-512, VNNI, and bfloat16).

- In Data Center GPUs, deliver the same with Intel® XMX.

Model Zoo for Intel® Architecture [github]

- New precisions—BF32 and FP16 for PyTorch BERT Large + Intel Neural Compressor INT8 quantized models—support TensorFlow image-recognition topologies ResNet50, ResNet101, MobileNetv1, and Inception v3).

- Supports Intel® Data Center Flex Series for Intel Optimization for PyTorch and Intel Extension for TensorFlow.

Intel® Extension for TensorFlow [github]

- Supports Intel Data Center GPUs and includes Intel® Optimization for Horovod v0.4 to support distributed training on the new GPU Max Series.

- Co-works with stock TensorFlow 2.11 and 2.10.

Intel® Optimization for PyTorch

- Improve training and inference with native Windows support for ease-of-use/integration and BF16 and INT8 operator optimizations with oneDNN quantization backend.

- Improve performance on the new Intel CPUs and GPUs when used with Intel’s PyTorch extension.

- oneDNN and optimized deep learning frameworks, including TensorFlow and PyTorch, enable Intel® Xe Matrix Extensions (Intel® XMX) on the data center GPUs delivering increased, competitive performance across a wide range of market segments.

- Additional performance gains are provided by Intel’s extensions for TensorFlow and PyTorch, both of which have native GPU support.

Learn More

- Build, Deploy & Scale AI Solutions across the Enterprise

- Intel® AI & Machine Learning Tools

- Explore workload types, oneAPI tools, and other resources for the new GPUs and CPUs

- AI Analytics Code Samples [github]

- Intel Extension for PyTorch [github]

- Intel Extension for TensorFlow [github]

Now Available: 6 New AI Reference Kits

February 10, 20232 |AI Reference Kits

Next 6 AI Reference Kits Bolster AI Acceleration Across Multiple Industries and Architectures… FREE

Since the fall of 2022, Intel has collaborated with Accenture to introduce AI reference kits covering industries such as energy & utilities, financial services, health & life sciences, retail, semiconductor, and telecommunications.

Today, 6 more join the list (almost 30 total!). All are powered by oneAPI and can be applied freely to an increasing complement of AI workloads.

Learn more and download the AI Ref Kits

The Rundown

Below is an overview of the next AI Ref Kits available, which are powered by oneAPI including optimized frameworks and oneAPI libraries, tools, and other components to maximize AI performance on Intel® hardware:

- Traffic Camera Object Detection for developing a computer vision model to predict the risk of vehicle accidents by analyzing images from traffic cameras in real time.

- Computational Fluid Dynamics for developing a deep learning model to numerically solve equations calculating fluid-flow profiles.

- AI Structured Data Generation for developing a model to synthetically generate structured data, including numeric, categorical, and time series.

- Structural Damage Assessment for developing a computer vision model using satellite images to assess the severity of damage caused by natural disasters.

- Vertical Search Engine for developing a natural language processing (NLP) model for semantic search through documents.

- Data Streaming Anomaly Detection for developing a deep learning model to help detect anomalies in sensor data that monitors equipment conditions.

Learn More

- Intel Releases AI Reference Kits [press release]

- Intel Releases Open Source Reference Kits [blog]

Just Launched: New Intel® CPUs and GPUs

January 10, 2023 | Intel® oneAPI and AI Tools

Today, Intel marked one of the most important product launches in company history with the unveiling of its highly anticipated CPU and GPU architectures:

- 4th Gen Intel® Xeon® Scalable processors (code-named Sapphire Rapids)

- Intel® Xeon® CPU Max Series (code-named Sapphire Rapids HBM)

- Intel® Data Center GPU Max Series (code-named Ponte Vecchio)

These feature-rich product families bring scalable, balanced architectures that integrate CPU and GPU with the oneAPI open software ecosystem, delivering a leap in data center performance, efficiency, security, and new capabilities for AI, the cloud, the network, and exascale.

Scale a Single Code Base across Even More Architectures

When coupled with the 2023 Intel® oneAPI and AI tools, developers can create single source, portable code that fully activates the advanced capabilities and built-in acceleration features of the new hardware.

- 4th Gen Intel Xeon & Intel Max Series (CPU) processors provide a range of features for managing power and performance at high efficiency, including these instruction sets and built-in accelerators: Intel® Advanced Matrix Extensions, Intel® QuickAssist Technology, Intel® Data Streaming Accelerator, and Intel® In-Memory Analytics Accelerator.1

- Activate Intel® AMX support for int8 and bfloat16 data types using oneAPI performance libraries such as oneDNN, oneDAL, and oneCCL.

- Drive orders of magnitude for training and inference into TensorFlow and PyTorch AI frameworks which are powered by oneAPI and already optimized to enable Intel AMX.

- Deliver fast HPC applications that scale with techniques in vectorization, multithreading, multi-node parallelization, and memory optimization using the Intel® oneAPI Base Toolkit and Intel® oneAPI HPC Toolkit.

- Deliver high-fidelity applications for scientific research, cosmology, motion pictures, and more that leverage all of the system memory space for even the largest data sets using the Intel® oneAPI Rendering Toolkit.

- Explore workload types, oneAPI tools, and other resources for these new CPUs >

- Intel Data Center GPU Max Series is designed for breakthrough performance in data-intensive computing models used in AI and HPC such as physics, financial, services, and life sciences. This is Intel’s highest performing, highest density discrete GPU—it has more than 100 billion transistors and up to 128 Xe cores.

- Activate the hardware’s innovative features—Intel® Xe Matrix Extensions, vector engine, Intel® Xe Link, data type flexibility, and more—and realize maximum performance using oneAPI and AI Tools.

- Migrate CUDA* code to SYCL* for easy portability across multiple architectures—including the new GPU as well as those from other vendors—with code migration tools to simplify the process.

- Explore workload types, oneAPI tools, and other resources for the new GPU >

“The launch of 4th Gen Xeon Scalable processors and the Max Series product family is a pivotal moment in fueling Intel’s turnaround, reigniting our path to leadership in the data center, and growing our footprint in new arenas.” – Sandra Rivera, Intel Executive VP and GM of Datacenter and AI Group

Learn More

- Get the details

- New Intel oneAPI 2023 Tools Maximize Value of Upcoming Intel Hardware

- Compare CPUs, GPUs, and FPGAs for oneAPI Compute Workloads

- [Programming Guide] Port Intel® C++ Compiler Classic to Intel® oneAPI DPC++/C++ Compiler

- [On-Demand Webinar] Tune Applications on CPUs & GPUs with an LLVM*-Based Compiler from Intel

1The Intel Max Series processor (CPU) also offers 64 gigabytes of high bandwidth memory (HBM2e), significantly increasing data throughput for HPC and AI workloads.

Intel’s 2023 oneAPI & AI Tools Now Available in the Intel® Developer Cloud

December 16, 2022 | Intel® oneAPI and AI Tools, Intel® Developer Cloud, oneAPI initiative