High performance computing (HPC) users often have to manage different software and package versions, dependencies, and configurations for performance, compatibility, and accuracy testing. Such efforts are time-consuming, so an efficient package management tool called Supercomputer PACKage manager (Spack*) was developed at Lawrence Livermore National Laboratory. Spack has gained traction in the HPC community because of its many advantages: it is open source, offers a simple spec-based syntax, and gives package authors and maintainers a Pythonic interface for handling different builds of the same package. It is worth noting that Spack recently won the 2023 HPCwire Editor’s Choice Award for Best HPC Programming Tool or Technology.

This article provides Spack recipes and guidelines for building optimal executables of three critical HPC applications that, by default, may not be configured to use the Intel® tools, libraries, and settings needed for the highest performance on Intel® hardware. In this article, the term “optimal executables” therefore refers to the proper use of such tools through Spack, giving Intel® HPC customers and partners best-known methods for easily building the targeted open-source applications while achieving optimal performance on Intel® Xeon® Scalable processors. These recipes have been tested on four generations of Intel Xeon Scalable processors, running on Amazon Web Services* (AWS*) and Google Cloud Platform* (GCP*), and deliver performance on par with the reference Intel-optimized, manually built binaries (hereinafter referred to as “the reference build”).

Target Applications

HPC applications solve complex problems in science, engineering, and business. The Spack list of supported HPC packages is continually growing. The following applications, drawn from different HPC verticals, were chosen for this article because of their widespread use in industry, research, and academia:

- Weather Research and Forecasting Model (WRF*) is a numerical weather prediction model designed for atmospheric modeling and operational forecasting. WRF’s build process is considered fairly complicated because it involves a series of dependencies, so it is used here to assess Spack’s ability to simplify the build.

- Large-scale Atomic/Molecular Massively Parallel Simulator (LAMMPS) is a classical molecular dynamics simulator with a focus on materials modeling, though it can serve as a generic particle simulator at different scales. LAMMPS’s build process is straightforward, but its performance is sensitive to the choice of compiler, instruction sets, performance libraries, etc. It therefore helps track any potential performance degradation introduced during the build process.

- Open Source Field Operation and Manipulation (OpenFOAM*) is an open-source toolbox for numerical modeling and for pre- and post-processing of continuum mechanics problems, mostly for computational fluid dynamics. It has a relatively long compile process with cross-compiler dependencies, so it is a useful application for assessing Spack’s ability to handle cross-compiler dependencies and long compilations.

System Configuration

Cloud service providers (CSPs) such as AWS and GCP offer users on-demand, open-access, scalable computing resources for their applications and data, while avoiding the upfront cost and difficulties associated with on-premises systems. In this article, publicly available CSP instances are used to evaluate the performance of the Spack recipes so that readers can reproduce the results. Tables 1 and 2 summarize the AWS and GCP instances, respectively. Between the two CSPs, four different generations of Intel Xeon processors are included to ensure that the provided Spack guidelines are comprehensive, useful, and tested across different Intel architectures.

| Configuration | AWS c5n.18xl | AWS c6i.32xl |
|---|---|---|
| Test by | Intel Corporation | Intel Corporation |
| CSP/Region | AWS us-east-2 | AWS us-east-2 |
| Instance Type | c5n.18xl | c6i.32xl |
| # vCPUs | 72 | 128 |
| Sockets | 2 | 2 |
| Threads per core | 2 | 2 |
| NUMA Nodes | 2 | 2 |
| Iterations and result choice | Median of top 3 iterations | Median of top 3 iterations |
| Architecture | Skylake | Ice Lake |
| Memory Capacity | 192 GB, 2666 MT/s | 256 GB, 3200 MT/s |
| SKU | Intel Xeon 8124M | Intel Xeon 8375C |
| Storage Type | Amazon FSx for Lustre | Amazon FSx for Lustre |
| OS | CentOS Linux 7 (Core) | CentOS Linux 7 (Core) |
| Kernel | kernel-3.10.0-1160.88.1.el7.x86_64 | kernel-3.10.0-1160.88.1.el7.x86_64 |
| Reference | | |

Table 1. Detailed system configurations of AWS* instances

| Configuration | GCP c2-stand-60 | GCP c3-highcpu-176 |
|---|---|---|
| Test by | Intel Corporation | Intel Corporation |
| CSP/Region | GCP us-central1 | GCP us-central1 |
| Instance Type | c2-stand-60 | c3-highcpu-176 |
| # vCPUs | 30 | 176 |
| Sockets | 2 | 2 |
| Threads per core | 2 | 2 |
| NUMA Nodes | 2 | 4 |
| Iterations and result choice | Median of top 3 iterations | Median of top 3 iterations |
| Architecture | Cascade Lake | Sapphire Rapids |
| Memory Capacity | 240 GB | 352 GB |
| SKU | Intel Xeon CPU @ 3.10GHz | Intel Xeon 8481C @ 2.70GHz |
| Storage Type | Standard Persistent Disk | Standard Persistent Disk |
| OS | CentOS Linux 7 (Core) | CentOS Linux 7 (Core) |
| Kernel | 3.10.0-1160.81.1.el7.x86_64 | 3.10.0-1160.81.1.el7.x86_64 |
| Reference | | |

Table 2. Detailed system configurations of GCP* instances
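When reproducing these configurations, it can help to confirm that an instance matches the topology rows of Tables 1 and 2 before building anything. The following is a minimal sketch, assuming a Linux instance where the standard `lscpu` utility is available; the helper name `summarize_topology` is hypothetical and not part of the original setup.

```shell
# Hypothetical helper: reads `lscpu` text on stdin and keeps only the
# fields that correspond to rows of Tables 1 and 2.
summarize_topology() {
    grep -E '^[[:space:]]*(CPU\(s\)|Socket\(s\)|Thread\(s\) per core|NUMA node\(s\)|Model name):'
}

# Only query the machine when lscpu is actually installed.
if command -v lscpu >/dev/null 2>&1; then
    lscpu | summarize_topology
fi

# Kernel release, comparable to the "Kernel" row of the tables.
uname -r
```

The filter is kept separate from the `lscpu` call so that it can also be applied to saved `lscpu` output from another machine.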

Performance Analysis

The performance of 13 workloads across the three applications (discussed here) was evaluated with the following considerations:

- While comparing Spack binaries to the reference build counterparts, the changes in the software stack have been minimized by leveraging similar (or the same) compiler and/or application versions.

- The same benchmark runner, environment variables/settings, workload type/version, input datasets, etc. were used to measure the performance of each build.

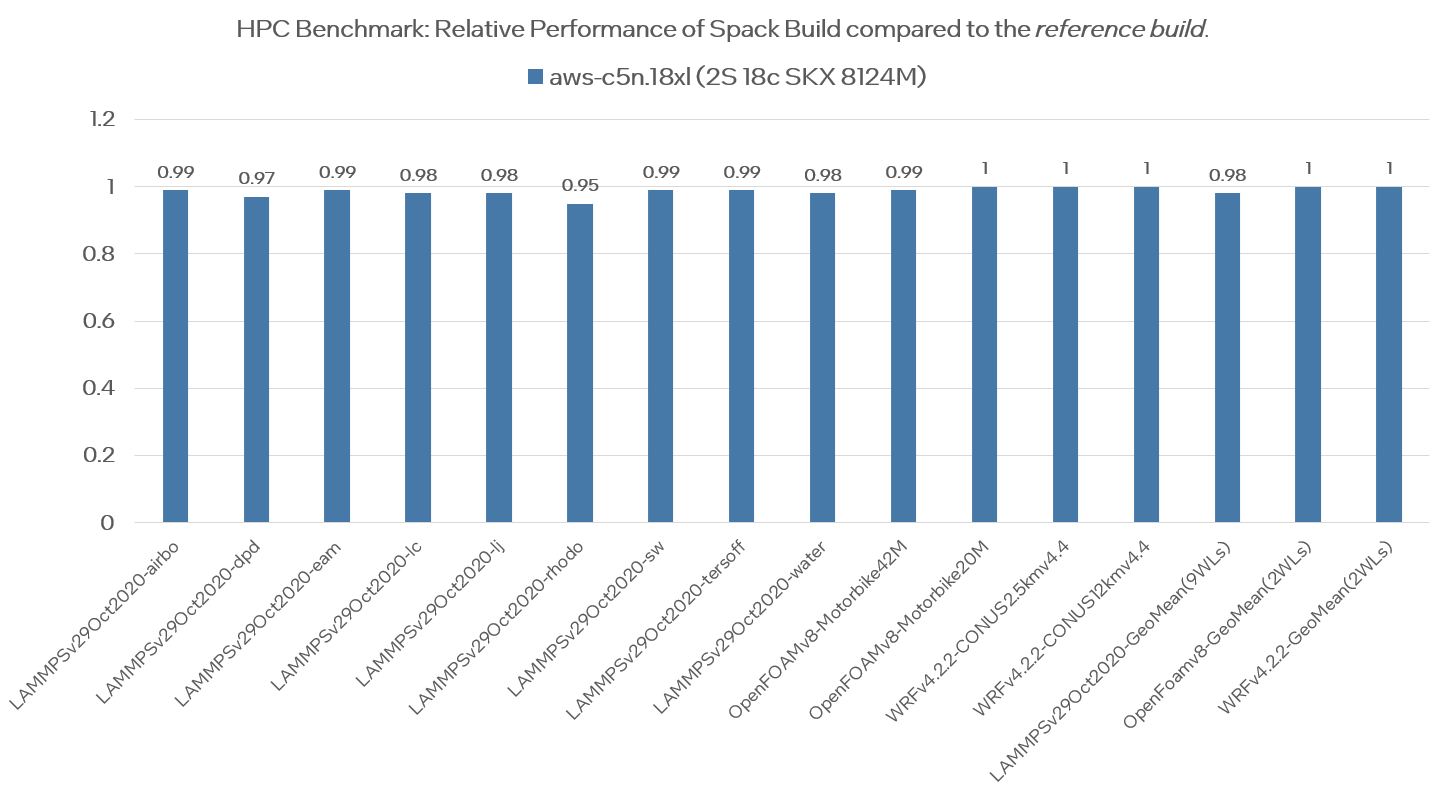

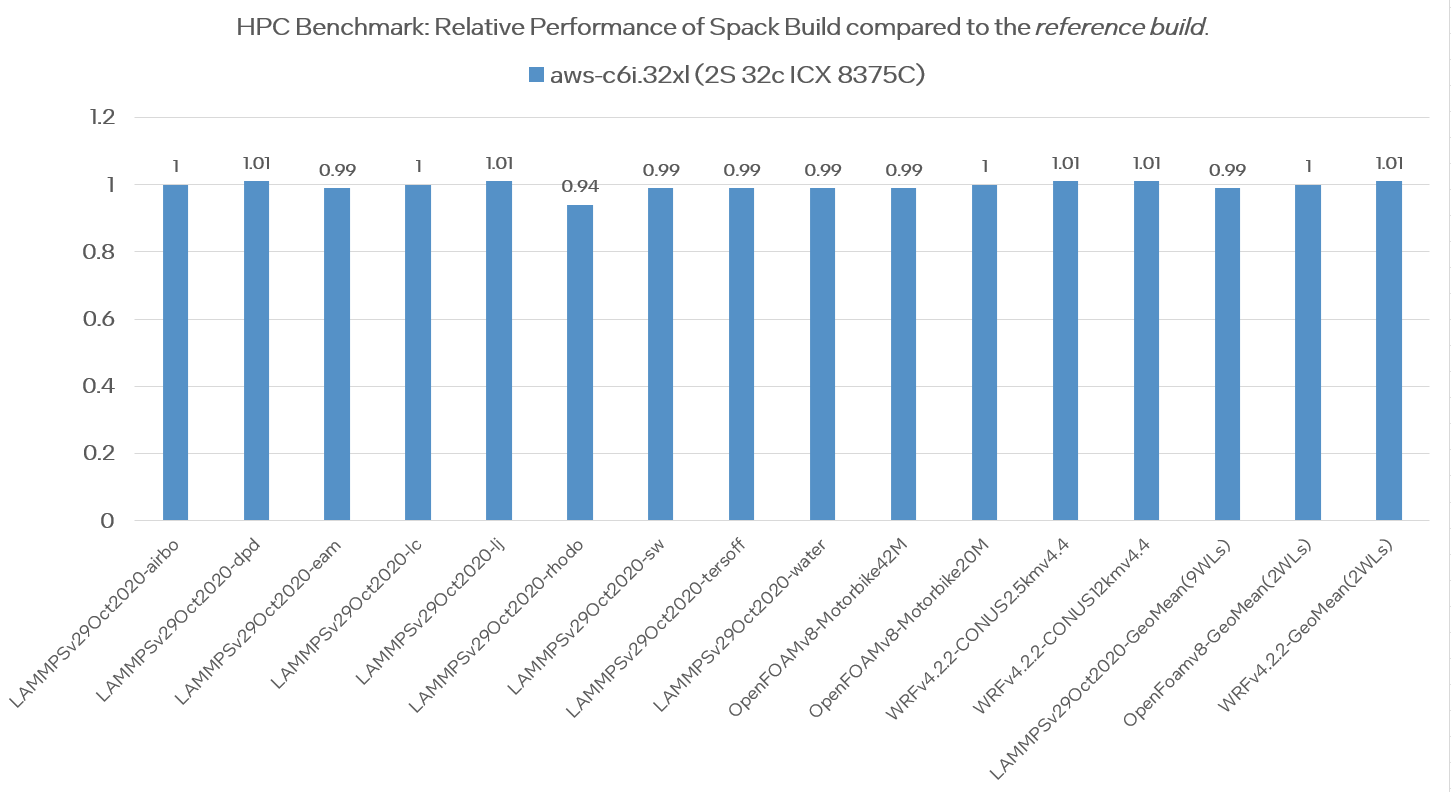

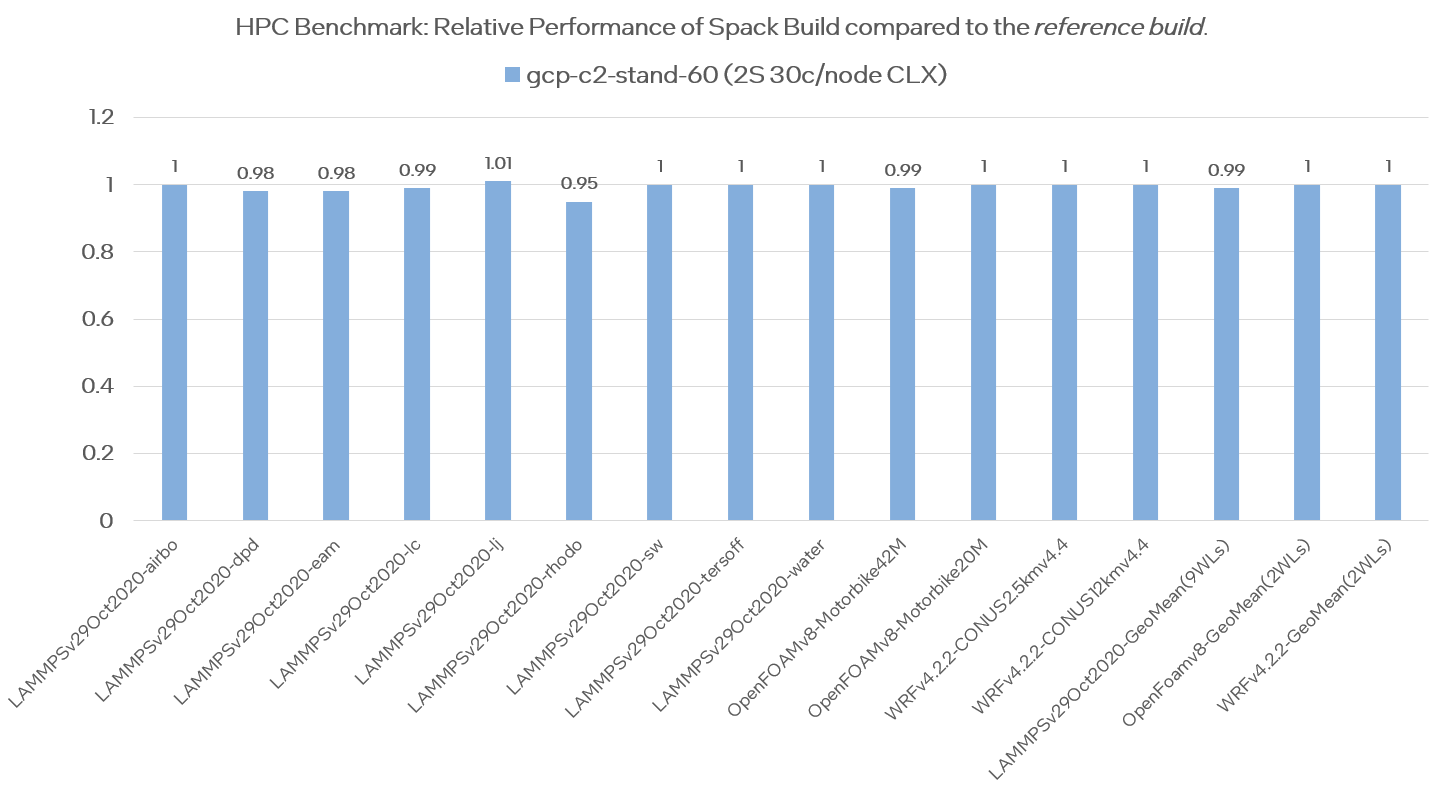

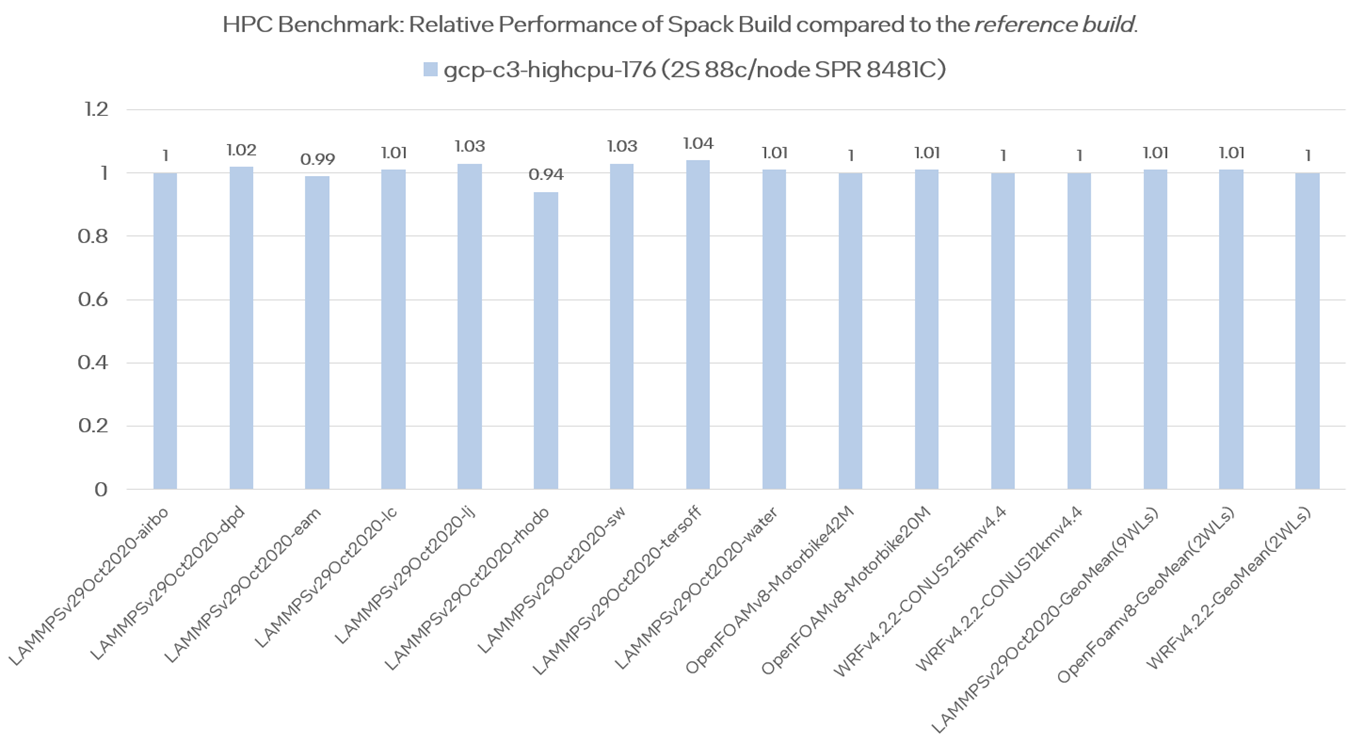

Figure 1 shows the performance of the Spack build compared to its reference build counterpart on the AWS instances. Allowing for a 10% error margin associated with run-to-run variation, the Spack builds replicate the expected performance of the reference build (almost) identically across all studied workloads. The same tests and execution process were carried out on the GCP instances, and the corresponding performance results are shown in Figure 2. Once again, the performance of the reference build and its Spack counterpart is nearly identical.

Figure 1. Relative performance of Spack* build vs reference build for HPC benchmarks tested on AWS*: (a) c5n.18xlarge, (b) c6i.32xlarge

Figure 2. Relative performance of Spack* build vs reference build for HPC benchmarks tested on GCP*: (a) c2-stand-60, (b) c3-highcpu-176
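The comparison behind Figures 1 and 2 combines the "median of top 3 iterations" rule from Tables 1 and 2 with the 10% run-to-run margin. The sketch below shows one way that arithmetic could be carried out. It assumes that higher scores are better and that "within the margin" means the Spack-to-reference ratio differs from 1.0 by at most 0.10; the function names and the sample scores are hypothetical placeholders, not measurements from this article.

```shell
# Median of the three best runs, assuming higher values are better.
median_of_top3() {
    printf '%s\n' "$@" | sort -rn | head -n 3 | sed -n '2p'
}

# Ratio of the Spack build score to the reference build score.
relative_performance() {
    awk -v spack="$1" -v ref="$2" 'BEGIN { printf "%.3f\n", spack / ref }'
}

# Succeeds when the ratio is within the margin of 1.0 (default 0.10).
within_margin() {
    awk -v ratio="$1" -v margin="${2:-0.10}" 'BEGIN {
        diff = ratio - 1.0
        if (diff < 0) diff = -diff
        exit !(diff <= margin)
    }'
}

# Placeholder scores for five iterations of each build (not real data).
spack_score="$(median_of_top3 101 98 100 97 99)"
ref_score="$(median_of_top3 102 100 99 101 98)"
ratio="$(relative_performance "$spack_score" "$ref_score")"

if within_margin "$ratio"; then
    echo "ratio ${ratio}: within the 10% margin"
else
    echo "ratio ${ratio}: outside the 10% margin"
fi
```

For time-based metrics, where lower is better, the sort order in `median_of_top3` would need to be reversed.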

It should be noted that there is no objectively defined “baseline” configuration for the studied HPC applications against which to compare the reference build (e.g., the WRF compile step offers more than 70 options to choose from). Therefore, no “Intel optimized vs. baseline” comparison has been made, to avoid any subjective conclusions.

Spack Recipes

The end-to-end installation of HPC applications depends on the system architecture, the availability of their dependencies, application compile time, etc. Spack provides “ease of use” by streamlining such an installation (and all the required dependencies) without any end-to-end time overhead compared to the manual process. Tables 3–5 outline the Spack recipes used to obtain the results given in the Performance Analysis section for LAMMPS, OpenFOAM, and WRF using the Spack v0.20.0 release. It is recommended to use these Spack recipes (or similar) to build optimal binaries for these critical HPC applications. Note that the prerequisites for using these recipes are a basic Spack installation and the installation of the Intel® oneAPI packages.
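The two prerequisites can be sketched as a short shell function. This is a minimal sketch under stated assumptions, not the authors' exact setup: the v0.20.0 tag comes from the article, while the function name `bootstrap_spack_prereqs`, the choice of `intel-oneapi-compilers@2022.1.0` (assumed to provide the `intel@2021.6.0` and `oneapi@2022.1.0` compilers named in the recipes), and the compiler sub-directories (taken from Spack's oneAPI documentation for releases of that period) are assumptions that should be checked against the installed version.

```shell
# Release tag from the article; the oneAPI version below is an assumption.
SPACK_RELEASE='v0.20.0'
ONEAPI_COMPILERS='intel-oneapi-compilers@2022.1.0'

# Hypothetical helper: defined here, but only runs when called explicitly.
bootstrap_spack_prereqs() {
    # 1. Fetch the Spack release and activate it in the current shell.
    git clone --depth 1 --branch "${SPACK_RELEASE}" \
        https://github.com/spack/spack.git || return 1
    . spack/share/spack/setup-env.sh || return 1

    # 2. Register compilers already present on the system (e.g., GCC).
    spack compiler find

    # 3. Install the Intel oneAPI compilers through Spack.
    spack install "${ONEAPI_COMPILERS}" || return 1

    # 4. Register the classic and oneAPI compilers (paths are assumptions).
    prefix="$(spack location -i "${ONEAPI_COMPILERS}")" || return 1
    spack compiler add "${prefix}/compiler/latest/linux/bin/intel64"
    spack compiler add "${prefix}/compiler/latest/linux/bin"

    # 5. List the registered compilers to confirm the expected entries.
    spack compilers
}
```

Calling `bootstrap_spack_prereqs` from an empty working directory performs the steps in order. The final listing is the place to confirm that the compiler versions referenced in Tables 3–5 are actually available.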

| Application | LAMMPS |
|---|---|
| Build | $ spack install lammps@20201029 +asphere +class2 +kspace +manybody +misc +molecule +mpiio +opt +replica +rigid +user-omp +user-intel %intel@2021.6.0 ^intel-oneapi-mkl ^intel-oneapi-mpi target=skylake_avx512 |
| Version and Workloads | V20201029. airebo, dpd, eam, lc, lj, rhodo, sw, tersoff, water. |
| Notes | No changes to Spack source code have been made. |

Table 3. Detailed Spack* recipes for optimal build of LAMMPS
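The LAMMPS recipe is a good illustration of Spack's spec-based syntax. The sketch below rebuilds the same spec piece by piece, with comments naming the role of each sigil. The variable names are hypothetical, and the script only prints the commands so that the spec can be reviewed (for example with `spack spec`, which shows how Spack resolves it) before a build is started.

```shell
# Pieces of the Table 3 spec, in the same order as the original command.
# Note: in Spack's syntax, attributes written after a ^name apply to that
# dependency, so the order of the pieces matters.
package='lammps@20201029'                           # name@version
variants='+asphere +class2 +kspace +manybody +misc +molecule +mpiio +opt +replica +rigid +user-omp +user-intel'  # +name enables a variant
compiler='%intel@2021.6.0'                          # %compiler@version
dependencies='^intel-oneapi-mkl ^intel-oneapi-mpi'  # ^name constrains a dependency
target='target=skylake_avx512'                      # microarchitecture constraint

lammps_spec="${package} ${variants} ${compiler} ${dependencies} ${target}"

# Print the commands instead of running them.
echo "spack spec ${lammps_spec}"      # preview the resolved spec
echo "spack install ${lammps_spec}"   # the build command from Table 3
```

Keeping the pieces in separate variables makes it easier to change one of them, such as a variant or the compiler version, while tracking the performance effect of that single change.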

| Application | OpenFOAM |
|---|---|
| Build | $ spack install openfoam-org@8%intel@2021.6.0 ^intel-oneapi-mpi target=skylake_avx512 ^scotch%gcc |
| Version and Workloads | V8. MotorBike 20M (250 iterations), MotorBike 42M (250 iterations). |
| Notes | No changes to Spack source code have been made. |

Table 4. Detailed Spack* recipes for optimal build of OpenFOAM*
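In the OpenFOAM recipe, `^scotch%gcc` requests that the scotch dependency be built with GCC while the rest of the stack uses the Intel compiler, which is the cross-compiler dependency mentioned earlier. Because the compile is long, it may be convenient to set the number of parallel build jobs explicitly and to run the install in the background with a log file. The sketch below only prints such a command; the job count, the log file name, and the use of `nohup` are assumptions, not part of the original recipe.

```shell
# Spec copied from Table 4.
openfoam_spec='openfoam-org@8%intel@2021.6.0 ^intel-oneapi-mpi target=skylake_avx512 ^scotch%gcc'

# Assumption: use one build job per online CPU.
build_jobs="$(getconf _NPROCESSORS_ONLN)"

# Hypothetical log file name for the long-running build.
log_file='openfoam-install.log'

# `spack install -j N` sets the number of parallel build jobs.
install_cmd="spack install -j ${build_jobs} ${openfoam_spec}"

# Print the background invocation instead of running it.
echo "nohup ${install_cmd} > ${log_file} 2>&1 &"
```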

| Application | WRF |
|---|---|
| Build | $ spack install wrf@4.2.2%intel@2021.6.0 build_type=dm+sm ^intel-oneapi-mpi ^perl%intel ^libxml2%intel ^tar%oneapi@2022.1.0 ^jasper@2.0.32 ^m4%gcc@8.5.0 |
| Version and Workloads | V4.2.2. CONUS-12km (v4.4), CONUS-2.5km (v4.4). |
| Notes | These suggested options to improve WRF’s timing can be mirrored in the Spack source code (here). Please note that such suggestions could affect accuracy (details). |

Table 5. Detailed Spack* recipes for optimal build of WRF*
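The WRF recipe pins several dependencies to specific compilers or versions, which is easy to misread in a single long line. As a rough aid, the sketch below splits the spec from Table 5 into its dependency constraints and prints one per line, followed by a `spack spec` command that can be used to preview the full resolved dependency tree. The helper name `list_dependency_constraints` is hypothetical, and the script assumes a POSIX-style shell (sh or bash) that splits unquoted variables on whitespace.

```shell
# Spec copied from Table 5.
wrf_spec='wrf@4.2.2%intel@2021.6.0 build_type=dm+sm ^intel-oneapi-mpi ^perl%intel ^libxml2%intel ^tar%oneapi@2022.1.0 ^jasper@2.0.32 ^m4%gcc@8.5.0'

# Hypothetical helper: print every ^dependency constraint in a spec,
# one per line, without the leading caret.
list_dependency_constraints() {
    # Intentionally unquoted so the spec is split on whitespace.
    for token in $1; do
        case "$token" in
            '^'*) echo "${token#?}" ;;
        esac
    done
}

list_dependency_constraints "$wrf_spec"

# Print the command that previews the resolved spec before installing.
echo "spack spec ${wrf_spec}"
```

Each printed line shows a dependency together with any compiler (`%`) or version (`@`) constraint attached to it, for example `m4%gcc@8.5.0`.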

Conclusions

HPC applications are instrumental in solving some of the most complex computational problems, but the process of building and testing such applications can be tedious, costly, and time-consuming. Spack gives HPC package users a simple, open-source, spec-based interface for automated building and dependency tracking. This article demonstrated that Spack can be used to build critical HPC applications simply and efficiently while matching the performance of the reference build. That performance was quantified on four different generations of Intel Xeon processors running on AWS and GCP. It is recommended to use the Spack recipes provided in this article (or similar) to build optimal binaries for the studied HPC applications.