For Simics® training and demo purposes, we often use Linux* running on the virtual platforms. Linux is free, open-source, and easy to get, and it has always been easy to work with. In the demos and training, we do things like customizing Linux setups; automating target operations using the command line; adding device drivers and kernel modules; inspecting the target hardware from the shell; accessing target hardware directly from user-level code; and debugging the kernel and drivers directly in source code. In the early days of Simics and embedded Linux, we built our own minimal configurations by hand to run on simple target systems. Most recently, we changed our default Linux demo and training setup to use Clear Linux*. This change showed us just how sophisticated modern Linux setups are, which is good in general but can also make some low-level details more complicated.

I remember crafting Linux bootable file systems from scratch, using loop-back mounts on a host Linux. I built my own cross-compilers for building the kernel and target software on the demo and training systems we used at the time. Once the compiler was available, I built a minimal target libc, copied it into the file system, crafted /etc/passwd and other system init files by hand, and added a busybox binary for some common commands. Getting this to work was a real team effort, as it was rather complicated to work through. In the end, we had a real Linux running with a very compact disk image only needing a few megabytes.

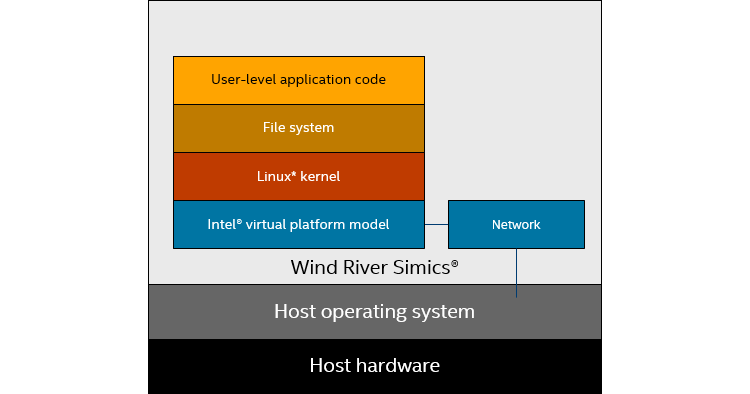

Figure 1 shows how things stack up: the Linux inside of Simics has nothing to do with the host operating system; it only sees the target virtual platform.

Figure 1: Target Linux running on a virtual platform

Since those days, Linux has changed significantly and gained innumerable features and capabilities. As Simics is perfectly capable of simulating full-size Intel® Architecture (IA) machines, it makes sense to run a standard Linux created for real hardware rather than creating our own setup from scratch. This has happened in several steps, using increasingly large and mainstream Linux setups.

The first step was to use Buildroot to create a minimal embedded Linux with a small busybox-based file system and a 2.6 kernel. The next step was a Yocto* Linux 1.8 setup with a 3.14 kernel. With Yocto, we still built the kernel, libraries, and disk images from scratch, but the Yocto tools automated the process and made the setup easy to configure and easy to repeat.

Recently, we moved to Clear Linux, a standard Linux distribution (distro), not a build-your-own-Linux kit like our previous approaches. A Linux distro reduces the work of setting up Linux to downloading and running an installer, just as you would on your own PC. The version of Clear Linux we used put us on the 4.20 kernel, with a much richer and more fully featured file system and system configuration. Using a modern Linux distribution is rather different from the Linux setups we used previously.

Building a Driver

The use of custom drivers for custom hardware is a key part of most of our demo and training process flows. After all, what fun is using a virtual platform if we cannot create custom hardware and access it from software? Once upon a time, this was a simple matter of writing bare-metal code to directly manipulate the virtual hardware, without any BIOS or operating system at all. Next, it turned into a Linux device driver, and on Intel Architecture (IA), it turned into writing PCI(e) drivers. PCI might sound scary, but the PCI and PCI Express (PCIe) subsystem in Linux is really easy to work with, and modeling PCIe devices in Simics is very easy, too.
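Before writing or loading a driver, it can help to confirm from the target shell that the kernel has actually enumerated the custom PCIe device. The sketch below does this in Python by scanning the standard sysfs directory `/sys/bus/pci/devices`, where each device entry has `vendor` and `device` files holding hex IDs. The function name and the example IDs are hypothetical; they are not the IDs of any particular Simics demo device.

```python
from pathlib import Path

# Standard sysfs location of PCI devices on Linux.
PCI_SYSFS_ROOT = "/sys/bus/pci/devices"


def find_pci_devices(vendor_id, device_id, root=PCI_SYSFS_ROOT):
    """Return the sysfs names (e.g. '0000:00:04.0') of all PCI functions
    whose vendor and device IDs match.

    Each device entry in sysfs has 'vendor' and 'device' files that hold
    the IDs as hex strings such as '0x8086'. Hypothetical helper.
    """
    matches = []
    root_path = Path(root)
    if not root_path.is_dir():
        return matches
    for dev_dir in sorted(root_path.iterdir()):
        try:
            vendor = int((dev_dir / "vendor").read_text().strip(), 16)
            device = int((dev_dir / "device").read_text().strip(), 16)
        except (OSError, ValueError):
            continue  # skip entries without readable ID files
        if vendor == vendor_id and device == device_id:
            matches.append(dev_dir.name)
    return matches
```

On the target, a call such as `find_pci_devices(0x8086, 0x1234)` (hypothetical IDs) returns one sysfs name per matching function; the `root` parameter exists only so the function can be tried against a fake directory tree.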

An advantage of the old Linux setups was that they all relied on cross compilation outside of Simics (even building a Yocto Linux for IA on an IA host counts as cross compilation). This meant that the disk images could be kept small, which is a big benefit when distributing the Linux setups to lots of people. However, this is not an option when using a Linux distribution, as-is.

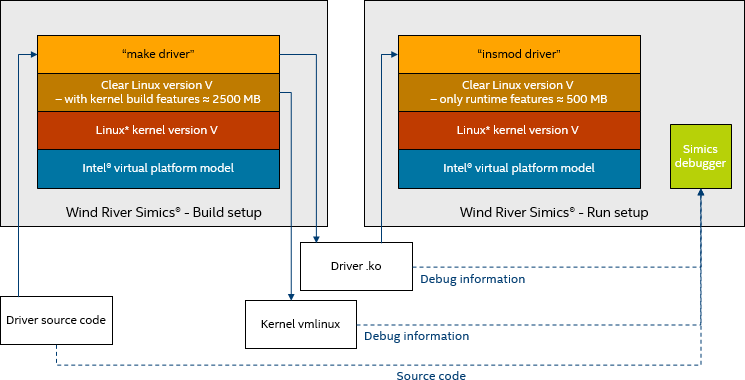

Instead, we ended up building the device driver on the target system itself (which is not a problem given Simics performance). The Linux image needed to build a driver is about five times the size of a basic runtime image. Thus, we ended up with the flow shown in Figure 2: the driver is built on a full-size development and build setup, and the resulting .ko file is saved to the host. Then, a new Simics session is started from the runtime image (without the build tools), and the driver is loaded from the host. In this way, we get the driver into the runtime image without carrying the overhead of the build setup.

Figure 2: Kernel Driver Build Flow
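On the full-size build image, an out-of-tree driver is typically built with the kernel's Kbuild system, using the standard external-module invocation `make -C /lib/modules/<release>/build M=<driver dir> modules`. The Python helper below only assembles that command line. It assumes the matching kernel build files are installed on the build image; the helper name and the `demo-driver` directory are hypothetical.

```python
import os
import shlex


def kbuild_module_command(module_src_dir, kernel_release=None):
    """Return the argv for building an out-of-tree kernel module with Kbuild.

    Standard external-module form:
        make -C /lib/modules/<release>/build M=<module dir> modules
    Must run on a system that has the kernel build files installed.
    """
    if kernel_release is None:
        kernel_release = os.uname().release  # same value as `uname -r`
    kernel_build_dir = f"/lib/modules/{kernel_release}/build"
    return ["make", "-C", kernel_build_dir,
            f"M={os.path.abspath(module_src_dir)}", "modules"]


if __name__ == "__main__":
    # Print the command for a hypothetical driver source directory.
    print(shlex.join(kbuild_module_command("demo-driver")))
```

The .ko file produced by this build is what gets saved to the host and later loaded into the runtime image.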

Keeping the source code on the host also makes it easier to debug the driver. Debugging, however, turned out to be more complex than with our earlier setups.

Technical side-note: in previous setups, the Linux device drivers had the “traditional” split into .text, .data, and .bss sections that would be familiar to anyone who has ever played with linking Unix applications. These sections have to be relocated so that the debug information lines up with the addresses where the kernel actually loaded the module. For Clear Linux, relocating the .text section was not enough to set breakpoints on anything interesting, because almost all the code had been moved out to the .text.unlikely section instead. The Linux driver build system assumes that any code that calls kernel logging functions is error-handling code, and thus unlikely to be executed, which in turn means that it goes into a separate section with the goal of increasing code locality for the common case. Since I pepper my kernel driver code with log messages for training purposes, it was all considered exceptional-case code.
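A minimal sketch of that relocation step, assuming a gdb-style debugger rather than the Simics debugger: the kernel publishes each loaded module's section addresses under `/sys/module/<name>/sections/`, and gdb's `add-symbol-file` command accepts the `.text` address plus `-s <section> <address>` pairs for other sections. Including `.text.unlikely` in that list is what makes the log-heavy code visible. The helper names are hypothetical, and reading real (non-zero) addresses from sysfs normally requires root.

```python
from pathlib import Path


def module_section_addresses(module_name, sysfs_root="/sys/module"):
    """Read the load addresses of a loaded module's ELF sections.

    The kernel exposes one file per section under
    /sys/module/<name>/sections/, each holding a hex address.
    Raises FileNotFoundError if the module is not loaded.
    """
    sections_dir = Path(sysfs_root) / module_name / "sections"
    addresses = {}
    for entry in sections_dir.iterdir():  # includes dot-named files
        try:
            addresses[entry.name] = int(entry.read_text().strip(), 16)
        except (OSError, ValueError):
            continue  # skip unreadable or non-address entries
    return addresses


def gdb_add_symbol_file(ko_path, addresses,
                        wanted=(".text.unlikely", ".data", ".bss")):
    """Build a gdb 'add-symbol-file' command that relocates .text plus
    any of the `wanted` sections the module actually has.
    Raises KeyError if there is no .text entry."""
    parts = ["add-symbol-file", ko_path, hex(addresses[".text"])]
    for name in wanted:
        if name in addresses:
            parts += ["-s", name, hex(addresses[name])]
    return " ".join(parts)
```

Sections missing from the module are simply skipped, so the same helper works for drivers with and without a separate .text.unlikely section.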

Loading a Driver on the Target

Before the driver could be used and debugged, it had to be loaded. This also presented an issue: the default configuration of Clear Linux does not allow unsigned drivers to be loaded. That makes total sense for a Linux deployed in any real setting, whether in the cloud, on a server, or on a desktop. It is not necessary, however, for a training setup running safely within a virtual platform, with no way to inflict damage on the host. We had to turn off this check to enable a simple user workflow, which is just a matter of setting up some configuration files and having systemd regenerate the kernel command line (all documented on the Clear Linux site).
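The configuration change can be sketched as writing a small kernel command-line fragment file. This assumes, based on our reading of the Clear Linux documentation, that the boot tooling collects `*.conf` files from `/etc/kernel/cmdline.d/` and that the parameter `module.sig_unenforce` relaxes module-signature enforcement; verify both against the current Clear Linux docs. The helper function is hypothetical, and its `root` argument lets it be tried on a scratch directory.

```python
from pathlib import Path


def write_cmdline_fragment(root, name, params):
    """Write <root>/etc/kernel/cmdline.d/<name>.conf with the given
    kernel parameters on one line and return the file's path.

    Assumption: the boot tooling appends the contents of these files
    to the kernel command line when boot entries are regenerated.
    """
    cmdline_dir = Path(root) / "etc" / "kernel" / "cmdline.d"
    cmdline_dir.mkdir(parents=True, exist_ok=True)
    fragment = cmdline_dir / f"{name}.conf"
    fragment.write_text(" ".join(params) + "\n")
    return fragment
```

On the target, running `write_cmdline_fragment("/", "allow-unsigned-modules", ["module.sig_unenforce"])` as root, then regenerating the boot entries as described in the Clear Linux documentation and rebooting, should make the parameter appear in `/proc/cmdline`.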

It was also a pain fiddling around with sudo to run code that accessed the driver, and it ended up being simpler to just log in as root for these kinds of exercises. Running as root sets a bad example, but getting output into a /dev node through sudo (a plain redirect fails because the unprivileged shell opens the file before sudo runs, so the usual workaround is to pipe into sudo tee), and then explaining to students just what is going on, is not worth the effort. It would take more time to explain the details of Linux command-line permission handling than to explain how Simics works.

Debugging the Kernel

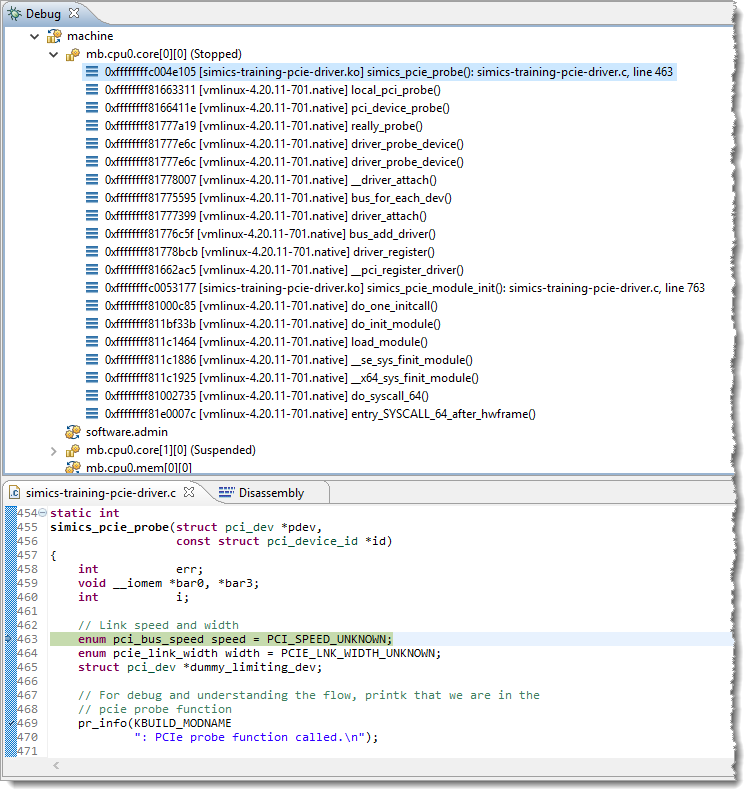

When debugging a driver, it is useful to also know which parts of the Linux kernel are involved when the driver is invoked. Even without source code, this can be very handy. Figure 3 shows an example, where the PCIe probe function is called immediately when the driver is registered in the module init function.

Figure 3. Stack trace showing how the demo driver simics_pcie_probe() function gets called.

Getting to a useful stack trace like the one in Figure 3 required turning off Kernel Address Space Layout Randomization (KASLR) in the Linux kernel. I could not find out where the kernel had been relocated, and thus could not work out the offset needed to make the debug information line up with the running kernel. That seems to be the point of KASLR in the first place. Thus, I followed the best known method for Linux kernel development and turned off KASLR.
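After rebooting with KASLR turned off, it is worth checking that the running kernel really got the `nokaslr` flag before relying on static addresses from vmlinux. The sketch below parses a kernel command line such as the contents of `/proc/cmdline`. It is deliberately simple: it does not handle quoted values containing spaces, and the absence of `nokaslr` does not prove KASLR is active, since a kernel can also be built without it (`CONFIG_RANDOMIZE_BASE`). The function names are hypothetical.

```python
def kernel_cmdline_params(cmdline):
    """Split a kernel command line (e.g. /proc/cmdline) into a dict that
    maps each parameter name to its value, or None for bare flags.
    Simplification: quoted values containing spaces are not handled."""
    params = {}
    for token in cmdline.split():
        name, sep, value = token.partition("=")
        params[name] = value if sep else None
    return params


def kaslr_disabled(cmdline):
    """True if 'nokaslr' appears as a flag on the given command line."""
    return "nokaslr" in kernel_cmdline_params(cmdline)


if __name__ == "__main__":
    with open("/proc/cmdline") as f:
        print("nokaslr present:", kaslr_disabled(f.read()))
```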

Debugging the kernel is also a clear case that shows the difference between cross compilation on the host and using a pre-built Linux distro. When building the kernel and file system on the host, it is trivial to get hold of the vmlinux file with debug information for the kernel. When using a distro, however, I had to download the vmlinux binary to the target file system (using the Clear Linux swupd tool) as part of setting up for the driver build. The vmlinux file was then saved to the host and used in the debug setup, as shown in Figure 2.
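Since the vmlinux file comes from the distro rather than from a local build, a quick check that it really carries DWARF debug information (a `.debug_info` section) can save a confusing debug session. The sketch below lists ELF section names using only the Python standard library. It assumes a 64-bit little-endian ELF file (which an IA kernel is), reads the whole file into memory, and ignores the extended section-numbering escape values; a tool such as `readelf -S` does the same job more robustly. The function names are hypothetical.

```python
import struct


def elf_section_names(path):
    """Return the section names of a 64-bit little-endian ELF file.

    Minimal sketch: reads the whole file into memory, supports only
    ELFCLASS64 / little-endian, and ignores the extended numbering
    escapes used by objects with very many sections.
    """
    with open(path, "rb") as f:
        data = f.read()
    # e_ident: magic, EI_CLASS == 2 (64-bit), EI_DATA == 1 (little-endian)
    if len(data) < 64 or data[:4] != b"\x7fELF" or data[4] != 2 or data[5] != 1:
        raise ValueError(f"{path}: not a 64-bit little-endian ELF file")
    # ELF64 file header (64 bytes); keep only the section-table fields.
    (_, _, _, _, _, _, e_shoff, _, _, _, _,
     e_shentsize, e_shnum, e_shstrndx) = struct.unpack_from(
        "<16sHHIQQQIHHHHHH", data, 0)
    if e_shnum == 0:
        return []

    def section_header(index):
        # First six ELF64 section-header fields:
        # sh_name, sh_type, sh_flags, sh_addr, sh_offset, sh_size
        return struct.unpack_from("<IIQQQQ", data, e_shoff + index * e_shentsize)

    # The section-name string table is itself a section.
    _, _, _, _, str_off, str_size = section_header(e_shstrndx)
    strtab = data[str_off:str_off + str_size]

    names = []
    for i in range(e_shnum):
        name_off = section_header(i)[0]
        end = strtab.index(b"\x00", name_off)
        names.append(strtab[name_off:end].decode("ascii", "replace"))
    return names


def has_debug_info(path):
    """True if the ELF file contains a DWARF .debug_info section."""
    return ".debug_info" in elf_section_names(path)


if __name__ == "__main__":
    import sys
    for p in sys.argv[1:]:
        print(p, "has debug info:", has_debug_info(p))
```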

Conveniences of Clear Linux

Using a modern Linux distro also came with some real conveniences. For example, Clear Linux is capable of automatically detecting that it is running behind a network proxy and loading the network proxy setup scripts. This removed a manual step that is otherwise necessary to connect to the outside world from Linux running on top of Simics, inside most enterprise networks.

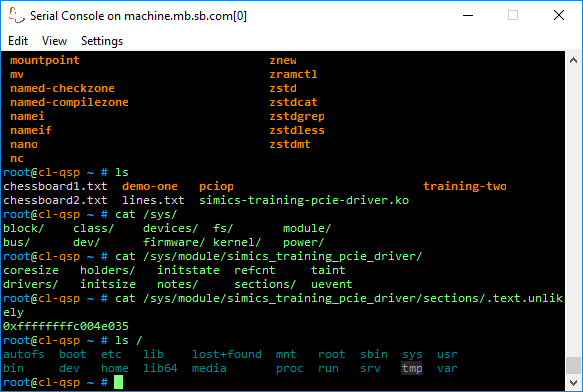

The command-line environment is rich and full-featured. Adding new software is as simple as using the swupd command to add new bundles. The command-line interface is also full of color. The Linux kernel and standard shells assume you have at least 8-bit color in your terminal, even when connecting over a serial port. Figure 4 shows an example:

Figure 4: Example of the colorful nature of the Linux command-line
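When automating target operations by scripting against the serial console, those color codes end up in the captured text and get in the way of simple string matching. A minimal sketch for removing them follows, assuming only ANSI CSI sequences (such as SGR color codes like `ESC[01;34m` and cursor controls) need handling; other escape types, such as OSC terminal-title sequences, are not covered. The function name is hypothetical.

```python
import re

# CSI sequence: ESC '[', parameter bytes 0x30-0x3F, intermediate bytes
# 0x20-0x2F, then one final byte 0x40-0x7E. Covers SGR colors ("...m")
# and cursor/screen controls such as "\x1b[2J" and "\x1b[H".
_CSI_RE = re.compile(r"\x1b\[[0-?]*[ -/]*[@-~]")


def strip_ansi(text):
    """Remove ANSI CSI escape sequences (colors, cursor moves) from text."""
    return _CSI_RE.sub("", text)
```

Applying `strip_ansi` to each captured line before matching keeps automation scripts independent of whatever colors the target's shell happens to use.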

Final Notes

This blog is more about Linux than it is about virtual platforms. We saw the same shift many years ago, when Simics started to mature: user questions changed from being about the simulator to being about how to use the software stacks that ran on top. Whenever this happens, it indicates that the virtual platform is solid.

Moving to Clear Linux was really all about how to configure and use a modern Linux, and very little about Simics. That is how things should be. In the end, the purpose of the virtual platform is to make software development and testing easier, earlier, and better—not just to play with the virtual platform.

Related Content

Ecosystem Partners Shift Left with Intel for Faster Time-to-Market: Intel’s Pre-Silicon Customer Acceleration program scales innovation across all operating environments using the Simics® virtual platform.

Simics® Software Automates “Cyber Grand Challenge” Validation: Simics® virtual platforms automated the vetting of competitors’ submissions to the DARPA Cyber Grand Challenge, where automated cyber-attack and cyber-defense systems were pitted against each other.

Containerizing Wind River Simics® Virtual Platforms (Part 1): The technology of containers and how to use them with Simics.

Using Wind River Simics® with Containers (Part 2): the applications and workflows enabled by containerizing Simics.

Author

Dr. Jakob Engblom is a product management engineer for the Simics virtual platform tool, and an Intel® Software Evangelist. He got his first computer in 1983 and has been programming ever since. Professionally, his main focus has been simulation and programming tools for the past two decades. He looks at how simulation in all forms can be used to improve software and system development, from the smallest IoT nodes to the biggest servers, across the hardware-software stack from firmware up to application programs, and across the product life cycle from architecture and pre-silicon to the maintenance of shipping legacy systems. His professional interests include simulation technology, debugging, multicore and parallel systems, cybersecurity, domain-specific modeling, programming tools, computer architecture, and software testing. Jakob has more than 100 published articles and papers, and is a regular speaker at industry and academic conferences. He holds a PhD in Computer Systems from Uppsala University, Sweden.
