Every once in a while, something interesting just pops out of the Simics* development labs here at Intel. As part of the usual course of development, cool stuff just happens to … well … happen. Here is a recent example of such an event: simulating an Intel® Server configured with 6 terabytes of RAM, using a host with a measly 256 gigabytes—only one twenty-fourth of the target machine.

To put this in perspective, it’s like taking a model train—commonly 1:24 scale to the real thing—and using it to simulate the power and performance of Japan’s mighty Shinkansen railway lines. Sounds unintuitive, right? Maybe even ridiculous.

But it worked just fine! So how did we achieve that?

Simulating a monster (platform, that is)

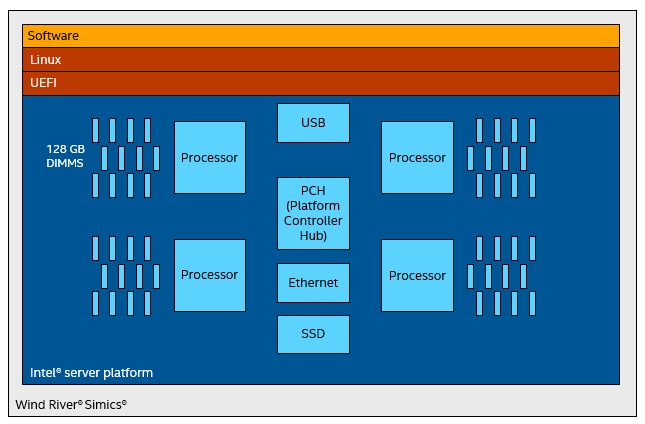

While experimenting with a model of an Intel Server created in Wind River* Simics, Intel® Software engineers set up a configuration featuring 4 processor sockets. For each socket, the model was configured with 12 populated DIMM (dual in-line memory module) slots, each holding a virtual 128 GB DIMM. This gives a total of 6 terabytes of memory (4 x 12 x 128 GB), or 6,442,450,944 kilobytes. (It’s a bizarrely large number of kilobytes that makes me think hard when parsing it. My first computer had 64 KB of ROM+RAM; this is about 100 million times bigger.)
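For anyone who wants to check the numbers, here is a small Python sketch of the arithmetic. It is not part of the Simics setup; the variable names are made up for illustration, and it assumes binary units throughout (1 TB = 1024 GB), as the rest of this post does.

```python
# Memory arithmetic for the simulated server (binary units: 1 TB = 1024 GB).
SOCKETS = 4
DIMMS_PER_SOCKET = 12
DIMM_SIZE_GB = 128
HOST_RAM_GB = 256          # RAM of the host running the simulation
FIRST_COMPUTER_KB = 64     # the author's first computer, ROM + RAM

total_dimms = SOCKETS * DIMMS_PER_SOCKET      # 48 DIMMs
total_gb = total_dimms * DIMM_SIZE_GB         # 6144 GB
total_tb = total_gb // 1024                   # 6 TB
total_kb = total_gb * 1024 * 1024             # 6,442,450,944 KB

target_to_host = total_gb // HOST_RAM_GB           # target is 24x the host
vs_first_computer = total_kb // FIRST_COMPUTER_KB  # roughly 100 million

print(f"{total_dimms} DIMMs, {total_tb} TB, {total_kb:,} KB")
print(f"target/host RAM ratio: {target_to_host}")
print(f"times bigger than a 64 KB machine: {vs_first_computer:,}")
```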

The system setup is shown in the picture above (which honestly ignores a ton of connections between the chips as well as the small pieces that litter a real server board, focusing on the big items).

What is cool about this monster of a target platform is that it can be simulated using a much smaller host than the target. Since Simics uses a lazy memory representation (meaning it does not allocate any real memory up front, just virtual memory), we can actually simulate a memory size much larger than the RAM of the host machine. The lazy representation means that as long as the target does not actually use a piece of memory, we do not have to represent its contents. Simics just notes that there is supposed to be memory at a certain range of addresses, and until a processor core accesses it, it will not use any host memory.
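To make the idea of a lazy representation concrete, here is a toy Python sketch. It is not Simics code and says nothing about how Simics is implemented internally; the `LazyMemory` class, the 4 KB page size, and the choice to allocate a page only on the first write are all assumptions made for illustration.

```python
PAGE_SIZE = 4096  # assumed page granularity for this sketch


class LazyMemory:
    """Toy sparse memory: a page gets host storage only when first written."""

    def __init__(self, size_bytes):
        self.size = size_bytes
        self.pages = {}  # page number -> bytearray, created on demand

    def _check(self, addr):
        if not 0 <= addr < self.size:
            raise IndexError(f"address {addr:#x} is outside simulated memory")

    def read_byte(self, addr):
        self._check(addr)
        page = self.pages.get(addr // PAGE_SIZE)
        # Untouched memory reads as zero and costs no host memory.
        return 0 if page is None else page[addr % PAGE_SIZE]

    def write_byte(self, addr, value):
        self._check(addr)
        page_no = addr // PAGE_SIZE
        page = self.pages.get(page_no)
        if page is None:
            # First write to this page: allocate backing storage now.
            page = self.pages[page_no] = bytearray(PAGE_SIZE)
        page[addr % PAGE_SIZE] = value & 0xFF

    def host_bytes_used(self):
        return len(self.pages) * PAGE_SIZE


mem = LazyMemory(6 * 1024**4)        # 6 TB of simulated RAM
mem.write_byte(5 * 1024**4, 0xAB)    # touch one byte far into the address space
print(mem.read_byte(5 * 1024**4))    # 171
print(mem.read_byte(123))            # 0, never written
print(mem.host_bytes_used())         # 4096, a single page
```

In this sketch the 6 TB address range costs the host one 4 KB page, because only one page was ever written. A real simulator has far more to track, but the principle is the same: host memory follows what the target touches, not what the target claims to have.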

In addition, even when target memory has been accessed by a processor, as long as the active working set of the target system fits in the host RAM, performance will be OK. Simics will keep the memory pages that are actively being used by the target in RAM and swap the other pages out to disk. This means that I can, in principle, instantiate this 6-terabyte server setup on my little laptop that contains a mere 16 GB of RAM (however, I would not be able to boot it very far before starting to swap so much that progress would be snail-like).
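The working-set effect can be illustrated with another toy Python model. Again, this is only a sketch: the `PagedStore` class is hypothetical, and the least-recently-used eviction policy is my assumption for the example, since nothing above says which policy is actually used.

```python
from collections import OrderedDict


class PagedStore:
    """Toy model: at most `ram_pages` pages stay resident, the rest go 'to disk'."""

    def __init__(self, ram_pages):
        self.ram_pages = ram_pages
        self.resident = OrderedDict()  # page number -> contents, oldest first
        self.swapped = {}              # pages evicted from RAM
        self.swap_ins = 0              # count of slow "read back from disk" events

    def touch(self, page_no):
        if page_no in self.resident:
            self.resident.move_to_end(page_no)   # mark as most recently used
            return
        if page_no in self.swapped:
            contents = self.swapped.pop(page_no)
            self.swap_ins += 1                   # slow path
        else:
            contents = bytearray(16)             # first touch (tiny page for the demo)
        self.resident[page_no] = contents
        if len(self.resident) > self.ram_pages:
            victim, data = self.resident.popitem(last=False)  # evict the oldest page
            self.swapped[victim] = data


def swap_ins_for(working_set, ram_pages, rounds=10):
    """Cycle through `working_set` pages `rounds` times and count swap-ins."""
    store = PagedStore(ram_pages)
    for _ in range(rounds):
        for page_no in range(working_set):
            store.touch(page_no)
    return store.swap_ins


print(swap_ins_for(working_set=4, ram_pages=4))  # 0: working set fits in RAM
print(swap_ins_for(working_set=5, ram_pages=4))  # 45: every later access swaps
```

Going just one page over the available RAM turns every access after the first round into a swap-in in this model, which is the snail-like behavior described above.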

Enabling today’s and tomorrow’s big data systems

The purpose of the exercise was to check how the target software behaves when presented with 128 GB DIMMs. I looked around, and it seems that getting hold of such DIMMs is still very difficult. At the time we did the experiment, we could not find a single place that sold 128 GB DIMMs! Even today, a few months later, getting a price for such a beast is difficult!

However, we absolutely want the UEFI (Unified Extensible Firmware Interface) firmware and operating system (OS) to accept and work correctly with 128 GB DIMMs when they do appear in a real system, and Simics makes this very easy to test. There is no need to chase down exotic memory modules costing tens of thousands of dollars. Instead, we just create a simulation model, insert the simulated DIMMs with appropriate metadata into the simulated platform, and turn on the virtual power supply to boot.

Short story: it worked. The UEFI identified the DIMMs and the OS booted happily.

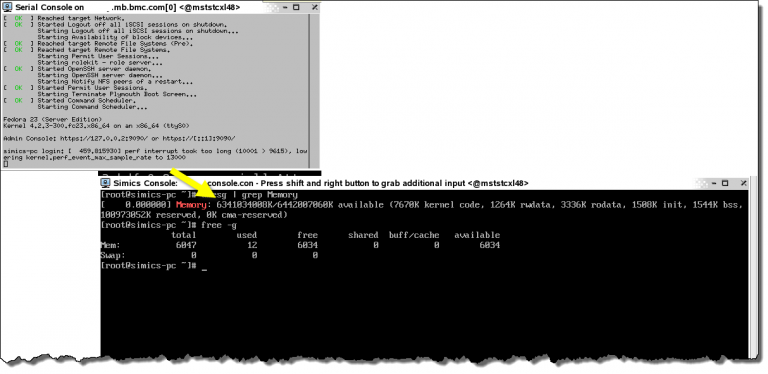

The image above shows the target system's serial and graphics consoles after the completed boot.

Once the system had booted to a Linux prompt, the target system was using a bit more than 100 GB of its RAM. Most of that was the page tables that Linux sets up to manage the physical memory of the target machine. While 100 GB of page tables might sound very large, it is a tiny part of the target memory, less than 2 percent. Terabytes are big. (A terabyte in this context equals 1024 gigabytes or, to be really correct, a tebibyte equals 1024 gibibytes.)

Looking back, this test reminds me of something we did way back in the very early days of Simics (right after the turn of the millennium), where we populated a 64-bit memory space. The purpose was to check that the OS did not consider the amount of memory to be a signed value somewhere. So we created a setup that had 2 to the power of 64 bytes of RAM. At the time, that much memory would have cost more than the entire U.S. defense budget (see footnote for details), not to mention the infeasibility of cramming it all into a machine.

Things have certainly progressed since then. Today, this 48-DIMM system with 6 TB of RAM is far from the largest possible configuration you can buy. If you wanted to test something larger, Simics can still be used to simulate the real-world behavior. You can also make it smaller, with fewer processors, DIMM slots, and memory. Each such configuration takes just a few lines of code to set up, an enormous saving of time, resources, and budget. That is one way that we use Simics at Intel to test software before the hardware exists, and it is what we help our external partners do.

Virtually exploring endless technology-fueled possibilities

This exercise is a testament to the power of simulation. Using powerful software such as Wind River Simics, you can go beyond the limits of what is available in physical form and in the real world today, to test configurations from tomorrow and explore possible scenarios with very little effort.

With a simulator, you have much more flexibility to set up different configurations. You are not limited by the hardware available in the lab, and you can try hardware that does not yet exist or is not yet generally available (like the 128 GB DIMMs). You can create a wider variety of configurations, allowing broader exploration of the behavior of the system software.

A physical development board or hardware prototype represents a particular configuration of a system. But a virtual development board can be configured with different socket counts, core counts inside each processor, and different memory and disk configurations. Add in different PCIe* cards and network topologies outside the board … and the possibilities are really endless!

Follow me @__jengblom for news on my latest blog posts.

Reading tip: For more about how Simics works and the power of the Simics memory simulation system, get the book (you can download Chapter 1 free). Also, see http://windriver.com/products/simics/.

Footnotes

In 2001, a 256 MB DIMM cost between 50 and 150 USD. To fill up a 64-bit memory space, you would need some 68,719,476,736 DIMMs, for a total cost of at least 3,435,973,836,800 USD, or roughly 3 trillion USD (3 * 10^12). The US defense budget in 2001 was just some 335 billion dollars, which is an order of magnitude smaller.
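The footnote arithmetic can be reproduced with a few lines of Python. This is just a check of the figures quoted above, using the low end of the price range; the variable names are made up for illustration.

```python
# Cost of filling a 64-bit address space with 256 MB DIMMs at 2001 prices.
ADDRESS_SPACE_BYTES = 2**64
DIMM_BYTES = 256 * 2**20            # 256 MB per DIMM
PRICE_LOW_USD = 50                  # low end of the quoted price range
DEFENSE_BUDGET_2001_USD = 335e9     # figure quoted in the footnote

dimms_needed = ADDRESS_SPACE_BYTES // DIMM_BYTES    # 2**36 DIMMs
min_cost_usd = dimms_needed * PRICE_LOW_USD
ratio = min_cost_usd / DEFENSE_BUDGET_2001_USD

print(f"{dimms_needed:,} DIMMs, at least {min_cost_usd:,} USD")
print(f"about {ratio:.1f}x the 2001 US defense budget")
```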

*Other names and brands may be claimed as the property of others.

No computer system can be absolutely secure. Intel technologies’ features and benefits depend on system configuration and may require enabled hardware, software or service activation. Learn more at intel.com, or from the OEM or retailer.