Deep learning neural network models are available in multiple floating point precisions. For Intel® OpenVINO™ toolkit, both FP16 (Half) and FP32 (Single) are generally available for pre-trained and public models.

This article explores these floating point representations in more detail and answers questions such as which precisions are compatible with which hardware.

Compatible sizes for CPU, GPU, HDDL-R, or NCS2 target hardware devices

| Target device | Supported precisions |
| --- | --- |
| Central Processing Unit (CPU) | FP32, Int8. The CPU plugin uses Intel Math Kernel Library for Deep Neural Networks (MKL-DNN) and OpenMP. |
| Graphics Processing Unit (GPU) | FP16, FP32. FP16 is preferred. |
| HDDL-R (8 MYRIAD Vision Processing Units) | FP32 and FP16 |
| Vision Processing Unit (VPU, MYRIAD) | FP16 |

Why do some precisions work with a certain type of hardware and not with the others?

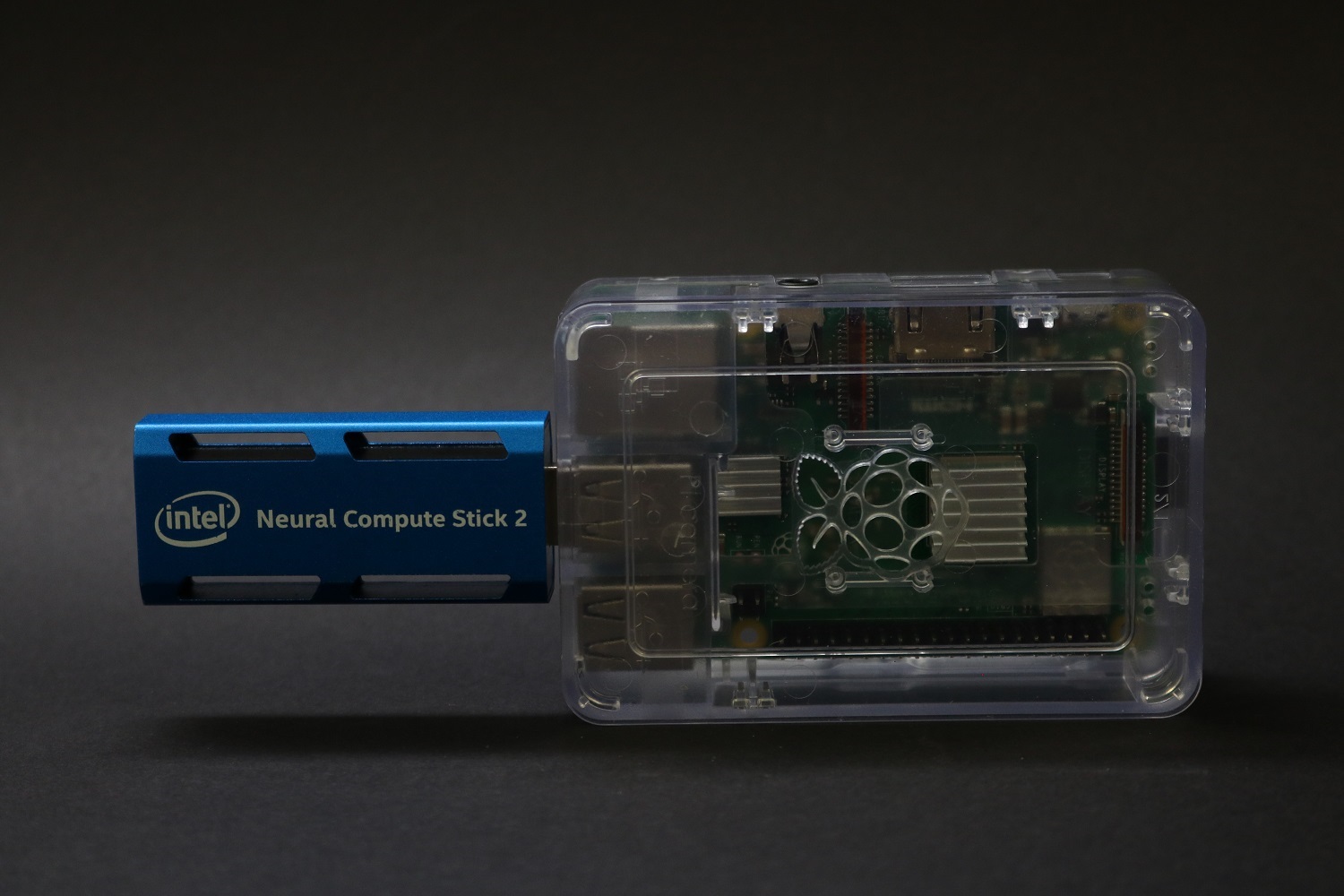

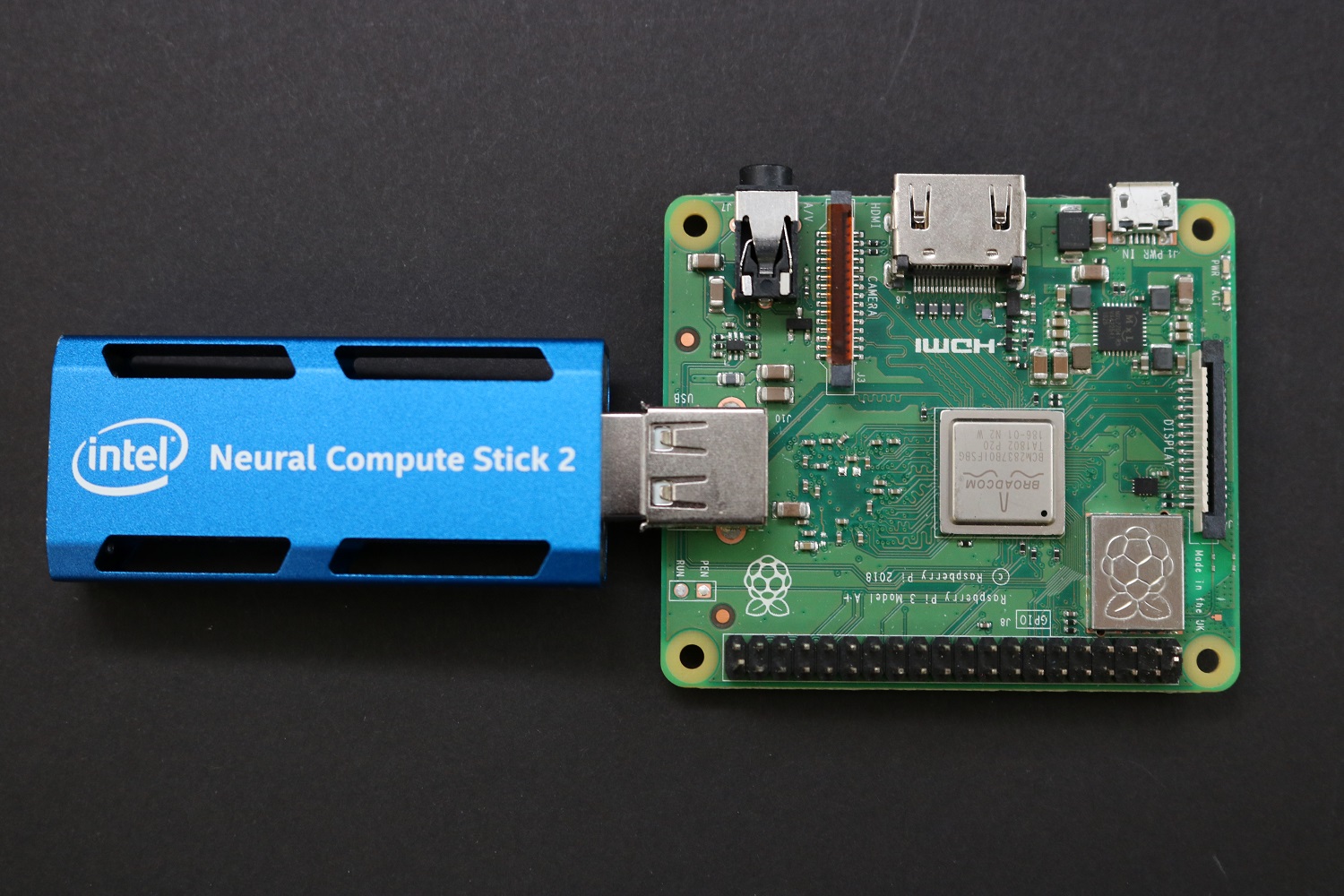

When developing for Intel® Neural Compute Stick 2 (Intel® NCS 2), Intel® Movidius VPUs, or Intel® Arria® 10 FPGA, make sure that you use a model in FP16 precision.

The Open Model Zoo, provided by Intel and the open-source community as a repository for publicly available pre-trained models, has nearly three dozen FP16 models that can be used right away with your applications. If these don’t meet your needs, or you want to download one of the models that are not already in an IR format, then you can use the Model Optimizer to convert your model for the Inference Engine and Intel® NCS 2.
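
As a rough illustration of picking a precision per target device, the sketch below builds a Model Optimizer command line from the compatibility table in this article. It only assembles the argument list and does not run anything. The script location (`mo.py`), the output directory, the model file name, and the device names (`CPU`, `GPU`, `HDDL`, `MYRIAD`) are assumptions for illustration, and the `--data_type` flag should be checked against the Model Optimizer version you have installed.

```python
# Preferred precision per target device, read off the compatibility table
# in this article. The device names are assumed to follow the usual
# Inference Engine naming (CPU, GPU, HDDL, MYRIAD).
PREFERRED_PRECISION = {
    "CPU": "FP32",
    "GPU": "FP16",     # FP32 also works; FP16 is preferred
    "HDDL": "FP16",
    "MYRIAD": "FP16",  # Intel NCS 2
}


def build_mo_command(input_model, device, output_dir="ir", mo_script="mo.py"):
    """Return a Model Optimizer argument list for the given device.

    mo_script and output_dir are placeholders; adjust them to your install.
    """
    precision = PREFERRED_PRECISION[device.upper()]
    return [
        "python3", mo_script,
        "--input_model", input_model,
        "--data_type", precision,
        "--output_dir", output_dir,
    ]


if __name__ == "__main__":
    # Hypothetical model file name, for illustration only.
    print(" ".join(build_mo_command("squeezenet1.1.caffemodel", "MYRIAD")))
```

Returning a list rather than a single string makes it easy to pass the result to `subprocess.run` once the paths have been adjusted.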

How can choosing the right floating point precision improve performance with my application?

Half-precision floating point numbers (FP16) have a smaller range and lower precision than single-precision (FP32) numbers. Where half precision is sufficient, FP16 can result in better performance.
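
To make the smaller range and precision concrete, here is a minimal sketch that rounds values to FP16 using the half-precision format code of Python's standard `struct` module. The helper name `to_fp16` is made up for this example.

```python
import struct


def to_fp16(value):
    """Round a Python float to the nearest FP16 value and return it as a float."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


# Precision: FP16 has a 10-bit fraction, roughly 3 significant decimal digits.
print(to_fp16(0.1))      # 0.0999755859375

# Range: the largest finite FP16 value is 65504.
print(to_fp16(65504.0))  # 65504.0

# Anything well beyond that cannot be represented as a finite FP16 value.
try:
    to_fp16(70000.0)
except (OverflowError, struct.error) as err:
    print("out of FP16 range:", err)
```

A model whose weights and activations stay within this range, and which tolerates the rounding error, is a good candidate for FP16.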

Advantages of FP16

- FP16 improves speed (TFLOPS) and performance

- FP16 reduces memory usage of a neural network

- FP16 data transfers are faster than FP32

| Area | Description |
| --- | --- |
| Memory access | FP16 values are half the size of FP32 values. |
| Cache | Takes up half the cache space, which frees up cache for other data. |
| Bandwidth | Requires half the memory bandwidth, which frees up bandwidth for other operations in the app. |
| Storage and disk I/O | Requires half the storage space and disk I/O. |
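
The "half the size" figures can be checked with simple arithmetic. The sketch below estimates the storage needed for a model's weights in each precision. The parameter count is a hypothetical value chosen for illustration, not a measurement of any specific model.

```python
import struct

# Bytes per stored value, taken from the struct format sizes.
BYTES_PER_VALUE = {
    "FP32": struct.calcsize("<f"),  # 4 bytes
    "FP16": struct.calcsize("<e"),  # 2 bytes
}


def weights_size_mib(num_parameters, precision):
    """Return the size of the raw weights in MiB for the given precision."""
    return num_parameters * BYTES_PER_VALUE[precision] / (1024 ** 2)


# Hypothetical parameter count, for illustration only.
num_parameters = 25_000_000
for precision in ("FP32", "FP16"):
    size = weights_size_mib(num_parameters, precision)
    print(f"{precision}: {size:.1f} MiB")
```

The same factor of two applies to cache footprint and to the bytes moved over the memory bus for each weight that is read.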

Disadvantages

The disadvantage of half-precision floats is that they must be converted to and from 32-bit floats before they are operated on. However, because the newer instructions for half-float conversion are very fast, there are several situations in which storing floating-point values as half-floats can produce better performance than storing them as 32-bit floats.
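
The store-narrow, compute-wide pattern can be sketched as follows. This is an illustration in plain Python, not how the Inference Engine implements it: the weights are stored as 2-byte half floats and widened just before the arithmetic. Python's own `float` is 64-bit and stands in for the wider compute type here, and the weight and input values are made up.

```python
import struct

# Made-up values for illustration.
weights = [0.25, -1.5, 0.1, 3.0]
inputs = [1.0, 2.0, 3.0, 4.0]

# Store the weights in half precision: 2 bytes per value.
stored = struct.pack(f"<{len(weights)}e", *weights)

# Convert back to wider floats right before the arithmetic.
restored = struct.unpack(f"<{len(weights)}e", stored)


def dot(a, b):
    """Dot product computed in Python's native (wider) float type."""
    return sum(x * y for x, y in zip(a, b))


reference = dot(weights, inputs)   # weights never narrowed
from_fp16 = dot(restored, inputs)  # weights stored as FP16

print("bytes stored:", len(stored))
print("absolute error from FP16 storage:", abs(reference - from_fp16))
```

Values that are exactly representable in FP16 (0.25, -1.5, 3.0) survive the round trip unchanged; only 0.1 picks up a small rounding error.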

Using the Model Optimizer creates a more compact model for inference. This is done by merging convolutions.

Calibration tool and Int8

The Inference Engine Calibration Tool is a Python* command-line tool located in the following directory:

~/openvino/deployment_tools/tools

The Calibration Tool calibrates an FP32 model for low-precision 8-bit integer mode while keeping the model's input data in the original precision. It reads the FP32 model and a calibration dataset, and creates a low-precision model. The low-precision model differs from the original model in the following ways:

1. Per-channel statistics are defined.

2. The quantization_level layer attribute is defined. This attribute defines the precision that is used during inference.
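
To give an intuition for what per-channel statistics are used for, the sketch below applies a simple symmetric 8-bit quantization with one scale per channel. This is a generic textbook scheme under stated assumptions, not the Calibration Tool's actual algorithm. The function names and the sample weights are made up for this example.

```python
def quantize_per_channel(channels):
    """Symmetric int8 quantization with one scale per channel.

    channels: list of lists of floats, one inner list per channel.
    Returns (quantized, scales) such that value ~= quantized * scale.
    """
    quantized, scales = [], []
    for values in channels:
        # Per-channel statistic: the largest absolute value in the channel.
        max_abs = max(abs(v) for v in values)
        scale = max_abs / 127.0 if max_abs else 1.0
        row = [max(-127, min(127, round(v / scale))) for v in values]
        quantized.append(row)
        scales.append(scale)
    return quantized, scales


def dequantize(quantized, scales):
    """Map int8 codes back to approximate float values."""
    return [[q * s for q in row] for row, s in zip(quantized, scales)]


# Made-up weights: the two channels have very different magnitudes.
weights = [[0.6, -1.0, 0.2], [10.0, -2.5, 4.0]]
codes, scales = quantize_per_channel(weights)
print(codes)
print(dequantize(codes, scales))
```

Using a separate scale per channel keeps the small-magnitude channel from being crushed into a handful of integer codes by the large-magnitude one.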

8-bit Inference Topologies

The 8-bit inference feature was validated on the following topologies.

Classification models:

- Caffe Inception v1, Inception v4

- Caffe ResNet-50 v1, ResNet-101 v1

- Caffe MobileNet

- Caffe SqueezeNet v1.0, SqueezeNet v1.1

- Caffe VGG16, VGG19

- TensorFlow Inception v3, Inception v4, Inception ResNet v2

- Caffe DenseNet-121, DenseNet-161, DenseNet-169, DenseNet-201

Object detection models:

- Caffe SSD_SqueezeNet

- Caffe SSD_MobileNet

- Caffe SSD_Vgg16_300

- TensorFlow SSD Mobilenet v1, SSD Mobilenet v2

Semantic segmentation models:

- Unet2D

For more information about running inference with Int8, see the Use the Calibration Tool article.
