We know how to protect data in transit; we know how to protect data in storage. Our focus with the Confidential Containers* project (CoCo) is how to protect data in use. Confidential Containers protect data in use at the pod level by combining trusted execution environments (TEEs) with Kata Containers*.

This talk was first presented at KubeCon + CloudNativeCon Europe 2023; catch the full 33-minute presentation and demo or download the slides.

From Kata to Confidential Containers

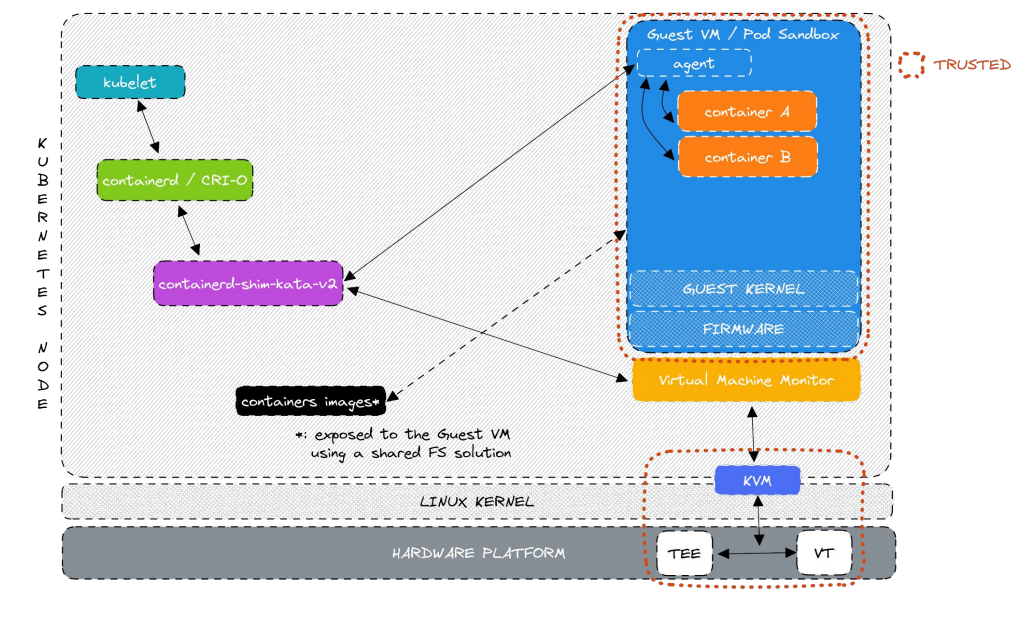

Kata Containers work just like traditional containers, but they add a lightweight virtual machine (VM) to protect workloads from each other and to protect the host from non-trusted workloads. Confidential Containers add one additional layer of protection—they protect the workload from non-trusted infrastructure.

In Kata Containers, container images are pulled and stored on the host, outside the VM, and are shared with the guest agent through a shared file system (such as virtio-fs).

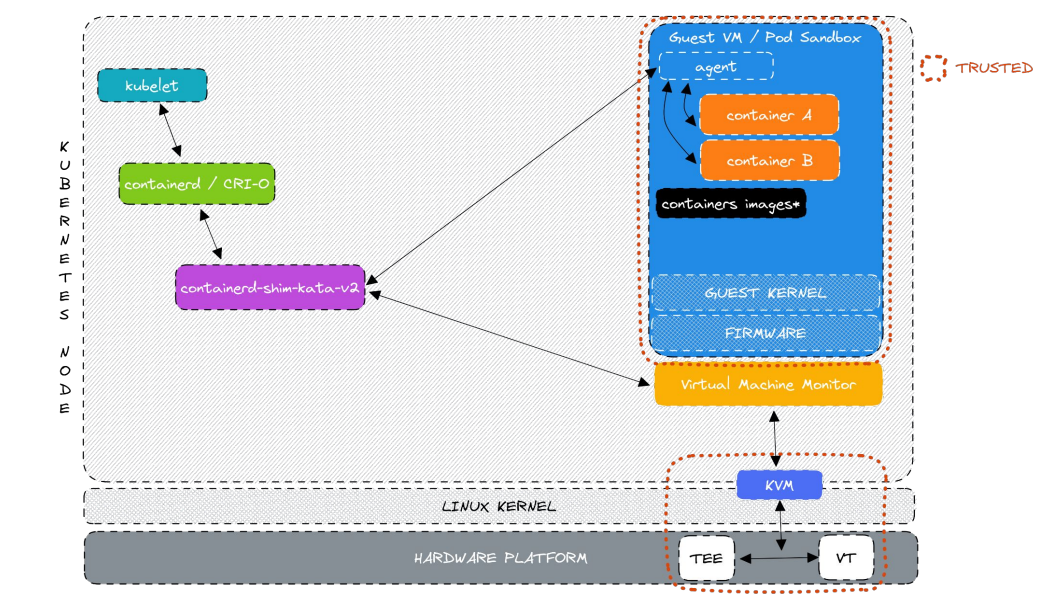

In a Confidential Container, a TEE runs the VM on encrypted memory. Container images are pulled inside the guest VM instead of on the host, which protects them from the infrastructure. An attestation agent is also added to the guest, and it must communicate with a key broker service before a workload can run.

By adding encryption and an attestation agent, Confidential Containers ensure a workload runs in a more secure environment with encrypted memory.
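To make the attestation step more concrete, here is a toy model of the handshake: the key broker releases a secret only if the guest's reported measurement matches a trusted reference value. This is a minimal sketch under loud assumptions. The class names `KeyBroker` and `AttestationAgent` and the `request_key` method are hypothetical, the "evidence" is a plain SHA-384 hash rather than a hardware-signed TEE quote, and there is no nonce or freshness check. It illustrates the idea, not the real CoCo protocol or the key broker service's API.

```python
import hashlib
import hmac

# Hypothetical reference value: the measurement the key broker expects for
# a trusted guest image. Real deployments obtain reference values from a
# trusted source, not a hard-coded string.
TRUSTED_GUEST_IMAGE = b"guest-kernel+initrd+agent"
REFERENCE_MEASUREMENT = hashlib.sha384(TRUSTED_GUEST_IMAGE).hexdigest()


class AttestationAgent:
    """Toy attestation agent that runs inside the guest VM (hypothetical)."""

    def __init__(self, guest_image: bytes) -> None:
        # Stand-in for a hardware-signed quote: just hash the guest image.
        self._measurement = hashlib.sha384(guest_image).hexdigest()

    def evidence(self) -> dict:
        return {"measurement": self._measurement}


class KeyBroker:
    """Toy key broker that releases a secret only to attested guests (hypothetical)."""

    def __init__(self, reference_measurement: str, secret: bytes) -> None:
        self._reference = reference_measurement
        self._secret = secret

    def request_key(self, evidence: dict) -> bytes:
        reported = evidence.get("measurement", "")
        # Constant-time comparison of the reported and expected measurements.
        if not hmac.compare_digest(reported, self._reference):
            raise PermissionError("attestation failed: unexpected measurement")
        return self._secret


def fetch_workload_key(agent: AttestationAgent, broker: KeyBroker) -> bytes:
    """Attest first; the workload only gets its key if attestation succeeds."""
    return broker.request_key(agent.evidence())


if __name__ == "__main__":
    broker = KeyBroker(REFERENCE_MEASUREMENT, secret=b"\x01" * 32)
    key = fetch_workload_key(AttestationAgent(TRUSTED_GUEST_IMAGE), broker)
    print(f"attested guest received a {len(key)}-byte key")
    try:
        fetch_workload_key(AttestationAgent(b"tampered image"), broker)
    except PermissionError as err:
        print(f"tampered guest rejected: {err}")
```

The key point of the design is ordering: the secret is never sent to the guest until the evidence checks out, so a modified guest simply never receives it.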

The Tech Driving Confidential Containers

CoCo is enabled by two key elements: process-based isolation and VM-based isolation. Process-based isolation is made possible with secure memory enclaves, enabled by Intel® Software Guard Extensions (Intel® SGX). VM-based isolation, a feature of Kata Containers, is enabled by Intel® Trust Domain Extensions (Intel® TDX), IBM Secure Execution (SE)*, or AMD SEV-ES*. The goal of CoCo is to unite these technologies under one project so users can choose the kind of isolation they require.

Confidential Containers use TEEs to help isolate the workload from the host infrastructure in addition to virtualization technologies that isolate workloads from each other and isolate the host from the workload.

Extending CoCo to Cloud Service Providers (CSPs)

The cloud API adaptor, also known as the "peer pods" approach, is another way to run Confidential Containers, designed for cloud service provider (CSP) environments. Instead of launching the Kata VM on the worker node, this approach uses the Kata remote hypervisor, and the cloud API adaptor calls the CSP API to create the VM outside the cluster. This VM can be a confidential VM enabled by TEE technology; it is measured and attested to provide a trusted base for running container workloads.
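The division of labor in the peer pods approach can be sketched as a small plug-in interface: the remote hypervisor does not start a local VM but hands VM creation to a provider that wraps a CSP API. This is a hypothetical illustration only. `CloudProvider`, `create_instance`, `RemoteHypervisor`, `FakeCloud`, and the image name are invented for this sketch and do not reflect the cloud API adaptor's actual interfaces; `FakeCloud` is an in-memory stand-in for a real CSP API.

```python
from dataclasses import dataclass, field
from typing import Dict, Protocol


@dataclass
class Instance:
    """A VM created by the cloud provider to host one pod (hypothetical)."""
    instance_id: str
    ip: str
    confidential: bool


class CloudProvider(Protocol):
    """Hypothetical per-CSP plug-in interface."""

    def create_instance(self, pod_name: str, image: str, confidential: bool) -> Instance:
        ...


@dataclass
class FakeCloud:
    """In-memory stand-in for a CSP API, used only to show the control flow."""
    instances: Dict[str, Instance] = field(default_factory=dict)

    def create_instance(self, pod_name: str, image: str, confidential: bool) -> Instance:
        n = len(self.instances)
        inst = Instance(f"i-{n + 1}", f"10.0.0.{n + 10}", confidential)
        self.instances[inst.instance_id] = inst
        return inst


class RemoteHypervisor:
    """Delegates sandbox VM creation to the cloud instead of a local hypervisor."""

    def __init__(self, provider: CloudProvider, podvm_image: str) -> None:
        self.provider = provider
        self.podvm_image = podvm_image

    def start_sandbox(self, pod_name: str) -> Instance:
        # Ask the CSP for a confidential VM; the pod's containers then run there.
        return self.provider.create_instance(pod_name, self.podvm_image, confidential=True)


if __name__ == "__main__":
    cloud = FakeCloud()
    hv = RemoteHypervisor(cloud, podvm_image="example-podvm-image")
    inst = hv.start_sandbox("demo-pod")
    print(inst)
```

Keeping the CSP-specific code behind one interface is what lets a single approach support multiple clouds: only the provider plug-in changes.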

Using the cloud API adaptor, users can launch a confidential VM outside the Kubernetes cluster.

Confidential Computing for Regulated Industries

Why Confidential Containers? Industries like financial services, government, and healthcare subject their data and workloads to intensive regulation and scrutiny. This is where confidential computing can help. Imagine a hospital running an application in the cloud that processes sensitive data like test results and patient information. How can the hospital be sure the application is running in a secure environment? If the application runs in a TEE, attestation confirms the identity of both the workload and the stack it runs on.

Jeremi Piotrowski’s talk on The Next Episode in Workload Isolation shows exactly how this works.

Confidential Computing for Machine Learning (ML)

Encrypted data in ML workloads provides another important use case. Imagine you're running ML workloads using Apache Spark*. With the right configuration, one simple change to the runtime class name switches the pods to a confidential container runtime. User data stays encrypted with a key provided by the user. When these pods come up, they start attestation and fetch the key only if attestation succeeds, that is, only if the software has not been modified and is running in the expected environment. They then decrypt the data before starting the actual work.
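The "one simple change" corresponds to the standard Kubernetes `runtimeClassName` field in the Pod spec. The sketch below copies an ordinary pod manifest and sets that field, leaving everything else untouched. The runtime class name `kata-qemu-tdx`, the pod name, and the image are assumptions for illustration; the runtime class names actually available depend on your CoCo operator release and TEE hardware. With Spark on Kubernetes you would typically apply the same change through the pod template Spark uses for its executors.

```python
import copy

# Assumed runtime class name; check which classes your CoCo install created.
CONFIDENTIAL_RUNTIME_CLASS = "kata-qemu-tdx"


def make_confidential(pod: dict, runtime_class: str = CONFIDENTIAL_RUNTIME_CLASS) -> dict:
    """Return a copy of a Pod manifest that runs under a confidential runtime class."""
    confidential = copy.deepcopy(pod)  # leave the original manifest unchanged
    confidential["spec"]["runtimeClassName"] = runtime_class
    return confidential


# Hypothetical Spark executor pod; names and image are placeholders.
executor_pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "spark-executor-1"},
    "spec": {
        "containers": [{"name": "executor", "image": "example.com/spark:latest"}],
    },
}

if __name__ == "__main__":
    print(make_confidential(executor_pod)["spec"])
```

Because only the runtime class changes, the workload definition itself stays the same; the difference is which runtime, and therefore which isolation boundary, Kubernetes uses to start it.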

Confidential Containers enable machine learning workloads in Apache Spark to work on encrypted data.

Demo: Deploy Your Own Confidential Containers

This talk includes a quick demo of how to deploy the CoCo operator, hosted on OperatorHub.io*, and then run a simple workload. You can follow along by watching the KubeCon presentation, as well as the line-by-line code. You’ll need a running Kubernetes* cluster, and your nodes have to be ready for confidential computing, including hardware that’s configured with the right BIOS settings.

Get started and Get involved

As of this writing, the most recent Confidential Containers release, 0.5.0, is the largest to date. It features a generic key broker service (KBS), peer pods support for Kata with multiple CSPs, a URL for uniform attestation resources, and support for Intel® SGX enclaves. Get started here.

You can also get involved by joining the Slack* channel #confidential-containers in the CNCF* workspace. Continue your journey by watching the next talk in this sequence or past years’ Confidential Containers Explained (2022) and Trust No One: Bringing Confidential Computing to Containers (2021).

About the presenters

Fabiano Fidencio, a Cloud Orchestration Software Engineer at Intel, is passionate about making the projects he works on easier to use. For the past two years, he has served as an Architecture Committee member of the Kata Containers project, and he has been involved with Confidential Containers since its early stages.

Jens Freimann, a Software Engineering Manager at Red Hat, started his career working on firmware for I/O chipsets in IBM’s mainframes but soon transferred to work on a full-system simulator based on KVM. This led him to work on core KVM in the IBM Linux Technology Center before moving to Red Hat to continue working in virtualization, focusing on virtio(-net) features in QEMU, kernel, and DPDK.