Intel Neural Compressor is an open-source Python* library for model compression that reduces model size and speeds up deep learning (DL) inference on CPUs or GPUs (Figure 1). It provides unified interfaces across multiple DL frameworks for popular network compression technologies, such as quantization, pruning, and knowledge distillation. The tool supports automatic accuracy-driven tuning strategies that help the user quickly find the best quantized model. It also implements several weight pruning algorithms that generate pruned models for a predefined sparsity goal, and it supports knowledge distillation from a teacher model to a student model. Intel Neural Compressor provides APIs for a range of frameworks, including TensorFlow*, PyTorch*, and MXNet*, in addition to ONNX* Runtime for greater interoperability across frameworks. We will focus on the benefits of using the tool with a PyTorch model.

Figure 1. Intel Neural Compressor

Intel Neural Compressor extends PyTorch quantization with advanced recipes for quantization, automatic mixed precision, and accuracy-aware tuning. It takes a PyTorch model as input and yields an optimized model. The quantization capability is built on the standard PyTorch quantization API, with modifications that support quantization granularity ranging from the model level down to the operator level. This approach gives better accuracy without additional hand-tuning.
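To make the idea of quantization granularity concrete, the following is a minimal sketch in plain Python (deliberately not PyTorch or Intel Neural Compressor code) that compares one shared scale for a whole weight tensor against one scale per output channel. The weight values and helper names are hypothetical, and symmetric min-max scaling to the range [-127, 127] is an assumption chosen for simplicity.

```python
# Sketch: symmetric INT8 quantization of a weight matrix,
# with one scale per tensor versus one scale per channel (row).
# Illustrative only; helper names and weights are hypothetical.

def scale_for(values):
    """Min-max style scale: the largest magnitude maps to 127."""
    max_abs = max(abs(v) for v in values)
    return max_abs / 127 if max_abs > 0 else 1.0

def quantize_symmetric(values, scale):
    """Map floats to signed 8-bit integers clamped to [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]

def dequantize(q_values, scale):
    """Map quantized integers back to approximate floats."""
    return [q * scale for q in q_values]

def roundtrip_error(rows, scales):
    """Mean absolute error after quantize -> dequantize, one scale per row."""
    total, count = 0.0, 0
    for row, scale in zip(rows, scales):
        restored = dequantize(quantize_symmetric(row, scale), scale)
        total += sum(abs(a - b) for a, b in zip(row, restored))
        count += len(row)
    return total / count

# Two output channels with very different value ranges.
weights = [
    [0.011, -0.024, 0.007, 0.019],  # small-range channel
    [3.2, -5.1, 4.4, -0.9],         # large-range channel
]

# Per tensor: a single scale shared by every channel.
tensor_scale = scale_for([v for row in weights for v in row])
per_tensor_err = roundtrip_error(weights, [tensor_scale] * len(weights))

# Per channel: each row gets its own scale.
channel_scales = [scale_for(row) for row in weights]
per_channel_err = roundtrip_error(weights, channel_scales)

print(f"per-tensor error:  {per_tensor_err:.6f}")
print(f"per-channel error: {per_channel_err:.6f}")
```

With a shared scale, the small-range channel is rounded almost entirely to zero, which is why finer granularity tends to preserve accuracy better when channel ranges differ widely.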

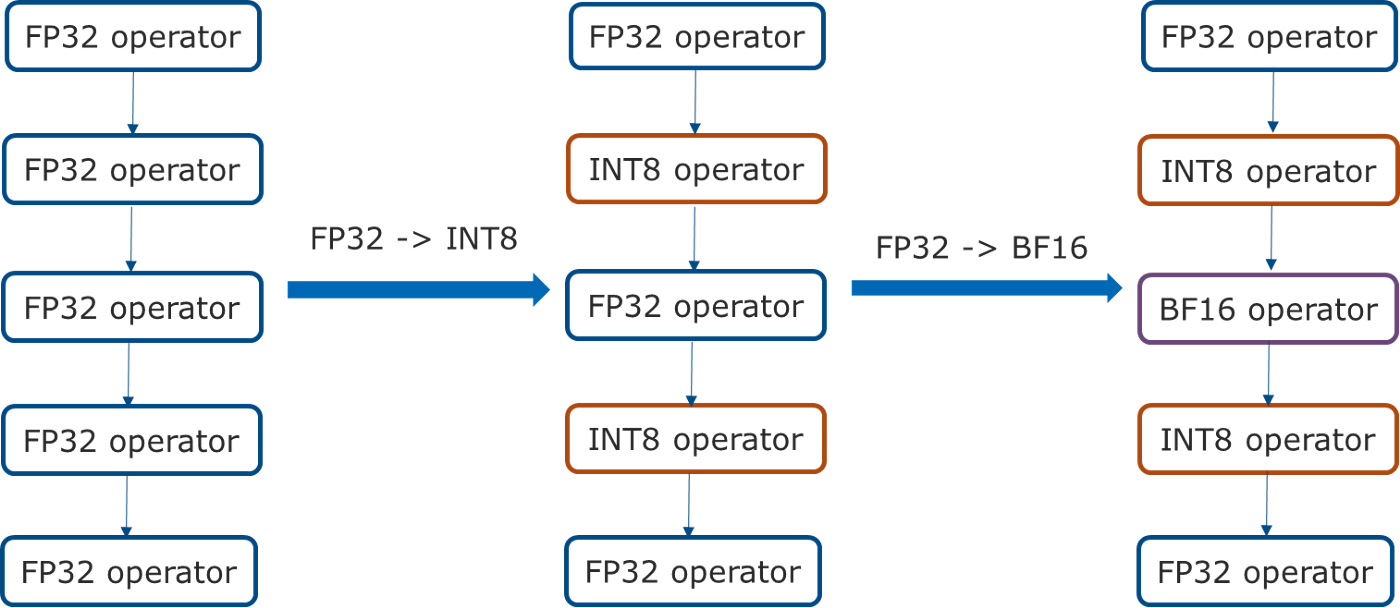

Intel Neural Compressor further extends the PyTorch automatic mixed precision feature on 3rd Gen Intel® Xeon® Scalable Processors with support for INT8 in addition to BF16 and FP32. It first converts all quantizable operators from FP32 to INT8, and then converts the remaining FP32 operators to BF16 if BF16 kernels are supported in PyTorch and accelerated by the underlying hardware (Figure 2).

Figure 2. Automatic mixed precision
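The two-step conversion order described above can be sketched as a simple fallback rule. This is an illustration in plain Python, not the tool's implementation: the operator names, the capability sets, and the `assign_precisions` helper are all hypothetical.

```python
# Sketch of the INT8 -> BF16 -> FP32 fallback order.
# Operator names and capability sets are hypothetical.

def assign_precisions(operators, int8_capable, bf16_capable, hardware_has_bf16):
    """Return {operator: dtype} using INT8 first, then BF16, else FP32."""
    plan = {}
    for op in operators:
        if op in int8_capable:
            # Step 1: every quantizable operator goes to INT8.
            plan[op] = "int8"
        elif hardware_has_bf16 and op in bf16_capable:
            # Step 2: remaining operators go to BF16 when a kernel exists
            # and the hardware accelerates it.
            plan[op] = "bf16"
        else:
            # Otherwise the operator stays in FP32.
            plan[op] = "fp32"
    return plan

ops = ["conv1", "linear1", "layer_norm", "softmax"]
plan = assign_precisions(
    ops,
    int8_capable={"conv1", "linear1"},
    bf16_capable={"layer_norm"},
    hardware_has_bf16=True,
)
for op, dtype in plan.items():
    print(f"{op:12s} -> {dtype}")
```

On hardware without BF16 acceleration, the same rule leaves every non-quantizable operator in FP32, so the result is an INT8/FP32 model.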

Intel Neural Compressor also supports an automatic accuracy-aware tuning mechanism for better quantization productivity. It first queries the framework for the quantization capabilities, such as quantization granularity (per_tensor or per_channel), quantization scheme (symmetric or asymmetric), quantization data type (u8 or s8), and calibration approach (min-max or KL divergence) (Figure 3). Then it queries the supported data types for each operator. With these queried capabilities, the tool generates a whole tuning space of different sets of quantization configurations and starts the tuning iterations. For each set of quantization configurations, it performs calibration, quantization, and evaluation. Once the evaluation meets the accuracy goal, the tool terminates the tuning process and produces a quantized model.

Figure 3. Automatic accuracy-aware tuning
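The tuning flow can be sketched as a loop over a configuration space that stops at the first configuration meeting the accuracy goal. This is a simplified illustration under stated assumptions: the option names in `TUNING_SPACE`, the exhaustive search order, and the stand-in evaluation function are hypothetical, and the tool's real tuning strategies are more sophisticated than a plain grid walk.

```python
import itertools

# Hypothetical capability lists, mirroring the options named in the text.
TUNING_SPACE = {
    "granularity": ["per_channel", "per_tensor"],
    "scheme": ["sym", "asym"],
    "dtype": ["s8", "u8"],
    "calibration": ["minmax", "kl"],
}

def iter_configs(space):
    """Yield every combination of options as a dict."""
    keys = list(space)
    for combo in itertools.product(*(space[k] for k in keys)):
        yield dict(zip(keys, combo))

def tune(baseline_accuracy, quantize_and_evaluate,
         relative_tolerance=0.01, max_trials=100):
    """Return (config, accuracy, trials) for the first config meeting the goal.

    Returns (None, None, trials) if no tried config is accurate enough.
    """
    goal = baseline_accuracy * (1 - relative_tolerance)
    trials = 0
    for config in itertools.islice(iter_configs(TUNING_SPACE), max_trials):
        trials += 1
        # In the real flow this step is calibration + quantization + evaluation.
        accuracy = quantize_and_evaluate(config)
        if accuracy >= goal:
            return config, accuracy, trials
    return None, None, trials

def fake_quantize_and_evaluate(config):
    """Stand-in evaluator with made-up accuracy penalties per option."""
    accuracy = 0.900
    if config["granularity"] == "per_tensor":
        accuracy -= 0.020
    if config["calibration"] == "minmax":
        accuracy -= 0.010
    return accuracy

best, acc, n = tune(0.905, fake_quantize_and_evaluate)
print(f"accepted after {n} trial(s): {best} (accuracy {acc:.3f})")
```

The `relative_tolerance` parameter plays the same role as a relative accuracy criterion: a value of 0.01 accepts a quantized model whose accuracy is within 1% of the FP32 baseline.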

Pruning support focuses mainly on unstructured weight pruning, structured weight pruning, and filter pruning. Unstructured pruning uses a magnitude algorithm to prune weights during training when their magnitude is below a predefined threshold. Structured pruning implements experimental tile-wise sparsity kernels to boost the performance of the sparse model. Filter pruning implements a gradient-sensitivity algorithm that prunes the head, intermediate layers, and hidden states in the model according to the importance score calculated by the gradient.
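As an illustration of the magnitude criterion used in unstructured pruning, here is a minimal one-shot sketch in plain Python. It assumes the threshold is derived from a target sparsity, and it ignores the training loop in which the real algorithm runs; the helper names and weights are hypothetical.

```python
# Sketch: one-shot magnitude pruning of a small weight matrix.
# Real pruning is applied during training; this only shows the criterion.

def threshold_for_sparsity(weights, target_sparsity):
    """Pick the magnitude below which weights are pruned."""
    flat = sorted(abs(w) for row in weights for w in row)
    num_to_prune = int(len(flat) * target_sparsity)
    if num_to_prune >= len(flat):
        return float("inf")
    # Smallest magnitude that is kept; everything strictly below is pruned.
    return flat[num_to_prune]

def magnitude_prune(weights, threshold):
    """Zero every weight whose magnitude is below the threshold."""
    mask = [[1 if abs(w) >= threshold else 0 for w in row] for row in weights]
    pruned = [
        [w if keep else 0.0 for w, keep in zip(row, mask_row)]
        for row, mask_row in zip(weights, mask)
    ]
    return pruned, mask

def sparsity(weights):
    """Fraction of weights that are exactly zero."""
    flat = [w for row in weights for w in row]
    return sum(1 for w in flat if w == 0) / len(flat)

weights = [
    [0.8, -0.05, 0.3, 0.01],
    [-0.6, 0.02, -0.9, 0.4],
]
threshold = threshold_for_sparsity(weights, target_sparsity=0.5)
pruned, mask = magnitude_prune(weights, threshold)
print("threshold:", threshold)
print("pruned:", pruned)
print("sparsity:", sparsity(pruned))
```

Because the comparison is strict, tied magnitudes at the threshold are all kept, so the achieved sparsity can fall slightly below the target when many weights share the same magnitude.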

Intel Neural Compressor also implements a knowledge distillation algorithm to transfer knowledge from a large “teacher” model to a smaller “student” model without loss of validity (Figure 4). The same input is fed to both models, and the student model learns by comparing its results to both the teacher and the ground-truth label.

Figure 4. Knowledge distillation algorithm
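The idea that the student learns from both the teacher and the ground-truth label can be sketched as a weighted sum of two loss terms. The formulation below (cross-entropy on the label plus a temperature-scaled KL divergence to the teacher) is a common one and is an assumption here, not necessarily the exact loss the tool uses; `alpha`, `temperature`, and the logits are hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(student_logits, label):
    """Hard-label loss: negative log-probability of the true class."""
    return -math.log(softmax(student_logits)[label])

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    """Blend the ground-truth loss with the teacher-matching loss."""
    hard = cross_entropy(student_logits, label)
    soft = kl_divergence(
        softmax(teacher_logits, temperature),
        softmax(student_logits, temperature),
    )
    # The temperature**2 factor keeps the soft term's scale comparable.
    return alpha * hard + (1 - alpha) * (temperature ** 2) * soft

teacher = [0.2, 2.5]          # teacher is confident in class 1
close_student = [0.1, 2.0]    # agrees with teacher and label
far_student = [2.0, 0.1]      # disagrees with both
label = 1

print("close student loss:", distillation_loss(close_student, teacher, label))
print("far student loss:  ", distillation_loss(far_student, teacher, label))
```

Setting `alpha` closer to 1 weights the ground-truth label more heavily, while values closer to 0 make the student follow the teacher's output distribution.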

The following example shows how to quantize a natural language processing model with Intel Neural Compressor:

    # config.yaml
    model:
      name: distilbert
      framework: pytorch_fx
    tuning:
      accuracy_criterion:
        relative: 0.01

    # main.py
    import torch
    import numpy as np
    from transformers import (
        AutoModelForSequenceClassification,
        AutoTokenizer,
    )

    model_name = "distilbert-base-uncased-finetuned-sst-2-english"
    model = AutoModelForSequenceClassification.from_pretrained(
        model_name,
    )

    # Calibration dataloader: yields a single (input, label) sample
    class CalibDataLoader(object):
        def __init__(self):
            self.tokenizer = AutoTokenizer.from_pretrained(model_name)
            self.sequence = "Shanghai is a beautiful city!"
            self.encoded_input = self.tokenizer(
                self.sequence,
                return_tensors='pt'
            )
            self.label = 1  # negative sentence: 0; positive sentence: 1
            self.batch_size = 1

        def __iter__(self):
            yield self.encoded_input, self.label

    # Create the dataloader once so eval_func can reuse its input and label
    calib_dataloader = CalibDataLoader()

    # Evaluation function: returns 1 if the prediction matches the label, else 0
    def eval_func(model):
        output = model(**calib_dataloader.encoded_input)
        print("Output: ", output.logits.detach().numpy())
        emotion_type = np.argmax(output.logits.detach().numpy())
        return 1 if emotion_type == calib_dataloader.label else 0

    # Enable quantization
    from neural_compressor.experimental import Quantization

    quantizer = Quantization('./config.yaml')
    quantizer.model = model
    quantizer.calib_dataloader = calib_dataloader
    quantizer.eval_func = eval_func
    q_model = quantizer.fit()

Note that the generated mixed-precision model may vary, depending on the capabilities of the low precision kernels and the underlying hardware (e.g., INT8/BF16/FP32 mixed-precision model on 3rd Gen Intel Xeon Scalable Processors).

Performance Results

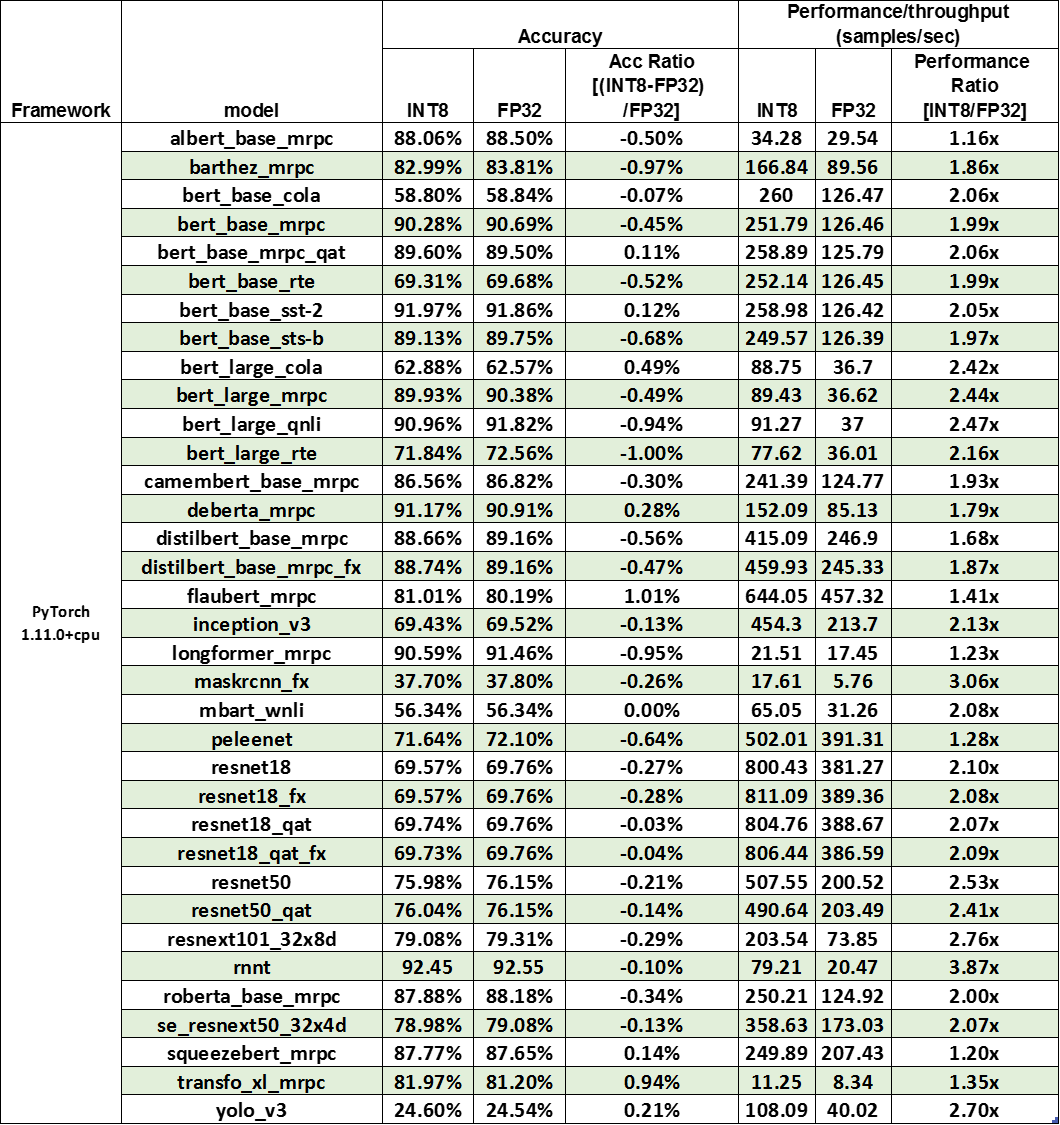

Intel Neural Compressor has validated 400+ examples with a geometric mean performance speedup of 2.2x on an Intel Xeon Platinum 8380 Processor with minimal accuracy loss (see Table 1 for a sample). More details for validated models are available here.

Table 1. Performance results for Intel® Neural Compressor

Testing Date: Performance results are based on testing by Intel as of June 10, 2022 and may not reflect all publicly available security updates.

Configuration Details and Workload Setup: 2S Intel® Xeon® Platinum 8380 CPU @ 2.30GHz, 40-core/80-thread, Turbo Boost on, Hyper-Threading on; memory: 256GB (16x16GB DDR4 3200MT/s); storage: Intel® SSD *1; NIC: 2x Ethernet Controller 10G X550T; BIOS: SE5C6200.86B.0022.D64.2105220049 (ucode: 0xd0002b1); OS: Ubuntu 20.04.1 LTS; Kernel: 5.4.0-42-generic; Batch Size: 1; Core per Instance: 4.

The vision of Intel Neural Compressor is to improve productivity and address accuracy loss when applying popular neural network compression approaches, through an auto-tuning mechanism and an easy-to-use API. We are continuously improving this tool by adding more compression recipes and combining those techniques to produce optimal models. We invite users to try Intel Neural Compressor and provide feedback and contributions via the GitHub repo.

Acknowledgment

We would like to thank Xin He, Chang Wang, Wenxin Zhang, Penghui Cheng, and Suyue Chen for their contributions to Intel® Neural Compressor. We also offer special thanks to Eric Lin, Jianhui Li, and Jiong Gong for their technical discussions and insights, and to our collaborators from Meta for their professional support and guidance. Finally, we would like to thank Wei Li, Andres Rodriguez, and Honesty Young for their great support.