Today, we’re pleased to announce the Deep Learning Reference Stack (DLRS) v7.0 release, which features Intel® Advanced Vector Extensions 512 (Intel® AVX-512) with Intel® Deep Learning Boost (Intel® DL Boost) and the bfloat16 (BF16) extension. Intel DL Boost accelerates AI training and inference performance. In response to customer feedback, release v7.0 offers an enhanced user experience with support for 3rd Gen Intel® Xeon® Scalable processors, 10th Gen Intel® Core™ processors, Intel® Iris® Plus graphics, and the Intel® Gaussian & Neural Accelerator (Intel® GNA).

DLRS v7.0 further improves the ability to quickly prototype and deploy DL workloads, reducing complexity while allowing customization. This release features:

- TensorFlow* 1.15.3 and TensorFlow* 2.4.0 (2b8c0b1), an end-to-end open source platform for machine learning (ML).

- PyTorch* 1.7 (458ce5d), an open source machine learning framework that accelerates the path from research prototyping to production deployment.

- PyTorch Lightning, a lightweight wrapper for PyTorch designed to help researchers handle the boilerplate of state-of-the-art training.

- Transformers, a library of state-of-the-art Natural Language Processing (NLP) models for TensorFlow 2.4 and PyTorch.

- Flair, a library for state-of-the-art Natural Language Processing using PyTorch.

- OpenVINO™ model server version 2020.4, delivering improved neural network performance on Intel processors and helping unlock cost-effective, real-time vision applications [1].

- TensorFlow Serving 2.3.0, a deep learning model serving solution for TensorFlow models.

- Horovod 0.20.0, a framework for optimized distributed deep learning training for TensorFlow and PyTorch.

- oneAPI Deep Neural Network Library (oneDNN) 1.5.1, providing accelerated backends for TensorFlow, PyTorch and OpenVINO.

- Intel DL Boost with Vector Neural Network Instructions (VNNI) and Intel AVX-512_BF16, designed to accelerate deep neural network-based algorithms.

- Deep learning compilers: TVM* 0.6, an end-to-end compiler stack.

- Seldon Core (1.2) and KFServing (0.4) integration examples with DLRS for deep learning model serving on a Kubernetes cluster.
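Several of the serving components above speak the same REST protocol. TensorFlow Serving exposes a predict endpoint of the form `/v1/models/<model_name>:predict` that accepts a JSON body with an `instances` list, and KFServing's v1 data plane follows the same convention. The sketch below only builds such a request with the Python standard library and does not send it. The host, port 8501 (the REST port used in TensorFlow Serving's Docker examples) and the model name `resnet50` are assumptions for illustration, not DLRS defaults.

```python
import json
import urllib.request


def build_predict_request(host, port, model_name, instances, version=None):
    """Build (but do not send) a TensorFlow Serving-style REST predict request.

    URL pattern: /v1/models/<name>[/versions/<n>]:predict
    Body:        {"instances": [...]}
    Host, port and model name are supplied by the caller (assumptions here).
    """
    path = f"/v1/models/{model_name}"
    if version is not None:
        path += f"/versions/{version}"
    url = f"http://{host}:{port}{path}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical model name and a tiny dummy input; a real ResNet50 model
    # expects a full image tensor per instance.
    req = build_predict_request("localhost", 8501, "resnet50", [[0.0, 1.0, 2.0]])
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # Against a running server, the response JSON carries a "predictions" list:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.loads(resp.read())["predictions"])
```

Keeping request construction separate from sending makes the same payload easy to reuse in tests or against either serving backend.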

Benefits of the Deep Learning Reference Stack

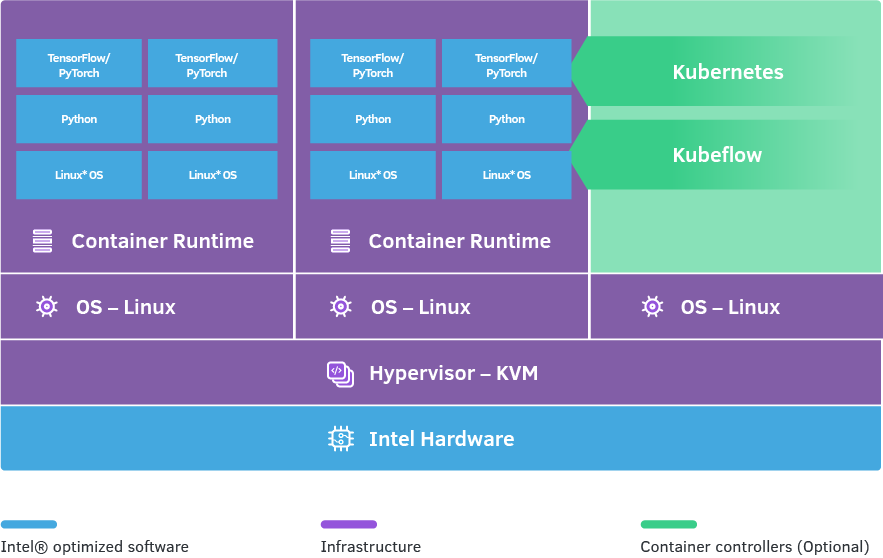

One highlight of DLRS v7 is its ability to use the new Intel AVX-512 extension for bfloat16 (AVX-512_BF16), which lets users accelerate deep learning training operations on 3rd Gen Intel® Xeon® Scalable processors. We have enabled this functionality for both the TensorFlow and PyTorch frameworks (Figure 1).

Figure 1.

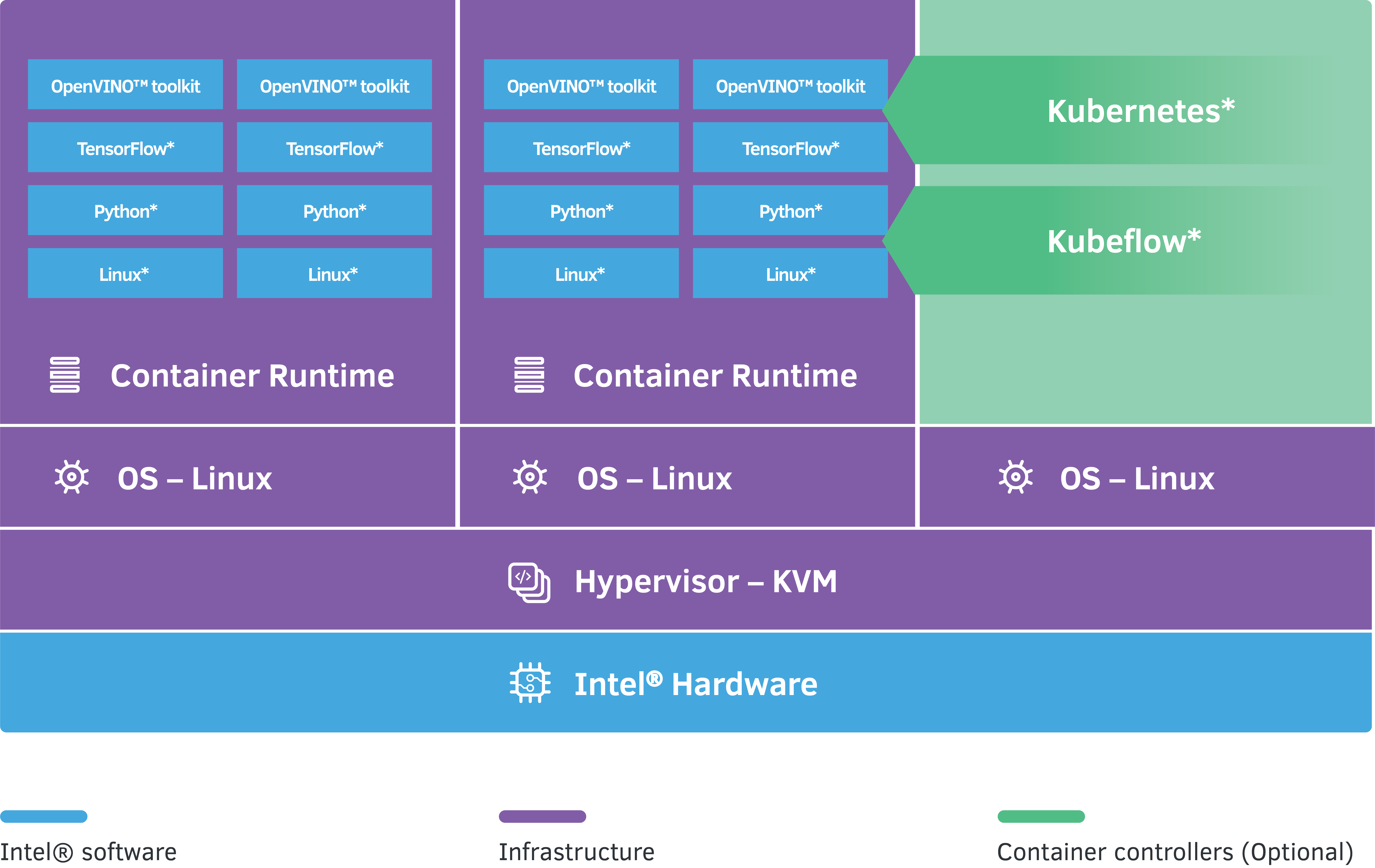
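bfloat16 keeps float32's sign bit and full 8-bit exponent but only the top 7 of its 23 mantissa bits, so it covers the same dynamic range as FP32 at lower precision. The standard-library sketch below converts a value to bfloat16 bits with round-to-nearest-even and back, to make that trade-off concrete. It illustrates the number format only and is not how TensorFlow, PyTorch or oneDNN implement BF16: optimized builds rely on oneDNN kernels that can use the AVX-512_BF16 instructions in hardware. NaN handling here is a deliberate simplification.

```python
import math
import struct


def fp32_bits(x):
    """Return the IEEE 754 float32 bit pattern of x as an unsigned 32-bit int."""
    # struct raises OverflowError if x is finite but outside the float32 range.
    return struct.unpack("<I", struct.pack("<f", x))[0]


def to_bf16_bits(x):
    """Round x to bfloat16 with round-to-nearest-even; return the 16-bit pattern."""
    if math.isnan(x):
        return 0x7FC0  # simplification: every NaN becomes one quiet NaN
    bits = fp32_bits(x)
    # Adding 0x7FFF plus the lowest kept bit rounds the dropped 16 bits to
    # nearest, breaking ties toward an even bfloat16 mantissa.
    lsb = (bits >> 16) & 1
    return ((bits + 0x7FFF + lsb) >> 16) & 0xFFFF


def from_bf16_bits(b):
    """Widen a bfloat16 bit pattern to float32 (zero-fill the low 16 bits)."""
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]


if __name__ == "__main__":
    for value in (1.0, math.pi, 1e38):
        bf16 = to_bf16_bits(value)
        print(f"{value!r:>24} -> 0x{bf16:04X} -> {from_bf16_bits(bf16)!r}")
```

For example, `math.pi` comes back as 3.140625, while 1e38, far beyond FP16's maximum of 65504, still survives with a relative error below 2^-8. That wide range is why BF16 training generally does not need the loss scaling that FP16 often requires.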

The 3rd Gen Intel® Xeon® Scalable processors also support accelerated INT8 convolutions with AVX-512 VNNI [2] instructions for higher inference performance. In DLRS v7.0, we added a dedicated serving container to our suite of optimized stacks that includes the hardware-accelerated OpenVINO™ model server 2020.4 (Figure 2). This stack can use Intel DL Boost features on 10th Gen Intel® Core™ processors (Ice Lake) and is also optimized for Intel® Iris® Plus graphics. The stack is also Intel GNA ready on Intel client processors. Intel GNA provides power-efficient acceleration for AI applications such as neural noise cancellation while freeing up CPU resources for overall system responsiveness.

Figure 2.
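AVX-512 VNNI fuses the steps of an INT8 dot product. Its VPDPBUSD instruction multiplies groups of four unsigned 8-bit activations by four signed 8-bit weights and adds each group's sum into a 32-bit accumulator. The scalar Python sketch below mimics that arithmetic for one 32-bit lane, paired with a simple per-tensor quantization step. The quantization scheme, the scales and the helper names are illustrative assumptions, not the calibration that OpenVINO or the Intel-optimized frameworks actually perform.

```python
def quantize(values, scale, lo, hi):
    """Map real values to integers round(v / scale), clamped to [lo, hi]."""
    return [max(lo, min(hi, round(v / scale))) for v in values]


def vpdpbusd_lane(acc, a_u8, w_s8):
    """Emulate one 32-bit lane of VPDPBUSD (the non-saturating form).

    Four unsigned 8-bit activations are multiplied by four signed 8-bit
    weights, the four products are summed, and the sum is added to a signed
    32-bit accumulator, wrapping on overflow as the instruction does.
    """
    if len(a_u8) != 4 or len(w_s8) != 4:
        raise ValueError("each lane consumes exactly four byte pairs")
    if not all(0 <= a <= 255 for a in a_u8) or not all(-128 <= w <= 127 for w in w_s8):
        raise ValueError("operands must fit in u8 and s8")
    total = (acc + sum(a * w for a, w in zip(a_u8, w_s8))) & 0xFFFFFFFF
    return total - (1 << 32) if total & 0x80000000 else total


def int8_dot(activations, weights, a_scale, w_scale):
    """Approximate a float dot product using u8 x s8 products and an int32 sum."""
    if len(activations) != len(weights) or len(activations) % 4:
        raise ValueError("inputs must have equal length, a multiple of 4")
    a_q = quantize(activations, a_scale, 0, 255)   # non-negative, e.g. post-ReLU
    w_q = quantize(weights, w_scale, -127, 127)    # symmetric signed weights
    acc = 0
    for i in range(0, len(a_q), 4):                # four byte pairs per step
        acc = vpdpbusd_lane(acc, a_q[i:i + 4], w_q[i:i + 4])
    return acc * a_scale * w_scale                 # dequantize the int32 result


if __name__ == "__main__":
    acts = [0.5, 1.0, 1.5, 2.0, 0.25, 0.75, 1.25, 1.75]
    wts = [0.1, -0.2, 0.3, -0.4, 0.5, -0.6, 0.7, -0.8]
    exact = sum(a * w for a, w in zip(acts, wts))
    # Hypothetical scales: largest magnitude mapped to the top of each range.
    approx = int8_dot(acts, wts, a_scale=2.0 / 255, w_scale=0.8 / 127)
    print(f"fp32 reference: {exact:.4f}  int8 emulation: {approx:.4f}")
```

Accumulating in 32 bits is what keeps the many small 8-bit products from overflowing, so only a single rescale is needed at the end of each dot product.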

Enabling Deep Learning Use Cases

As a platform for building and deploying portable, scalable machine learning (ML) workflows, Kubeflow Pipelines* makes it easy to deploy DLRS images. This enables orchestration of machine learning pipelines, simplifies ML Ops, and helps manage numerous deep learning use cases and applications.

DLRS v7.0 also incorporates Transformers, a state-of-the-art general-purpose library that includes a number of pretrained models for Natural Language Understanding (NLU) and Natural Language Generation (NLG). The library helps users move seamlessly from pretrained or fine-tuned models to production. DLRS v7.0 incorporates Natural Language Processing (NLP) libraries to demonstrate that pretrained language models can be used to achieve state-of-the-art results [3] with ease. The included NLP libraries can be used for natural language processing, machine translation, and building embedding layers for transfer learning. For the PyTorch-based DLRS, this release also extends support for Flair [4], which can use Transformers models to apply NLP to text for tasks such as named entity recognition (NER), part-of-speech (PoS) tagging, sense disambiguation, and classification.

In addition to the Docker* deployment model, DLRS is integrated with Function-as-a-Service (FaaS) technologies, which are scalable, event-driven compute platforms. Frameworks such as Fn [5] and OpenFaaS [6] can dynamically manage and deploy event-driven, independent inference functions. We have created an OpenFaaS template (already available in the OpenFaaS template store) that integrates DLRS capabilities with this popular FaaS project. For DLRS v7, we have updated the template to use the latest version of DLRS with TensorFlow 1.15 and Ubuntu 20.04, but it can be extended to any of the other DLRS flavors.

End-to-end use cases for the Deep Learning Reference Stack help developers quickly prototype and bring up the stack in their environments. Some end-to-end use cases of such deployments are:

- Galaxies Identification: Demonstrates the use of the Deep Learning Reference Stack to detect and classify galaxies by their morphology using image processing and computer vision algorithms on Intel® Xeon® processors.

- Using AI to Help Save Lives: A Data Driven Approach for Intracranial Hemorrhage Detection: An AI training pipeline to help detect intracranial hemorrhage (ICH).

- GitHub Issue Classification Use Case: Uses the Deep Learning Reference Stack to automatically classify and tag GitHub issues.

- Pix2pix: Performs image-to-image translation using the end-to-end system stack.
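The FaaS integration described earlier packages inference as small, independent functions. The sketch below shows the shape of such a function, assuming the classic OpenFaaS `python3` template convention of a `handle(req)` function that receives the request body as a string and returns the response body. The DLRS OpenFaaS template's actual entry point may differ, and `predict` is a hypothetical stand-in for a model loaded from a DLRS image, returning a dummy score so the example runs without any ML framework.

```python
import json


def predict(features):
    """Hypothetical stand-in for a model call (e.g. a TensorFlow model in DLRS).

    Returns the mean of the inputs as a dummy score.
    """
    return {"score": sum(features) / max(len(features), 1)}


def handle(req):
    """OpenFaaS-style handler: JSON request body in, JSON response body out.

    Each invocation is independent and keeps no state between calls, which
    lets the FaaS platform scale instances up and down per event.
    """
    try:
        payload = json.loads(req)
        features = [float(x) for x in payload["features"]]
    except (ValueError, KeyError, TypeError) as exc:
        return json.dumps({"error": f"bad request: {exc}"})
    return json.dumps({"prediction": predict(features)})


if __name__ == "__main__":
    print(handle('{"features": [1, 2, 3]}'))
    print(handle("not json"))
```

In a real deployment the model would typically be loaded once at module import time rather than per request, so that warm invocations only pay for inference.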

Supported Tools and Frameworks

This release also supports the latest versions of popular developer tools and frameworks:

- Operating System: Ubuntu* 20.04, Ubuntu* 18.04, and CentOS* 8.0 Linux* distributions.

- Orchestration: Kubernetes* to manage and orchestrate containerized applications for multi-node clusters with Intel platform awareness.

- Containers: Docker* Containers and Kata* Containers with Intel® Virtualization Technology (Intel® VT) for enhanced protection.

- Libraries: oneAPI Deep Neural Network Library (oneDNN), an open-source performance library for deep learning applications. The library includes basic building blocks for neural networks optimized for Intel Architecture Processors and Intel Processor Graphics.

- Runtimes: Python and C++.

- Deployment: Kubeflow Seldon* and Kubeflow Pipelines* support the deployment of the Deep Learning Reference Stack.

- User Experience: JupyterLab*, a popular, easy-to-use, web-based editing tool.

Performance Gains

Multiple layers of the Deep Learning Reference Stack are performance-tuned for Intel® architecture, offering significant advantages over other stacks.

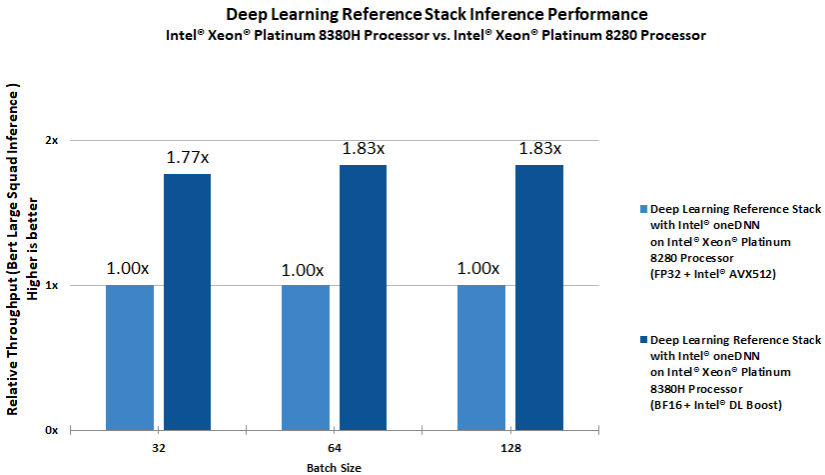

Performance gains on inference for the Deep Learning Reference Stack with Intel Optimized TensorFlow* and the BERT-Large model (dataset: SQuAD):

3rd Generation Intel® Xeon® Scalable platform – 4-socket Intel® Xeon® Platinum 8380H Processor (2.9GHz, 28 cores), HT On, Turbo On, Total Memory 1536 GB (24 slots/ 64GB/ 3200MHz), BIOS: WLYDCRB1.SYS.0016.P83.2007290544 (ucode:0x700001d), Ubuntu 20.04 LTS, Kernel 5.4.0-42-generic, Deep Learning Framework: Intel Optimized TensorFlow* (https://github.com/Intel-tensorflow/tensorflow/tree/bf16/base), BERT-Large SQuAD (https://github.com/IntelAI/models/blob/v1.6.1/benchmarks/language_modeling/tensorflow/bert_large/README.md#bfloat16-inference-instructions), Compiler: gcc v9.3.0, oneDNN version: v1.4.0, BS=32,64,128, SQuAD v1.1 dataset, 4 inference instances/4 sockets, Datatype: BF16

2nd Generation Intel® Xeon® Scalable platform – 4-socket Intel® Xeon® Platinum 8280 Processor (2.7GHz, 28 cores), HT On, Turbo On, Total Memory 768 GB (24 slots/ 32GB/ 2933MHz), BIOS: PLYXCRB1.86B.0602.D02.2002011026 (ucode:0x4002f00), Ubuntu 20.04 LTS, Kernel 5.4.0-42-generic, Deep Learning Framework: Intel Optimized TensorFlow* (https://github.com/Intel-tensorflow/tensorflow/tree/bf16/base), BERT-Large SQuAD (https://github.com/IntelAI/models/blob/v1.6.1/benchmarks/language_modeling/tensorflow/bert_large/README.md#fp32-inference-instructions), Compiler: gcc v9.3.0, oneDNN version: v1.4.0, BS=32,64,128, SQuAD v1.1 dataset, 4 inference instances/4 sockets, Datatype: FP32

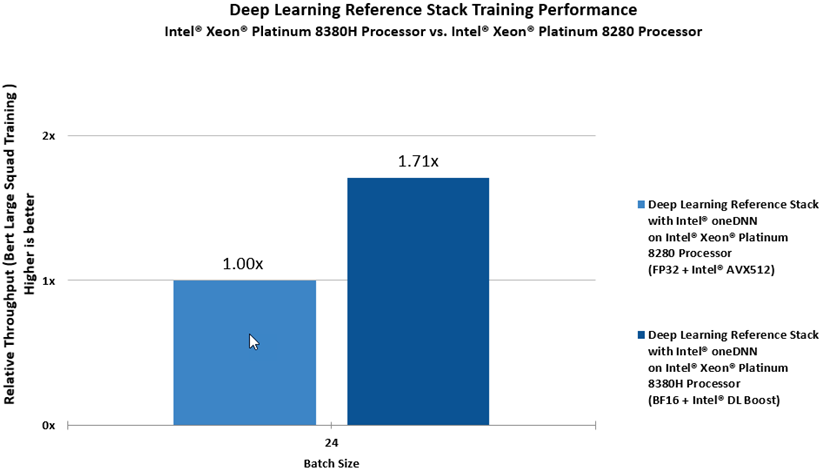

Performance gains on training for the Deep Learning Reference Stack with Intel Optimized TensorFlow* and the BERT-Large model (dataset: SQuAD):

3rd Generation Intel® Xeon® Scalable platform – 4-socket Intel® Xeon® Platinum 8380H Processor (2.9GHz, 28 cores), HT On, Turbo On, Total Memory 1536 GB (24 slots/ 64GB/ 3200MHz), BIOS: WLYDCRB1.SYS.0016.P83.2007290544 (ucode:0x700001d), Ubuntu 20.04 LTS, Kernel 5.4.0-42-generic, Deep Learning Framework: Intel Optimized TensorFlow* (https://github.com/Intel-tensorflow/tensorflow/tree/bf16/base), BERT-Large SQuAD (https://github.com/IntelAI/models/blob/v1.6.1/benchmarks/language_modeling/tensorflow/bert_large/README.md#bfloat16-training-instructions), Compiler: gcc v9.3.0, oneDNN version: v1.4.0, BS=24, SQuAD v1.1 dataset, 4 MPI processes/4 sockets, Datatype: BF16

2nd Generation Intel® Xeon® Scalable platform – 4-socket Intel® Xeon® Platinum 8280 Processor (2.7GHz, 28 cores), HT On, Turbo On, Total Memory 768 GB (24 slots/ 32GB/ 2933MHz), BIOS: PLYXCRB1.86B.0602.D02.2002011026 (ucode:0x4002f00), Ubuntu 20.04 LTS, Kernel 5.4.0-42-generic, Deep Learning Framework: Intel Optimized TensorFlow* (https://github.com/Intel-tensorflow/tensorflow/tree/bf16/base), BERT-Large SQuAD (https://github.com/IntelAI/models/blob/v1.6.1/benchmarks/language_modeling/tensorflow/bert_large/README.md#fp32-training-instructions), Compiler: gcc v9.3.0, oneDNN version: v1.4.0, BS=24, SQuAD v1.1 dataset, 4 MPI processes/4 sockets, Datatype: FP32

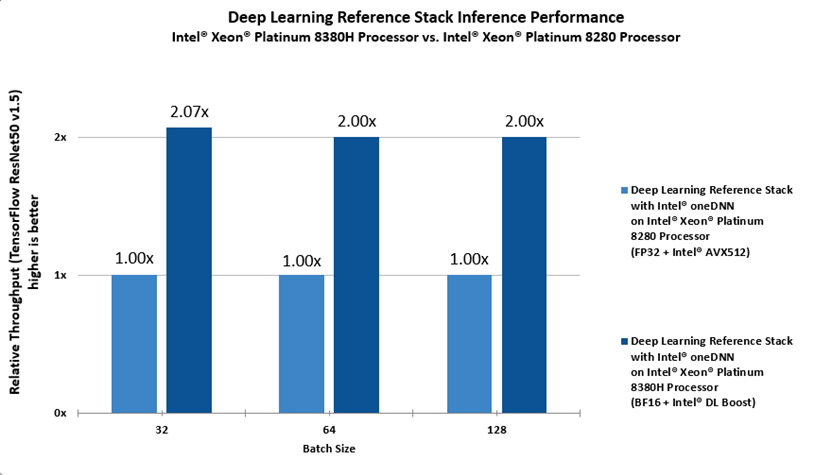

Performance gains on inference for the Deep Learning Reference Stack with TensorFlow 2.4.0 (2b8c0b1) and ResNet50 v1.5 as follows:

3rd Generation Intel® Xeon® Scalable platform – 4-socket Intel® Xeon® Platinum 8380H Processor (2.9GHz, 28 cores), HT On, Turbo On, Total Memory 1536 GB (24 slots/ 64GB/ 3200MHz), BIOS: WLYDCRB1.SYS.0016.P83.2007290544 (ucode:0x700001d), Ubuntu 20.04 LTS, Kernel 5.4.0-42-generic, Deep Learning Framework: TensorFlow* v2.4.0 hash id 2b8c0b1, ResNet50 v1.5, Compiler: gcc v9.3.0, oneDNN version: v1.5.1, BS=32,64,128, synthetic data, 4 inference instances/4 sockets, Datatype: BF16

2nd Generation Intel® Xeon® Scalable platform – 4-socket Intel® Xeon® Platinum 8280 Processor (2.7GHz, 28 cores), HT On, Turbo On, Total Memory 768 GB (24 slots/ 32GB/ 2933MHz), BIOS: PLYXCRB1.86B.0602.D02.2002011026 (ucode:0x4002f00), Ubuntu 20.04 LTS, Kernel 5.4.0-42-generic, Deep Learning Framework: TensorFlow* v2.4.0 hash id 2b8c0b1, ResNet50 v1.5 (https://github.com/IntelAI/models/blob/v1.8.0/benchmarks/image_recognition/tensorflow/resnet50v1_5/README.md#fp32-inference-instructions), Compiler: gcc v9.3.0, oneDNN version: v1.5.1, BS=32,64,128, synthetic data, 4 inference instances/4 sockets, Datatype: FP32

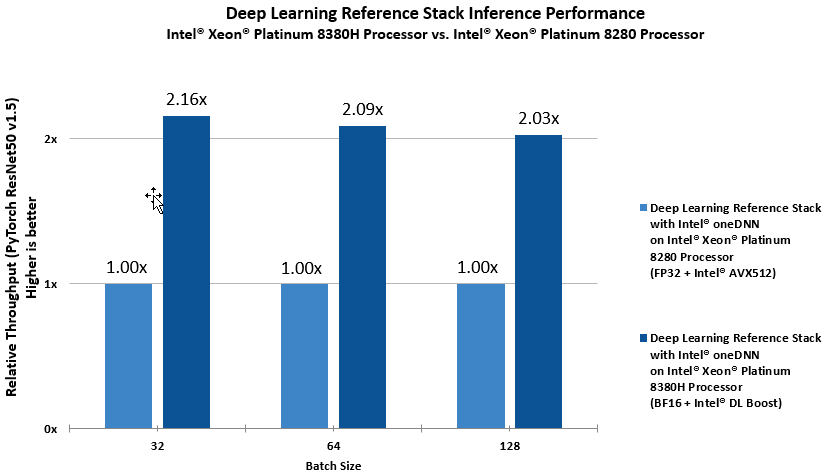

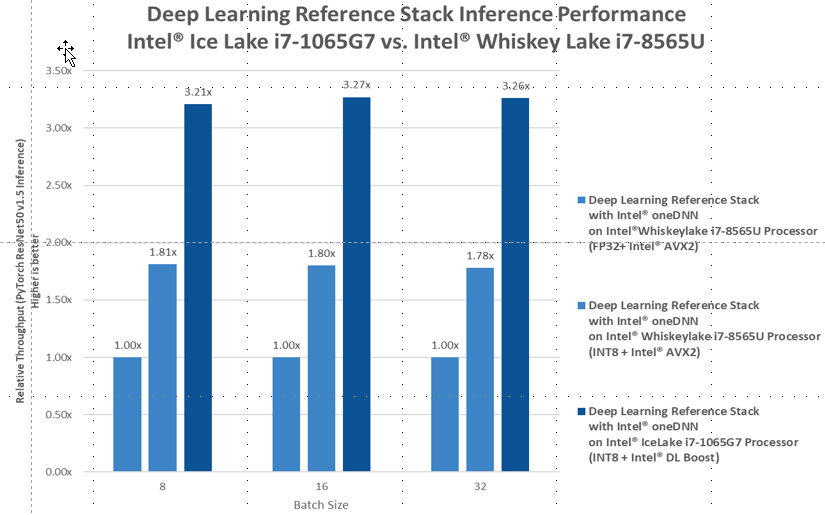

Performance gains on inference for the Deep Learning Reference Stack with PyTorch 1.7.0 (458ce5d) and ResNet50 v1.5 as follows:

3rd Generation Intel® Xeon® Scalable platform – 4-socket Intel® Xeon® Platinum 8380H Processor (2.9GHz, 28 cores), HT On, Turbo On, Total Memory 1536 GB (24 slots/ 64GB/ 3200MHz), BIOS: WLYDCRB1.SYS.0016.P83.2007290544 (ucode:0x700001d), Ubuntu 20.04 LTS, Kernel 5.4.0-42-generic, Deep Learning Framework: PyTorch* v1.7.0, hash id 458ce5d, ResNet50 v1.5 (https://github.com/intel/optimized-models/tree/master/pytorch/ResNet50#BF16), Compiler: gcc v9.3.0, oneDNN version: v1.4.0, BS=32,64,128, synthetic data, 4 inference instances/4 sockets, Datatype: BF16

2nd Generation Intel® Xeon® Scalable platform – 4-socket Intel® Xeon® Platinum 8280 Processor (2.7GHz, 28 cores), HT On, Turbo On, Total Memory 768 GB (24 slots/ 32GB/ 2933MHz), BIOS: PLYXCRB1.86B.0602.D02.2002011026 (ucode:0x4002f00), Ubuntu 20.04 LTS, Kernel 5.4.0-42-generic, Deep Learning Framework: PyTorch* v1.7.0, hash id 458ce5d, ResNet50 v1.5 (https://github.com/intel/optimized-models/tree/master/pytorch/ResNet50#FP32), Compiler: gcc v9.3.0, oneDNN version: v1.4.0, BS=32,64,128, synthetic data, 4 inference instances/4 sockets, Datatype: FP32

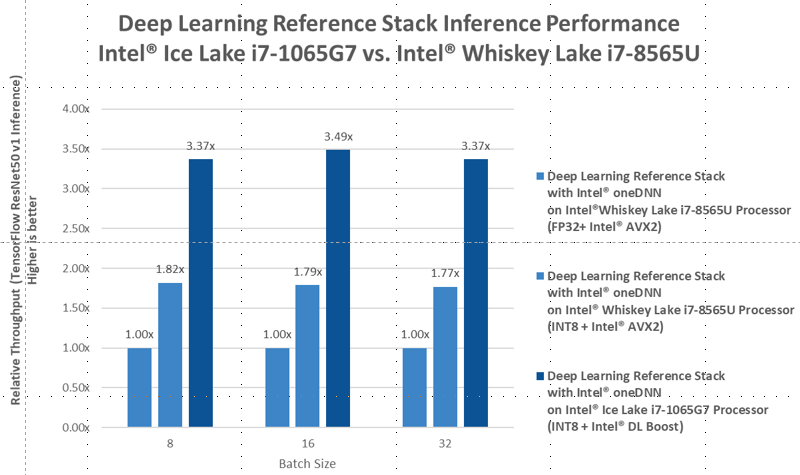

Performance gains for the Deep Learning Reference Stack with TensorFlow 2.4.0 and ResNet50 v1 on client systems as follows:

10th Gen Intel® Core™ processor – Intel® Core™ i7-1065G7 Processor (1.3GHz, 4 cores), Performance Mode, Total Memory 16 GB (2 slots/ 8GB/ 2666MHz), Ubuntu 18.04 LTS, Kernel 5.4.0-47-generic, Deep Learning Framework: TensorFlow* v2.4.0 hash id 2b8c0b1, ResNet50 v1.0, Compiler: gcc version 9.3.0, oneDNN v1.5.1, BS=8,16,32, synthetic data, 1 inference instance, Datatype: INT8

8th Gen Intel® Core™ processor – Intel® Core™ i7-8565U Processor (1.8GHz, 4 cores), Performance Mode, Total Memory 16 GB (1 slot/ 16GB/ 2400MHz), Ubuntu 18.04 LTS, Kernel 5.4.0-47-generic, Deep Learning Framework: TensorFlow* v2.4.0 hash id 2b8c0b1, ResNet50 v1.0, Compiler: gcc version 9.3.0, oneDNN v1.5.1, BS=8,16,32, synthetic data, 1 inference instance, Datatype: INT8 and FP32

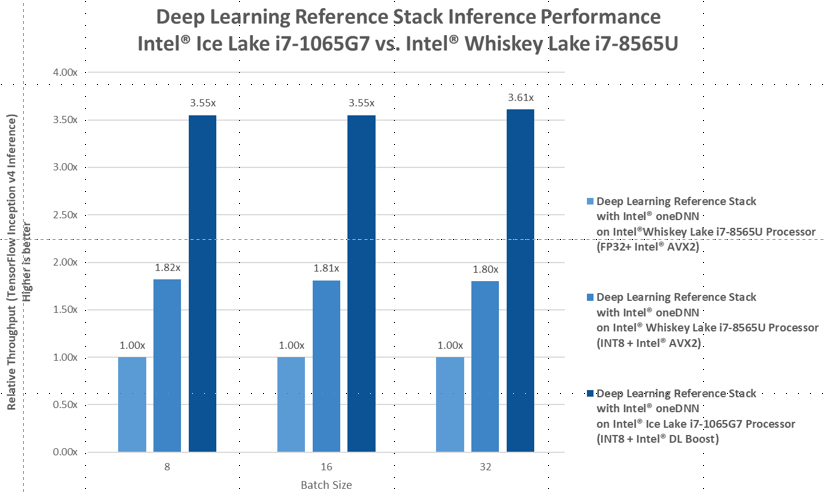

Performance gains for the Deep Learning Reference Stack with TensorFlow 2.4.0 and Inception v4 on client systems as follows:

10th Gen Intel® Core™ processor – Intel® Core™ i7-1065G7 Processor (1.3GHz, 4 cores), Performance Mode, Total Memory 16 GB (2 slots/ 8GB/ 2666MHz), Ubuntu 18.04 LTS, Kernel 5.4.0-47-generic, Deep Learning Framework: TensorFlow* v2.4.0 hash id 2b8c0b1, Inception v4, Compiler: gcc version 9.3.0, oneDNN v1.5.1, BS=8,16,32, synthetic data, 4 inference instance/4 socket, Datatype: INT8

8th Gen Intel® Core™ processor – Intel® Core™ i7-8565U Processor (1.8GHz, 4 cores), Performance Mode, Total Memory 16 GB (1 slot/ 16GB/ 2400MHz), Ubuntu 18.04 LTS, Kernel 5.4.0-47-generic, Deep Learning Framework: TensorFlow* v2.4.0 hash id 2b8c0b1, Inception v4, Compiler: gcc version 9.3.0, oneDNN v1.5.1, BS=8,16,32, synthetic data, 1 inference instance, Datatype: INT8 and FP32

Performance gains for the Deep Learning Reference Stack with PyTorch 1.7.0 and ResNet50 v1.5 on client systems as follows:

10th Gen Intel® Core™ processor – Intel® Core™ i7-1065G7 Processor (1.3GHz, 4 cores), Performance Mode, Total Memory 16 GB (2 slots/ 8GB/ 2666MHz), Ubuntu 18.04 LTS, Kernel 5.4.0-47-generic, Deep Learning Framework: PyTorch* v1.7.0, hash id 458ce5d, ResNet50 v1.5 (https://github.com/intel/optimized-models/tree/master/pytorch), Compiler: gcc version 9.3.0, oneDNN v1.5.1, BS=8,16,32, synthetic data, 1 inference instance, Datatype: INT8

8th Gen Intel® Core™ processor – Intel® Core™ i7-8565U Processor (1.8GHz, 4 cores), Performance Mode, Total Memory 16 GB (1 slot/ 16GB/ 2400MHz), Ubuntu 18.04 LTS, Kernel 5.4.0-47-generic, Deep Learning Framework: PyTorch* v1.7.0, hash id 458ce5d, ResNet50 v1.5 (https://github.com/intel/optimized-models/tree/master/pytorch), Compiler: gcc version 9.3.0, oneDNN v1.5.1, BS=8,16,32, synthetic data, 1 inference instance, Datatype: INT8 and FP32

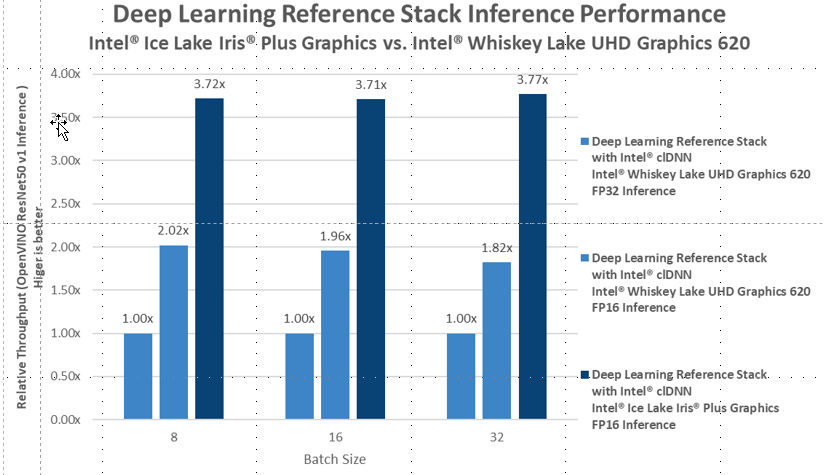

Performance gains for the Deep Learning Reference Stack with Intel® Distribution of OpenVINO™ Toolkit 2020.4 and ResNet50 v1 on Intel® Graphics as follows:

10th Gen Intel® Core™ processor – Intel® Core™ i7-1065G7 Processor (1.3GHz, 4 cores) w/ Intel® Iris® Plus Graphics, Performance Mode, Total Memory 16 GB (2 slots/ 8GB/ 3733MHz), Ubuntu 18.04 LTS, Kernel 5.4.0-47-generic, Deep Learning Toolkit: Intel® Distribution of OpenVINO™ Toolkit for Linux* 2020.4, ResNet50 v1.0 (https://docs.openvinotoolkit.org/latest/openvino_inference_engine_samples_benchmark_app_README.html), Compiler: gcc version 9.3.0, clDNN v2020.4, BS=8,16,32, ImageNet data, 1 inference instance, Datatype: FP16

8th Gen Intel® Core™ processor – Intel® Core™ i7-8565U Processor (1.8GHz, 4 cores) w/ Intel® UHD Graphics 620, Performance Mode, Total Memory 16 GB (1 slot/ 16GB/ 2400MHz), Ubuntu 18.04 LTS, Kernel 5.4.0-47-generic, Deep Learning Toolkit: Intel® Distribution of OpenVINO™ Toolkit for Linux* 2020.4, ResNet50 v1.0 (https://docs.openvinotoolkit.org/latest/openvino_inference_engine_samples_benchmark_app_README.html), Compiler: gcc version 9.3.0, clDNN v2020.4, BS=8,16,32, ImageNet data, 1 inference instance, Datatype: FP16 and FP32

Intel is dedicated to ensuring that popular frameworks and topologies run best on Intel® architecture, giving you the choice of the right solution for your needs. DLRS also drives innovation on our current Intel® Xeon® Scalable processors, and we plan to continue performance optimizations for coming generations.

Visit the Intel® Developer Zone page to learn more, download the Deep Learning Reference Stack code, and contribute feedback. As always, we welcome ideas for further enhancements through the stacks mailing list.

[1] https://software.intel.com/en-us/articles/OpenVINO-RelNotes

[2] https://software.intel.com/content/www/cn/zh/develop/articles/introduction-to-intel-deep-learning-boost-on-second-generation-intel-xeon-scalable.html

[3] https://ruder.io/nlp-imagenet/

[4] https://github.com/flairNLP/flair

[5] https://github.com/intel/stacks-usecase/tree/master/pix2pix/fn

[6] https://github.com/intel/stacks-usecase/tree/master/pix2pix/openfaas

Notices and Disclaimers

Software and workloads used in performance tests may have been optimized for performance only on Intel microprocessors. Performance tests, such as SYSmark and MobileMark, are measured using specific computer systems, components, software, operations and functions. Any change to any of those factors may cause the results to vary. You should consult other information and performance tests to assist you in fully evaluating your contemplated purchases, including the performance of that product when combined with other products. For more complete information, visit https://www.intel.com/benchmarks.

Performance results are based on testing as of 09/20/2020 and may not reflect all publicly available updates. No product or component can be absolutely secure.

Optimization Notice: Intel’s compilers may or may not optimize to the same degree for non-Intel microprocessors for optimizations that are not unique to Intel microprocessors. These optimizations include SSE2, SSE3, and SSSE3 instruction sets and other optimizations. Intel does not guarantee the availability, functionality, or effectiveness of any optimization on microprocessors not manufactured by Intel. Microprocessor-dependent optimizations in this product are intended for use with Intel microprocessors. Certain optimizations not specific to Intel microarchitecture are reserved for Intel microprocessors. Please refer to the applicable product User and Reference Guides for more information regarding the specific instruction sets covered by this notice.

Your costs and results may vary.

Intel technologies may require enabled hardware, software or service activation.

Intel, the Intel logo, OpenVINO and Intel Xeon are trademarks of Intel Corporation or its subsidiaries in the U.S. and/or other countries. Intel and Iris are trademarks of Intel Corporation or its subsidiaries. *Other names and brands may be claimed as the property of others.

© Intel Corporation