Introduction

Measuring the performance of Open vSwitch* (OvS), or of any other vSwitch, can be difficult without resorting to expensive commercial traffic generators or, at the least, some extra network nodes on which to run a software traffic generator.

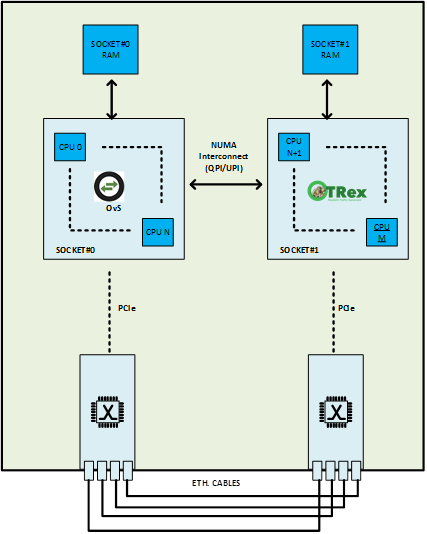

However, on dual-socket or multi-socket systems there is another option: the software traffic generator can run on one socket while OvS, the System Under Test (SUT), runs on the other. Because they are on separate sockets, the two work well without interfering with each other: each uses memory attached to its own socket and its own PCIe bus. The only extra hardware required is a second network interface card (NIC) to drive the generated traffic without interfering with the NIC and PCIe bus of the SUT.

This article shows you how to set up this configuration using the TRex Realistic Traffic Generator.

The overall system looks like this:

Figure 1. Overall system configuration.

Setting up the Hardware

It is important to choose the correct PCIe* slots for your NICs. For instance, to be able to handle the incoming traffic from even one 10 Gbps NIC you need a PCIe Gen3 NIC on an 8-lane bus. See Understanding Performance of PCI Express Systems.
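As a rough illustration of why the slot matters, the raw per-direction bandwidth of a PCIe link is the per-lane transfer rate times the line-encoding efficiency times the number of lanes. The sketch below uses the Gen1 to Gen3 transfer rates and encodings; it deliberately ignores packet (TLP) and link-layer overheads, which reduce usable DMA throughput further, as discussed in Understanding Performance of PCI Express Systems. Treat its results as upper bounds only.

```python
# Per-lane transfer rate (GT/s) and line-encoding efficiency for PCIe Gen1-3.
PCIE_GENS = {
    1: (2.5, 8 / 10),     # 8b/10b encoding
    2: (5.0, 8 / 10),     # 8b/10b encoding
    3: (8.0, 128 / 130),  # 128b/130b encoding
}


def pcie_link_gbps(gen: int, lanes: int) -> float:
    """Per-direction link bandwidth in Gbit/s after line encoding only.

    Protocol overhead (TLP headers, DLLPs, flow control) is ignored, so
    real DMA throughput will be noticeably lower than this figure.
    """
    rate_gt_s, efficiency = PCIE_GENS[gen]
    return rate_gt_s * efficiency * lanes


if __name__ == "__main__":
    for gen, lanes in [(2, 4), (2, 8), (3, 4), (3, 8)]:
        print(f"Gen{gen} x{lanes}: {pcie_link_gbps(gen, lanes):.1f} Gbit/s per direction")
```

For example, a Gen3 x8 link works out at roughly 63 Gbit/s per direction before protocol overhead, compared with 32 Gbit/s for Gen2 x8.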

For this article, I used a system based on an Intel® Server Board S2600WT.

```shell
$ sudo dmidecode -t system
# dmidecode 3.0
Getting SMBIOS data from sysfs.
SMBIOS 2.7 present.

Handle 0x0001, DMI type 1, 27 bytes
System Information
        Manufacturer: Intel Corporation
        Product Name: S2600WT2
…
```

I can use this information to look up the technical specification for the board and see exactly which PCIe slots will give sufficient bandwidth while placing the two NICs on different sockets.

The system will be functional even if the PCIe placement is wrong; however, the direct memory access (DMA) will have to cross the socket interconnect, which will become a bottleneck and the performance observed by TRex will not be accurate.
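Once the NICs are installed, Linux reports the NUMA node (socket) each PCI device is attached to in sysfs, which gives a quick check on the slot choice. The minimal sketch below reads that value, assuming the standard Linux sysfs layout; the PCI addresses you pass in are your own (for example, from `lspci`), and the kernel reports -1 when it has no locality information for the device. The `sysfs_root` parameter exists only so the function can be pointed at a test directory.

```python
from pathlib import Path


def pci_numa_node(pci_addr: str, sysfs_root: str = "/sys") -> int:
    """Return the NUMA node a PCI device is attached to.

    Reads <sysfs_root>/bus/pci/devices/<pci_addr>/numa_node.
    A result of -1 means the kernel does not know the device's locality.
    """
    path = Path(sysfs_root) / "bus" / "pci" / "devices" / pci_addr / "numa_node"
    return int(path.read_text().strip())
```

For example, `pci_numa_node("0000:81:00.0")` (a hypothetical address) should return 1 for a NIC in a slot wired to socket 1, and the OvS NIC should report the other node.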

Host Configuration

You should also be aware of the implications of other host configuration options that affect Data Plane Development Kit (DPDK) applications: hyper-threading and core ID to socket mapping, core isolation, and so on. These are covered in Open vSwitch with DPDK (OvS-DPDK).

Take care to ensure that the cores assigned to TRex and the cores assigned to OvS-DPDK are on the correct sockets (that is, on the same socket as their assigned NICs). Be aware that if hyper-threading is enabled, the core (strictly speaking, the "logical CPU") to socket mapping is more complex. If you use a program such as htop to verify which cores are running poll-mode drivers (PMDs), also be aware that TRex uses virtually no CPU to receive packets (as they actually terminate on the NIC) and only uses a noticeable amount of CPU to transmit packets. So, if TRex is not actively transmitting, htop will not report its CPUs as being under load.
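To see which logical CPUs belong to which socket, `lscpu` lists the CPUs of each NUMA node, and the same information can be read from sysfs. The sketch below groups logical CPUs by their `physical_package_id`, assuming the standard Linux sysfs layout (offline CPUs without a topology directory are simply skipped). With hyper-threading enabled, the sibling threads of each core appear as separate logical CPUs in the same socket's list.

```python
from collections import defaultdict
from pathlib import Path


def cpus_by_socket(sysfs_root: str = "/sys") -> dict:
    """Group logical CPU ids by physical package (socket) id."""
    sockets = defaultdict(list)
    cpu_dir = Path(sysfs_root) / "devices" / "system" / "cpu"
    # "cpu[0-9]*" matches cpu0, cpu17, ... but not cpufreq or cpuidle.
    for pkg_file in cpu_dir.glob("cpu[0-9]*/topology/physical_package_id"):
        cpu_id = int(pkg_file.parent.parent.name[len("cpu"):])
        sockets[int(pkg_file.read_text())].append(cpu_id)
    return {sock: sorted(cpus) for sock, cpus in sorted(sockets.items())}
```

The CPU ids you put in the TRex configuration and in the OvS-DPDK PMD core mask should all come from the list of the socket that hosts the corresponding NIC.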

Setting up TRex

The "First time Running" section of the TRex manual has a good sanity-check tutorial to ensure TRex is installed and working correctly. There is also a list of other TRex documentation worth skimming to get an idea of its other functionality.

Once TRex is running successfully in loop-back mode, connect the OvS and TRex NICs directly.

This article assumes you are already comfortable setting up Open vSwitch with DPDK (OvS-DPDK). If not, the process is documented in the OvS documentation under Open vSwitch with DPDK. Only changes to the standard OvS-DPDK configuration are described below.

Hugepages

TRex uses DPDK, which requires hugepages. If hugepages are not configured on your system, TRex configures them for you automatically. Unfortunately, it does not account for other users of hugepages such as OvS-DPDK, so we need to set up and mount the hugepages manually beforehand to prevent TRex from reconfiguring everything when it starts up.

Create 4 GB of 2 MB hugepages (i.e., 2048 x 2 MB hugepages) on each socket:

```shell
root# echo 2048 > /sys/devices/system/node/node0/hugepages/hugepages-2048kB/nr_hugepages
root# echo 2048 > /sys/devices/system/node/node1/hugepages/hugepages-2048kB/nr_hugepages
```

If not already mounted, mount the hugepages:

```shell
root# mkdir -p /dev/hugepages
root# mount -t hugetlbfs nodev /dev/hugepages
```
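After allocating and mounting, you can confirm that each socket actually received its pages, and later watch how many each application consumes, by reading the per-node counters in sysfs. This is a small sketch assuming 2 MB pages and the standard Linux sysfs layout:

```python
from pathlib import Path


def hugepages_per_node(sysfs_root: str = "/sys", size_kb: int = 2048) -> dict:
    """Return {node: (total, free)} for hugepages of the given size."""
    result = {}
    node_dir = Path(sysfs_root) / "devices" / "system" / "node"
    for node in node_dir.glob("node[0-9]*"):
        hp = node / "hugepages" / f"hugepages-{size_kb}kB"
        if not hp.is_dir():
            continue  # this page size is not available on this node
        total = int((hp / "nr_hugepages").read_text())
        free = int((hp / "free_hugepages").read_text())
        result[int(node.name[len("node"):])] = (total, free)
    return result
```

With the allocation above, and before OvS-DPDK or TRex is started, each node should report (2048, 2048); the free count drops as the applications map their memory.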

Because TRex and OvS-DPDK both create their hugepage-backed files via DPDK, each needs to be given its own file prefix instead of DPDK's default, so that their files do not collide.

For OvS, when ovsdb is running but before vswitchd is started:

```shell
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-extra="--file-prefix=ovs"
```

For TRex, edit trex_cfg.yaml:

```yaml
- port_limit : 4
  prefix     : trex
```

Without this step you will get errors such as "EAL: Can only reserve 1857 pages from 4096 requested. Current CONFIG_RTE_MAX_MEMSEG=256 is not enough", with the details depending on whether you start OvS or TRex first.

You will be able to see the different hugepage-backed memory-mapped files that DPDK created for each application in /dev/hugepages as ovsmap_NNN and trexmap_NNN.
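A quick way to confirm that the prefixes took effect is to count the files per prefix. The sketch below assumes the `<prefix>map_` naming described above and DPDK's default prefix `rte`; any `rtemap_` files would suggest that one of the applications started without its prefix.

```python
from collections import Counter
from pathlib import Path


def hugepage_files_by_prefix(hugepage_dir: str = "/dev/hugepages",
                             prefixes=("ovs", "trex", "rte")) -> Counter:
    """Count DPDK hugepage-backed files in hugepage_dir per file prefix."""
    counts = Counter()
    for entry in Path(hugepage_dir).iterdir():
        for prefix in prefixes:
            if entry.name.startswith(prefix + "map_"):
                counts[prefix] += 1
    return counts
```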

Other TRex Configurations

Although marked as optional in the TRex documentation, when TRex is used with OvS-DPDK you will need to limit its memory use.

```yaml
  limit_memory : 1024   # MB
```

When using more than one core per interface to generate traffic I found:

- The configuration item "c" must be set.

- The master and latency thread IDs must be set to ensure they run on the "TRex" socket and not the "OvS-DPDK" socket. I have used socket 1 as the TRex socket.

- The length of the “threads” lists must be equal to the value of c.

- Multiple cores are assigned to an interface by using multiple threads lists. The configuration below assigns cores 16 and 20 to the first interface, 17 and 21 to the second, and so on.

Therefore, you will need a configuration something like:

```yaml
  c : 4
  …
  platform :
      master_thread_id : 14
      latency_thread_id : 15
      dual_if :
        - socket : 1
          threads : [16, 17, 18, 19]

        - socket : 1
          threads : [20, 21, 22, 23]
```
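The rules listed above are easy to break when editing trex_cfg.yaml by hand, so a small check can help. The sketch below is not part of TRex: it takes one configuration entry as a Python dict (the shape `yaml.safe_load` from PyYAML would return for it) plus the set of logical CPUs on the TRex socket, which you supply yourself (for example, from `lscpu`), and returns a list of violations.

```python
def check_trex_threads(cfg: dict, trex_socket_cpus: set, trex_socket: int = 1) -> list:
    """Return the problems found in a trex_cfg.yaml entry (empty list if none)."""
    problems = []
    c = cfg.get("c")
    if c is None:
        problems.append("'c' is not set")
    platform = cfg.get("platform", {})
    # Master and latency threads must live on the TRex socket.
    for key in ("master_thread_id", "latency_thread_id"):
        cpu = platform.get(key)
        if cpu not in trex_socket_cpus:
            problems.append(f"{key}={cpu} is not on the TRex socket")
    for i, dual_if in enumerate(platform.get("dual_if", [])):
        threads = dual_if.get("threads", [])
        if dual_if.get("socket") != trex_socket:
            problems.append(f"dual_if[{i}] socket is {dual_if.get('socket')}")
        # Each threads list must have exactly c entries.
        if c is not None and len(threads) != c:
            problems.append(f"dual_if[{i}] has {len(threads)} threads, c is {c}")
        for cpu in threads:
            if cpu not in trex_socket_cpus:
                problems.append(f"dual_if[{i}] thread {cpu} is not on the TRex socket")
    return problems


# The example configuration above, written as the equivalent Python dict.
EXAMPLE_CFG = {
    "c": 4,
    "platform": {
        "master_thread_id": 14,
        "latency_thread_id": 15,
        "dual_if": [
            {"socket": 1, "threads": [16, 17, 18, 19]},
            {"socket": 1, "threads": [20, 21, 22, 23]},
        ],
    },
}
```

If socket 1 owned logical CPUs 14 to 27 (a hypothetical layout), `check_trex_threads(EXAMPLE_CFG, set(range(14, 28)))` would return an empty list.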

For the wiring and OvS forwarding configuration described above, I also set port_bandwidth_gb and explicitly set the src_mac and dest_mac of each port, so that the source MAC address each port sends with matches the destination MAC address of the traffic it receives. These items may not be strictly required:

```yaml
  port_bandwidth_gb : 10
  port_info :
      - dest_mac : 00:03:47:00:01:02
        src_mac  : 00:03:47:00:01:01
      - dest_mac : 00:03:47:00:01:01
        src_mac  : 00:03:47:00:01:02
      - dest_mac : 00:03:47:00:00:02
        src_mac  : 00:03:47:00:00:01
      - dest_mac : 00:03:47:00:00:01
        src_mac  : 00:03:47:00:00:02
```
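The port_info entries follow a mirrored pattern: within each pair of ports (0 and 1, 2 and 3), each port's dest_mac is its peer's src_mac. A hypothetical helper to check that pattern, assuming ports are paired in that order, could look like this:

```python
def mirrored_pairs(port_info: list) -> bool:
    """Check that ports (0,1), (2,3), ... have mirrored MAC addresses."""
    if len(port_info) % 2:
        return False  # an unpaired port cannot be mirrored
    for a, b in zip(port_info[0::2], port_info[1::2]):
        # Frames sent by one port must be addressed to its peer, and vice versa.
        if a["dest_mac"] != b["src_mac"] or b["dest_mac"] != a["src_mac"]:
            return False
    return True


# The port_info list above, as the equivalent Python data.
PORT_INFO = [
    {"dest_mac": "00:03:47:00:01:02", "src_mac": "00:03:47:00:01:01"},
    {"dest_mac": "00:03:47:00:01:01", "src_mac": "00:03:47:00:01:02"},
    {"dest_mac": "00:03:47:00:00:02", "src_mac": "00:03:47:00:00:01"},
    {"dest_mac": "00:03:47:00:00:01", "src_mac": "00:03:47:00:00:02"},
]
```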

Running TRex

TRex can now be run in the usual ways, such as:

```shell
sudo ./t-rex-64 -f cap2/dns.yaml -m 250kpps
```

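Alternatively, the TRex Python* bindings can be used for much finer-grained control, letting you create your own application-specific traffic generator. These bindings drive TRex in stateless mode, so the TRex server needs to be running in interactive mode (sudo ./t-rex-64 -i).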

Creating Your Own Traffic Generator

By using the Python bindings, it becomes very simple to write your own traffic generator command-line interface (CLI) tailored to your own use case. This can make tasks that are slow via a traditional GUI easily repeatable and modifiable. For instance, when I needed to change the total load offered to OvS while maintaining a fixed ratio of offered load across several ports, it was straightforward to write a CLI on top of TRex that looked like this:

```
$ ./mytest.py
(Cmd) dist 1 2 3 4          <<< per port load ratio 1:2:3:4
Dist ratio is [1, 2, 3, 4]
(Cmd) start 1000            <<< total load 1000 kpps
-f stl/traffic.py -m 100kpps --port 0 --force
-f stl/traffic.py -m 200kpps --port 1 --force
-f stl/traffic.py -m 300kpps --port 2 --force
-f stl/traffic.py -m 400kpps --port 3 --force
(Cmd) stats                 <<< check loss & latency
Stats from last 4.5s
0->1 offered 98 dropped 0 rxd 98 (kpps) => 0% loss
1->0 offered 196 dropped 0 rxd 196 (kpps) => 0% loss
2->3 offered 295 dropped 0 rxd 295 (kpps) => 0% loss
3->2 offered 393 dropped 0 rxd 393 (kpps) => 0% loss
0->1 average 8 jitter 0 total_max 13 (us)
1->0 average 13 jitter 1 total_max 20 (us)
2->3 average 15 jitter 3 total_max 46 (us)
3->2 average 12 jitter 3 total_max 88 (us)
18 3 25 29 7 48 931 4 984 948 5 985      <<< excel pastable format!
(Cmd) start 10000           <<< increase total load while keeping distribution.
-f stl/traffic.py -m 1000kpps --port 0 --force
-f stl/traffic.py -m 2000kpps --port 1 --force
-f stl/traffic.py -m 3000kpps --port 2 --force
-f stl/traffic.py -m 4000kpps --port 3 --force
(Cmd) stats
Stats from last 2.3s
0->1 offered 987 dropped 0 rxd 988 (kpps) => 0% loss
1->0 offered 1964 dropped 0 rxd 1964 (kpps) => 0% loss
2->3 offered 2927 dropped 886 rxd 2040 (kpps) => 30% loss
3->2 offered 3879 dropped 1847 rxd 2031 (kpps) => 47% loss
0->1 average 18 jitter 3 total_max 25 (us)
1->0 average 29 jitter 7 total_max 48 (us)
2->3 average 931 jitter 4 total_max 984 (us)
3->2 average 948 jitter 5 total_max 985 (us)
```

Something like this was very slow and error-prone to do on a commercial traffic generator, but simple with TRex and a small amount of Python.
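The script that follows elides how the `start` command turns a total rate into per-port rates. One straightforward way, shown here as a hypothetical sketch rather than the author's code, is to split the total in proportion to the `dist` ratio and round each share to a whole kpps value when building the start lines that are passed to the client:

```python
def split_rate(total_kpps: float, ratio: list) -> list:
    """Split a total offered load across ports in proportion to ratio."""
    weight = sum(ratio)
    if weight <= 0:
        raise ValueError("ratio must have a positive sum")
    return [total_kpps * r / weight for r in ratio]


def start_lines(total_kpps: float, ratio: list, profile: str = "stl/traffic.py") -> list:
    """Build one console-style TRex start line per port (hypothetical helper)."""
    return ["-f %s -m %dkpps --port %d --force" % (profile, round(rate), port)
            for port, rate in enumerate(split_rate(total_kpps, ratio))]
```

With the ratio 1:2:3:4, a total of 1000 kpps gives 100, 200, 300 and 400 kpps, matching the transcript. Because each share is rounded, the per-port rates may not sum exactly to the requested total for other ratios.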

An elided version of the script with additional comments explaining the use of the TRex API and the Python cmd module follows:

```python
# TRex stateless API; the trex_stl_lib directory must be on the Python path
from trex_stl_lib.api import *
import cmd     # See https://docs.python.org/2/library/cmd.html
import pprint  # used by do_stats


def main():
    # connect to the server
    c = STLClient(server='127.0.0.1')
    c.connect()
    # enter the cli loop
    MyCmd(c).cmdloop()

# ...


class MyCmd(cmd.Cmd):

    def __init__(self, client):
        # standard cmd boilerplate
        cmd.Cmd.__init__(self)
        # keep the TRex client for the do_* commands below
        self.client = client

    # cmd invokes this when you type 'start ...' on the cli.
    # The docstring is also the help string for the 'start' command!
    def do_start(self, line):
        """start <total offered rate kpps>
        Start traffic. Total kpps across all ports. e.g. 'start 10'
        """
        argv = line.split()
        argc = len(argv)
        if argc != 1:
            self.do_help('start')
            return 0  # 1 halts the cmd loop; 0 gets another command

        # ... port-to-rate mapping set up here (elided); it yields `rates`,
        # the offered rate in kpps for each port

        for port, rate in enumerate(rates):
            # traffic.py is based on the TRex sample stl/cap.py
            start_line = "-f stl/traffic.py -m %skpps --port %d --force" % (rate, port)
            # tell the TRex server to start the stream on port at rate
            rc = self.client.start_line(start_line)

    # cmd invokes this when you type 'dist ...' on the cli
    def do_dist(self, line):
        """Set traffic dist across 4 ports e.g. 'dist 2 1 1 1' """
        # ... rate distribution is parsed and stored here (elided)

    def do_stop(self, line):
        """Stop all traffic"""
        rc = self.client.stop(rx_delay_ms=100)  # returns None

    def do_stats(self, line):
        """Display pertinent stats"""
        pp = pprint.PrettyPrinter(indent=4)
        stats = self.client.get_stats()
        # pretty printing is a fast way to see the stats' complicated internals!
        pp.pprint(stats)
        # ... skip the gory details of parsing stats (elided)

    def do_quit(self, line):
        return 1  # returning a true value tells the cmd base class to exit


if __name__ == '__main__':
    main()
```
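The script also skips how the loss figures are derived from the statistics. As a hypothetical sketch of that last arithmetic step only (it does not extract anything from the `get_stats()` result, whose layout is not shown here), loss can be expressed as dropped over offered, truncated to a whole percentage. This reproduces the figures in the transcript: for example, 886 of 2927 kpps dropped gives 30%, and 1847 of 3879 kpps gives 47%.

```python
def loss_percent(offered: float, dropped: float) -> int:
    """Packet loss as a whole percentage, truncated (0 if nothing was offered)."""
    if offered <= 0:
        return 0
    return int(100 * dropped / offered)


def format_loss(src: int, dst: int, offered: float, dropped: float, rxd: float) -> str:
    """Format one direction's rates (kpps) in the style of the CLI output."""
    return "%d->%d offered %d dropped %d rxd %d (kpps) => %d%% loss" % (
        src, dst, offered, dropped, rxd, loss_percent(offered, dropped))
```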

Summary

A software-based traffic generator such as TRex can be used to test vSwitches with very little extra hardware – just a DPDK-compatible NIC. Also, by using the TRex Python bindings it is simple to write a traffic generator CLI that is tailored to your own use case. This can make test scenarios that are slow and error-prone to do via a traffic generator GUI easily repeatable and modifiable, enabling fast exploratory performance testing.

About the Author

Billy O’Mahony is a network software engineer with Intel. He has worked on the Open Platform for Network Functions Virtualization (OPNFV) project and accelerated software switching solutions in the user space running on Intel® architecture. His contributions to Open vSwitch with DPDK include Ingress Scheduling and RXQ/PMD Assignment.

References

Understanding Performance of PCI Express Systems

Intel® Server Board S2600WT - Technical Product Specification
