As JavaScript* applications become more sophisticated, developers are increasingly looking for ways to optimize performance. Single Instruction Multiple Data (SIMD) operations enable you to process multiple data items at the same time when “data-parallelism,” the mutual independence of data, exists. In the past, these operations have been limited to low-level languages and languages that can map closely to the architecture, such as C/C++. Using SIMD.js, these operations are now available to use directly from JavaScript code. This enables JavaScript developers to easily exploit the hardware capabilities of the underlying architecture to significantly improve the performance of code that can benefit from data parallelism. Developers can also easily translate SIMD-optimized algorithms from languages like C/C++ to JavaScript.

In this article, we provide examples of SIMD operations, show you how to enable SIMD.js in Microsoft Edge* and ChakraCore*, and provide tips for writing JavaScript code that will avoid performance cliffs.

Understanding SIMD

Since 1997, Intel has been adding instructions to its processors to perform the same operation on multiple data items in parallel. These SIMD operations can accelerate performance in applications such as rendering calculations, 3D object manipulations, encryption, physics engines, compression/decompression algorithms, and image processing.

As a basic example of how SIMD works, consider adding two arrays so that C[i] = A[i] + B[i] for the entire dataset. A simple solution is to iterate over each pair of elements and perform the addition sequentially. The processor has a SIMD operation, though, that can enable you to perform an addition on multiple independent data chunks at the same time. If you process four data items at the same time, the process could be made up to four times faster. Essentially, the array is divided up into smaller fixed-size arrays, sometimes referred to as vectors.

SIMD operations are commonly used in C/C++, and in certain cases the compiler can automatically vectorize the code. The GCC* and Clang* compilers provide “vector_size” and “ext_vector_type” attributes for declaring vector types in C/C++ code that has data parallelism. The Intel® C++ Compiler and Intel® Fortran Compiler offer the “#pragma simd” directive for requesting that loops be vectorized. Array notation is another Intel-specific language extension in Intel C++ Compilers that enables users to point to the data-parallel code that the compiler should try to auto-vectorize. In other cases, intrinsics can be added in the source code to explicitly indicate where vectorization should be used. Intrinsics are language constructs that map directly to sequences of instructions on the underlying architecture. Because intrinsics are specific to a particular architecture, they should only be used if other approaches are not possible.

Intel® architecture supports a range of SIMD operation sets, starting with MMX™ technology (introduced in 1997), going through Intel® Streaming SIMD Extensions (Intel® SSE, Intel® SSE2, Intel® SSE3, Intel® SSE4.1, and Intel® SSE4.2) and Supplemental Streaming SIMD Extensions 3, and most recently with Intel® Advanced Vector Extensions, Intel® Advanced Vector Extensions 2, and Intel® Advanced Vector Extensions 512. SIMD operations are supported on both Intel® Core™ and Intel® Atom™ processors today.

Why create SIMD.js?

Over the years, improvements in compilers and managed runtimes have significantly reduced the performance gap between JavaScript and native apps. One limitation, though, is that JavaScript is a managed language and so does not have direct access to the hardware. The execution engine abstracts away the hardware details, preventing access to the SIMD operations.

JavaScript expressions map less closely to the architecture than C expressions and the JavaScript language is dynamically typed, both of which make it difficult for a compiler to automatically vectorize loops.

To enable JavaScript programmers to use SIMD, Intel has worked with Mozilla, Google, Microsoft, and ARM to bring SIMD.js to JavaScript. SIMD.js is a set of JavaScript extension APIs that expose the SIMD operations of the underlying architecture. This makes it possible for existing C/C++ programs with intrinsics to be easily translated (by hand) to JavaScript and run in the browser, or for new JavaScript code to be written using SIMD operations.

SIMD.js: A simple array addition example

SIMD.js has been designed to cover the common overlap between the major architectures that support SIMD instructions: Intel SSE2 and ARM Neon*. Architectures that do not support the SIMD operations are still supported by SIMD.js, but they will not be able to match the performance of a SIMD architecture.

Table 1 shows some example JavaScript code for adding two arrays, and Table 2 shows how the same can be achieved using SIMD.js. SIMD.js supports 128-bit vectors, and since we are operating on 32-bit integer values, we can perform four addition operations at the same time. This potentially accelerates the computation by a factor of four.

    /* Set up the integer arrays A, B, C */
    var A = new Int32Array(size);
    var B = new Int32Array(size);
    var C = new Int32Array(size);

    for (var i = 0; i < size; i++)
    {
        C[i] = A[i] + B[i];
    }

Table 1: JavaScript* scalar/sequential array addition.

    /* Set up the integer arrays A, B, C (size is assumed to be a multiple of 4) */
    var A = new Int32Array(size);
    var B = new Int32Array(size);
    var C = new Int32Array(size);

    /* Note that i increases by 4 each iteration */
    for (var i = 0; i < size; i += 4)
    {
        /* load vector of four integers from A, starting at index i, into variable x */
        var x = SIMD.Int32x4.load(A, i);

        /* load vector of four integers from B, starting at index i, into variable y */
        var y = SIMD.Int32x4.load(B, i);

        /* SIMD addition operation on vectors x and y */
        var z = SIMD.Int32x4.add(x, y);

        /* SIMD operation to store the vector z results in array C */
        SIMD.Int32x4.store(C, i, z);
    }

Table 2: SIMD.js example of array addition.
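Table 2 steps through the arrays four elements at a time, so it implicitly assumes that the array length is a multiple of four. The following is a minimal sketch of one way to handle other lengths: a vector loop covers the largest multiple of four, and a scalar loop finishes the remaining elements. The function name addArrays and the typeof SIMD feature test are illustrative assumptions rather than part of the original example; the feature test only lets the same code run on engines that do not expose SIMD.js.

```javascript
/* Hypothetical helper: element-wise C = A + B for Int32Array inputs of equal length. */
function addArrays(A, B, C) {
  var size = C.length;
  var i = 0;

  /* Assumption: SIMD.js is exposed as a global named SIMD when it is available. */
  if (typeof SIMD !== 'undefined') {
    /* Largest multiple of 4 that fits in the array */
    var vectorEnd = size - (size % 4);
    for (; i < vectorEnd; i += 4) {
      var x = SIMD.Int32x4.load(A, i);
      var y = SIMD.Int32x4.load(B, i);
      SIMD.Int32x4.store(C, i, SIMD.Int32x4.add(x, y));
    }
  }

  /* Scalar loop: handles the tail, or the whole array when SIMD.js is absent */
  for (; i < size; i++) {
    C[i] = A[i] + B[i];
  }
  return C;
}
```

Calling addArrays(A, B, C) with three Int32Array objects of equal length fills C with the element-wise sums, whatever the length.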

SIMD.js availability and performance

SIMD.js is currently at stage 3 of the TC39 approval process. As a result of a close collaboration between Intel engineers and Microsoft, SIMD.js is available in the Microsoft Edge browser on Windows® 10 as an experimental feature for developers to try. It is also included in ChakraCore, the open source version of the browser's engine.

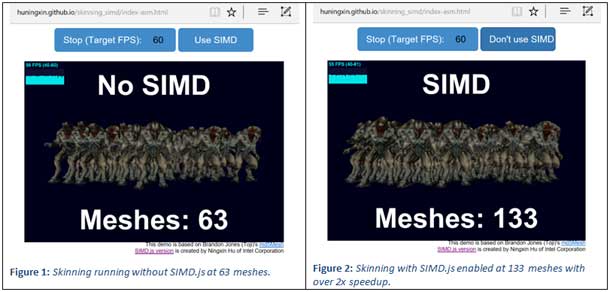

Figures 1 and 2 show the impact of SIMD.js in the latest Microsoft Edge browser. The figures show that the number of meshes (or 3D images) that can be supported at 60 frames per second more than doubles from 63 to 133 when using SIMD.js. This demo, called Skinning SIMD.js, was handwritten in asm.js and SIMD.js. (Asm.js code is usually cross-compiled and rarely written by hand, but in this case the module was simple enough to be handwritten.)

Enabling SIMD.js

You can use SIMD.js in ChakraCore (which we have used for preparing this paper) or in the Microsoft Edge browser.

Enabling SIMD.js in Microsoft ChakraCore

ChakraCore is the core part of Chakra, the high-performance JavaScript engine that powers the Microsoft Edge browser and Windows applications written in HTML/CSS/JavaScript. ChakraCore also supports the JavaScript Runtime (JSRT) APIs, which allow you to easily embed ChakraCore in independent applications. ChakraCore is currently verified to be working on Windows platforms. For details on how to get, build, and use ChakraCore, see https://github.com/Microsoft/ChakraCore.

ChakraCore can run directly from the command line or as part of Node.js. ChakraCore has the latest SIMD.js features and optimizations that are yet to be merged into Microsoft Edge. To run SIMD.js code from the command line, include the following flags: “-simdjs -simd128typespec”.

Enabling SIMD.js in Microsoft Edge

Microsoft Edge ships as part of Windows 10. SIMD.js is available in the Microsoft Windows 10 Anniversary update. For the latest features, install the latest version of the Windows 10 release. To enable SIMD.js in the Microsoft Edge browser:

- Navigate to “about:flags”.

- Under “JavaScript”, check “Enable experimental JavaScript features”.

- Restart the browser.

Writing performant SIMD.js code for ChakraCore

SIMD.js offers an extensive set of data types (see Table 3) and operations that are all geared towards boosting performance. There is a lot of flexibility in how the SIMD.js operations could be used. For that reason, JavaScript guarantees that every valid use will work, but does not guarantee the performance. The speed will depend on the implementation of both the JavaScript engine and your own code. In some cases, there could be hidden performance cliffs when using SIMD.js.

This section explains how SIMD.js is implemented in ChakraCore and offers some guidelines for writing SIMD.js code that will work with the Full JIT (just-in-time compiler) for optimal performance.

Supported data types in SIMD.js:

* Float32x4 (32-bit float x 4 data items)

* Int32x4 (32-bit integer x 4 data items)

* Int16x8 (16-bit integer x 8 data items)

* Int8x16 (8-bit integer x 16 data items)

* Uint32x4 (32-bit unsigned x 4 data items)

* Uint16x8 (16-bit unsigned x 8 data items)

* Uint8x16 (8-bit unsigned x 16 data items)

* Bool32x4 (32-bit bool x 4 data items)

* Bool16x8 (16-bit bool x 8 data items)

* Bool8x16 (8-bit bool x 16 data items)

Table 3: SIMD.js supports a range of data types.

Understanding the three versions of SIMD.js in ChakraCore

There are three versions of SIMD.js implemented in ChakraCore:

- Runtime library. To improve start-up times, ChakraCore starts to interpret the code without compilation using the runtime library. The runtime library is also used by the ChakraCore interpreter and as a fallback when the Full JIT fails to optimize SIMD.js operations, whether that is due to ineffective or ambiguous code, or incompatible hardware. The runtime library is unoptimized, so it guarantees the code will execute but does not have any of the performance gain associated with using SIMD operations. Any performant code should spend as little time as possible in the library. The SIMD.js runtime library may offer a lower performance than code that does not use SIMD.js, so some developers may choose to offer a sequential version of their code for times when SIMD cannot be used.

- Full JIT. The Full JIT is a type-specializing compiler that attempts to bridge the gap between the high-level data types used in JavaScript and the low-level data types used by the architecture. It will, for example, attempt to make numbers 32-bit integers (rather than JavaScript's default 64-bit floats) where possible to improve vectorization. Where this causes correctness issues, the implementation falls back to the runtime library. Writing efficient SIMD.js code that targets this compiler is the focus of this paper.

- Asm.js. Asm.js is a strict subset of JavaScript that utilizes JavaScript syntax to distinguish between integer and floating point types and provides other rules that ensure highly effective ahead-of-time compilation, enabling near-native performance. Asm.js code is usually created by compiling from C/C++ using the Emscripten LLVM* compiler. SIMD.js is part of the Asm.js specification and is used either when translating SIMD intrinsics or if the translating compiler does auto-vectorization (something that Emscripten currently supports). Asm.js is not designed to be written by hand, and developers should write their apps in C/C++ and compile to JavaScript to use this implementation. The details of this are outside the scope of this paper. For more information see http://asmjs.org/spec/latest/ and http://kripken.github.io/mloc_emscripten_talk/.

Tip #1: Use SIMD for hot code

Chakra is a multi-tiered execution engine. To achieve a good start-up time, the code runs first through the unoptimized Runtime library. If the code runs a few times, the interpreter will recognize it is repeating code and run it through the Full JIT. Only the Full JIT will carry out SIMD.js optimizations that yield a performance boost. For that reason, it is advisable to only use SIMD.js for code in your applications that is repeated often or consumes a lot of processor time (hot code).

Avoid using SIMD.js in start-up code or loops/functions that don’t run for a long time; otherwise the Full JIT might not kick in, and you may see a degradation of performance compared to sequential code when your SIMD.js code runs in the Runtime library. Some performance testing and code tweaking might be required to achieve performance improvements.

One caveat for this tip: initializations of SIMD constants should be moved out of hot code into cold sections, so that the constants are not constructed over and over again.
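As a sketch of that caveat, the hypothetical scaleBy function below builds its splat constant once, before the hot loop, instead of rebuilding it on every iteration. The function name, the scalar fallback, and the typeof SIMD feature test are assumptions added for illustration, so that the code also runs where SIMD.js is not exposed.

```javascript
/* Hypothetical helper: multiplies every element of a Float32Array by factor, in place. */
function scaleBy(data, factor) {
  var i = 0;

  /* Assumption: SIMD.js is exposed as a global named SIMD when it is available. */
  if (typeof SIMD !== 'undefined') {
    /* Cold section: the constant vector is constructed once, outside the loop */
    var factor4 = SIMD.Float32x4.splat(factor);
    var vectorEnd = data.length - (data.length % 4);

    /* Hot loop: only load, multiply, and store */
    for (; i < vectorEnd; i += 4) {
      var v = SIMD.Float32x4.load(data, i);
      SIMD.Float32x4.store(data, i, SIMD.Float32x4.mul(v, factor4));
    }
  }

  /* Scalar loop: handles the tail, or the whole array when SIMD.js is absent */
  for (; i < data.length; i++) {
    data[i] *= factor;
  }
  return data;
}
```

The same idea applies to constants shared across calls: they can be created once at module level rather than inside the function that runs repeatedly.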

Tip #2: Explicitly convert strings to numbers

The SIMD constructors and splat operations are type-generic, so they’ll accept both strings and numbers, as shown in Table 4. However, if strings are found they will not be optimized as arguments for the SIMD APIs. As a result, execution will fall back to the Runtime library, causing a loss of performance.

To guarantee performance, always use arguments of Number or Bool types. If your code depends on non-Number types, introduce the conversion explicitly in your code, as shown in Table 5.

The majority of SIMD operations throw a TypeError on unexpected types, except for a few that expect any JavaScript type and coerce it to a Number or Bool.

    /* Set up variables */
    var s = myString; // string
    var n = myVar;    // could be null

    /* Set up f4 as vector of s,s,n,n */
    var f4 = SIMD.Float32x4(s, s, n, n);

    /* After splat, i4 = vector of s,s,s,s */
    var i4 = SIMD.Int32x4.splat(s);

Table 4: SIMD constructor with arbitrary types.

    var s = Number(myString); // explicitly converted to number
    var n = Number(myVar);    // explicitly converted to number

    var f4 = SIMD.Float32x4(s, s, n, n);
    var i4 = SIMD.Int32x4.splat(s);

Table 5: SIMD constructors with explicit coercions.
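Explicit conversion with Number() has edge cases worth keeping in mind: Number(null) is 0, while Number(undefined) and Number("abc") are NaN. The helper below is a small sketch, not part of SIMD.js, that converts a value once and substitutes a caller-supplied default when the result is NaN. The name toLaneValue and its fallback parameter are hypothetical.

```javascript
/* Hypothetical helper: convert any value to a Number before it reaches a SIMD API. */
function toLaneValue(value, fallback) {
  var n = Number(value);
  /* Number.isNaN is true only for the NaN value itself */
  return Number.isNaN(n) ? fallback : n;
}
```

The converted values can then be passed to a constructor or splat operation, for example SIMD.Int32x4.splat(toLaneValue(myString, 0)), so that the SIMD call only ever sees Number arguments.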

Tip #3: Optimize vector lane access

There are fast instructions that can be used to extract one of the data items (or lanes) from a vector. However, the Full JIT implementation is not able to handle variable index values in these commands, so execution will fall back to the Runtime library in that case. To avoid that situation, use integer literals for lane indices to remove the uncertainty so the compiler can optimize it. Tables 6 and 7 show how code should be rewritten to ensure Full JIT execution.

This guideline also applies to shuffle and swizzle commands, which can be used to rearrange the order of the lanes.

    /* function accepts an Int32x4 vector in v */
    function SumAllLanes(v)
    {
        var sum = 0;
        for (var i = 0; i < 4; i++)
        {
            /* Extract lane i from vector v */
            sum += SIMD.Int32x4.extractLane(v, i);
        }
        return sum;
    }

Table 6: Extract lane with variable lane index.

    function SumAllLanes(v)
    {
        var sum = 0;

        /* Use literals to extract each lane for Full JIT optimization */
        sum += SIMD.Int32x4.extractLane(v, 0);
        sum += SIMD.Int32x4.extractLane(v, 1);
        sum += SIMD.Int32x4.extractLane(v, 2);
        sum += SIMD.Int32x4.extractLane(v, 3);

        return sum;
    }

Table 7: Extract lane with literals.
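The same guideline can be sketched for swizzle. The hypothetical function below reverses each group of four elements in an Int32Array and passes the lane indices to swizzle as integer literals rather than variables. The function name, the scalar fallback, and the typeof SIMD feature test are assumptions added so that the sketch also runs where SIMD.js is not exposed.

```javascript
/* Hypothetical helper: reverses every complete group of four elements, in place. */
function reverseGroupsOfFour(data) {
  var vectorEnd = data.length - (data.length % 4);
  var i;

  /* Assumption: SIMD.js is exposed as a global named SIMD when it is available. */
  if (typeof SIMD !== 'undefined') {
    for (i = 0; i < vectorEnd; i += 4) {
      var v = SIMD.Int32x4.load(data, i);
      /* Lane indices 3, 2, 1, 0 are integer literals, not variables */
      SIMD.Int32x4.store(data, i, SIMD.Int32x4.swizzle(v, 3, 2, 1, 0));
    }
  } else {
    /* Scalar fallback with the same result */
    for (i = 0; i < vectorEnd; i += 4) {
      var first = data[i];
      var second = data[i + 1];
      data[i] = data[i + 3];
      data[i + 1] = data[i + 2];
      data[i + 2] = second;
      data[i + 3] = first;
    }
  }
  return data;
}
```

Elements beyond the last complete group of four are left unchanged.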

Tip #4: Consistently define variables

If the argument types for SIMD operations do not match or are ambiguous, the Full JIT will decide that a TypeError exception is likely to occur and will not type-specialize, passing execution back to the Runtime library.

Table 8 shows an example of this in practice. In this case, the programmer knows that condition1 == condition2 (if one is true, so is the other, always). The compiler can’t know or infer that, though. In the second if condition, the compiler will be conservative and assume that x could be a Number or Float32x4, because the previous condition creates that possibility. Passing a Number argument to a Float32x4.add operation will cause a TypeError, so the Full JIT will not optimize.

Additionally, y is not defined on every execution path, so in the second if condition, y could be Undefined or Float32x4. Again, that would cause the Full JIT to give up on optimizing.

To avoid these cases, follow these guidelines:

1. Always define your variables on all execution paths. Never leave SIMD variables undefined. In Table 8, for example, where x is defined as 0, y should also be defined.

2. Avoid assigning values of different types to a variable. In Table 8, defining x as a Float32x4 and as a Number creates uncertainty that prevents optimization.

3. If (2) is not possible, try to only mix valid SIMD types. For example, using Int32x4 and Float32x4 types would be okay, but don’t mix strings and SIMD types.

4. If (2) and (3) are not possible, guard any SIMD code using ambiguous variables with a check operation. Table 9 shows an example of how to do that. The check operation will enable the compiler to confirm that a variable is of the expected type, so it can continue optimizing. If a variable is not of the right type, a TypeError is thrown and execution reverts to the Runtime library. Including the check ensures that the Full JIT can attempt optimization. Without it, the uncertainty would result in execution falling immediately back to the Runtime library. There may be a small overhead associated with using a check, but there is a drastic slowdown if code that could be optimized executes in the Runtime library instead.

    function Compute()
    {
        var x, y;

        if (condition1)
        {
            x = SIMD.Float32x4(1,2,3,4);
            y = SIMD.Float32x4(-1,-2,-3,-4);
        }
        else
        {
            x = 0;
        }

        /* developer knows that condition1 == condition2 always */
        if (condition2)
        {
            /* add vector x to itself. So (1,2,3,4) -> (2,4,6,8) */
            x = SIMD.Float32x4.add(x, x);
            y = SIMD.Float32x4.add(y, y);
        }
    }

Table 8: Example of polymorphic variables.

    function Compute()
    {
        var x, y;

        if (condition1)
        {
            x = SIMD.Float32x4(1,2,3,4);
            y = SIMD.Float32x4(-1,-2,-3,-4);
        }
        else
        {
            x = 0;
        }

        /* developer knows that condition1 == condition2 always */
        if (condition2)
        {
            /* check x is Float32x4 */
            x = SIMD.Float32x4.check(x);

            /* add vector x to itself. So (1,2,3,4) -> (2,4,6,8) */
            x = SIMD.Float32x4.add(x, x);

            y = SIMD.Float32x4.check(y);
            y = SIMD.Float32x4.add(y, y);
        }
    }

Table 9: Using check operations to ensure SIMD code optimization.
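Table 9 illustrates the last guideline. The first two guidelines can be sketched by rewriting the function so that x and y hold a Float32x4 on every execution path, which removes the ambiguity instead of guarding against it. This is an illustrative variant, not the original authors' code: the name computeConsistent, the condition parameters, the returned array, and the typeof SIMD feature test (which returns null where SIMD.js is not exposed) are all assumptions.

```javascript
/* Hypothetical variant of Compute() in which x and y are always Float32x4. */
function computeConsistent(condition1, condition2) {
  /* Assumption: SIMD.js is exposed as a global named SIMD when it is available. */
  if (typeof SIMD === 'undefined') {
    return null;
  }

  /* Both variables are defined, with the same SIMD type, on every path */
  var x = SIMD.Float32x4.splat(0);
  var y = SIMD.Float32x4.splat(0);

  if (condition1) {
    x = SIMD.Float32x4(1, 2, 3, 4);
    y = SIMD.Float32x4(-1, -2, -3, -4);
  }

  if (condition2) {
    /* Valid whichever branch ran above: x and y are always Float32x4 */
    x = SIMD.Float32x4.add(x, x);
    y = SIMD.Float32x4.add(y, y);
  }

  /* Return the lanes of x as plain numbers, using literal lane indices */
  return [
    SIMD.Float32x4.extractLane(x, 0),
    SIMD.Float32x4.extractLane(x, 1),
    SIMD.Float32x4.extractLane(x, 2),
    SIMD.Float32x4.extractLane(x, 3)
  ];
}
```

Because no variable ever changes type, this version needs no check operations before the add calls.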

Conclusion

We hope that this paper helps you to produce better and faster code and has shown you how SIMD operations can improve the performance of data-intensive work in JavaScript. Although the performance gain may vary depending on implementation, the coding patterns and SIMD use cases presented here will also apply to other browsers.

References

SIMD.js specification page: http://tc39.github.io/ecmascript_simd/

ChakraCore GitHub* Repo: https://github.com/Microsoft/ChakraCore

Node.js on ChakraCore: https://github.com/nodejs/node-chakracore

Skinning SIMD.js demo: http://huningxin.github.io/skinning_simd/