Bring Standardized APIs across Multiple Math Domains Using Intel® oneAPI Math Kernel Library

Mehdi Goli, principal software engineer, Codeplay Software*, and Maria Kraynyuk, software engineer, Intel Corporation

@IntelDevTools

Get the Latest on All Things CODE

Sign Up

The Basic Linear Algebra Subprograms (BLAS) provide key functionality across CPUs, GPUs, and other accelerators for high-performance computing and AI. Historically, developers needed to write code for each hardware platform, and there was no easy way to port source code from one accelerator to another. For the first time, the Intel® oneAPI Math Kernel Library (oneMKL) open-source interface project bridges the gap to support x86 CPU, Intel® GPU, and NVIDIA* GPU linear algebra functionality by creating a single open-source interface that can use highly optimized third-party libraries for different accelerators underneath. This project also opens BLAS functions to other industry accelerators. Learn how the BLAS interface implementation is just the first step to supporting math functionality of other oneMKL domains beyond BLAS.

oneMKL Open-Source Interface

Libraries play a crucial role in software development:

- First, libraries provide functionality that can be reused across applications to reduce both development efforts and the possibility of errors.

- Second, some libraries are highly optimized for target hardware platforms, allowing developers to achieve the best application performance with less effort.

As the industry has developed, the selection of hardware has become more diverse with the rise of accelerators including GPUs, FPGAs, and specialized hardware. Most vendor libraries only function on specific hardware platforms. These libraries are sometimes implemented in proprietary languages that aren’t portable to other architectures. For large applications, this means developers are locked into a specific hardware architecture—and can't benefit from different hardware solutions without a major new development effort.

The oneMKL open-source interface project aims to solve this cross-architecture issue by taking advantage of the Data Parallel C++ (DPC++) language. It addresses:

- Standardization by enabling implementation of the vendor-agnostic DPC++ API defined in the oneMKL specification

- Portability by using existing, well-known, hardware-specific libraries underneath (Figure 1)

Figure 1. High-level overview of oneMKL open-source interfaces

Use Model and Third-Party Library Selection

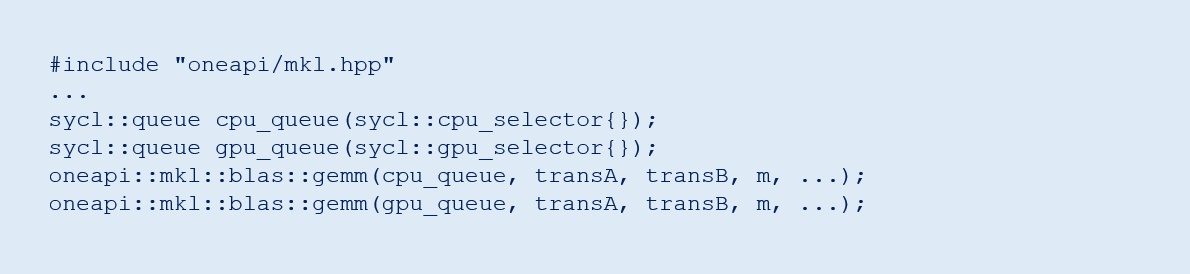

The oneMKL open-source interfaces project provides a DPC++ API common for all hardware devices. For example, it runs the general matrix multiplication (GEMM) function on Intel and NVIDIA GPUs identically, except for the step that creates the SYCL* queue. In the code snapshot in Figure 2, we create two different queues targeted for CPU and GPU devices and call GEMM for each queue to run the function on the targeted devices. Compilation is shown in Figure 3.

Figure 2. Code snapshot of application app.cpp

Figure 3. Compile and link line for application app.cpp

When the application runs, oneMKL:

- Selects the third-party library based on the device information provided by the SYCL queue

- Converts input parameters to the proper third-party library input data

- Calls functions from the third-party library to run on the targeted device

Figure 4 shows the execution flow, where oneMKL is divided into two main parts:

- Dispatcher library: Identifies the targeted device and loads the appropriate backend library for that device.

- Back end library: Wrappers that convert DPC++ runtime objects from the application to third-party library-specific objects, then calls the third-party function. Each supported third-party library has this wrapper.

Figure 4. The oneMKL flow

For the given example, if the targeted device is an Intel® processor, oneMKL uses the Intel oneMKL library underneath. If the targeted device is an NVIDIA GPU, oneMKL uses cuBLAS for NVIDIA. This approach allows a oneMKL project to achieve the level of performance third-party libraries provide with minimal overhead for object conversion.

Enable a Third-Party cuBLAS Library

Integrating CUDA on the oneMKL SYCL back end relies on the existing CUDA for SYCL support integrated with the DPC++ CUDA back end. It uses the SYCL 1.2.1 interface with some additional extensions to expose the CUDA interoperability objects. The SYCL interoperability with existing native objects is the interop_task interface inside the command group scope. Codeplay contributed the interop_task extension to the SYCL programming model for interfacing with the native CUDA libraries (in this case, cuBLAS). A SYCL application using interoperability with CUDA functions uses the same SYCL programming model. It’s composed of three scopes to control the construction and lifetime of different objects used in the applications:

- The kernel scope represents a single kernel function interfacing with native objects executing on a device.

- The command group scope determines a unit of work comprised of a kernel function and accessors.

- The application scope includes all other code outside of a command group scope.

Once invoked, interop_task is injected into the SYCL runtime acyclic dependency graph (DAG) to preserve data dependencies among kernels on the selected device.

The cuBLAS library contains BLAS routines integrated with an explicit scheduling control object, called a handle. The handle enables you to assign different routines to different devices or streams with different or the same contexts.

Figure 5. Architectural view of cuBLAS integration in oneMKL

Figure 5 shows the architectural view of mapping the cuBLAS backend to oneMKL. It has three parts:

- Constructing the cuBLAS handle: Creating a cuBLAS handle is costly. Create one handle per thread per CUDA context to avoid performance overhead and to maintain correctness. Since there's one-to-one mapping between the SYCL context and the underlying CUDA context, a user-created SYCL contex automatically creates one cuBLAS handle per thread to minimize the performance overhead and to guarantee correctness. Because you can create multiple SYCL queues for a single SYCL context, you can submit different BLAS routines to different streams with the same context using the same cuBLAS handle.

- Extracting CUDA runtime API objects from the SYCL runtime API: The SYCL queue embeds the SYCL context used to create the queue. Using the SYCL interoperability features, the underlying native CUDA context and stream are extracted from the user-created SYCL queue to create and retrieve the cuBLAS handle required to invoke cuBLAS routines. Also, the allocated CUDA memories for a BLAS routine are obtained from accessors, created from SYCL buffers, or the Unified Shared Memory (USM) model for SYCL. Interoperability with CUDA runtime API objects is only accessible from host_task/interop_task inside the command group scope.

- Interfacing with cuBLAS routines inside SYCL interoperability functions: For a BLAS routine you request from the oneMKL BLAS interface, the retrieved cuBLAS handle and allocated memories invoke an equivalent cuBLAS routine from the host_task/interop_task. While the cuBLAS routine runs, the CUDA context that creates a cuBLAS handle must be the active CUDA runtime context. Therefore, at the beginning of each routine invocation, the CUDA context associated with the selected cuBLAS handle activates.

Conclusion

The oneMKL open-source interface project is available on GitHub* and open for contributions from anyone. Currently, it includes all levels of BLAS and BLAS-like extensions. Intel developers built the initial project for x86 CPUs and Intel® GPUs using oneMKL. From the open-source community, Codeplay contributed support for GPUs from NVIDIA via cuBLAS from NVIDIA.

While API standardization exists for math domains like linear algebra, and de facto standards like the Fastest Fourier Transform in the West (FFTW) exist for discrete Fourier transforms, standard APIs are harder to come by in other domains like sparse solvers and vector statistics. The goal of the oneMKL project is to bring standardized APIs to multiple math domains. For example, the Intel team plans to introduce an API for Random Number Generators (RNG). The oneMKL project is a place for community-driven standardization of math APIs.

_______

You May Also Like

Intel oneAPI Math Kernel Library

Accelerate math processing routines, including matrix algebra, fast Fourier transforms (FFT), and vector math. Part of the Intel® oneAPI Base Toolkit.