Introduction

Zero-packet-loss rate is an important indicator of network-forwarding performance and a focus of the Data Plane Development Kit (DPDK) development team. This article introduces the test bench settings and test methods used for zero-packet-loss testing of DPDK virtualization functions. Although a vhost/virtio zero-packet-loss test uses the same topology as the north-south physical-VM-physical (PVP) throughput test, it requires special platform configuration: the real-time configuration of the operating system and the vring size of the virtio device both have a great impact on real-time packet processing.

To achieve better zero-packet-loss performance, we need to optimize the vring size and the real-time configuration of both the host and the virtual machine (VM).
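This article does not describe how the zero-packet-loss rate itself is located. A common approach, typical of RFC 2544-style throughput testing, is a binary search over the offered rate: raise the rate after a loss-free trial and lower it after any loss. The Python sketch below assumes that loss grows monotonically with rate and that lost_packets_at is a hypothetical hook wrapping your traffic generator; it is not the DPDK test team's actual tooling, and the simulated device simply reuses the 64-byte result reported later in this article.

```python
from typing import Callable


def find_zero_loss_rate(
    lost_packets_at: Callable[[float], int],
    max_rate_mpps: float,
    resolution_mpps: float = 0.01,
) -> float:
    """Return the highest offered rate (Mpps) observed to lose zero packets.

    lost_packets_at(rate) is a hypothetical hook: it should run one trial with
    the traffic generator sending at `rate` Mpps and return the packets lost.
    """
    # Shortcut: if the maximum rate is already loss-free, stop there.
    if lost_packets_at(max_rate_mpps) == 0:
        return max_rate_mpps
    lo, hi = 0.0, max_rate_mpps  # lo: assumed loss-free, hi: known to lose packets
    while hi - lo > resolution_mpps:
        mid = (lo + hi) / 2
        if lost_packets_at(mid) == 0:
            lo = mid  # zero loss: search higher rates
        else:
            hi = mid  # loss seen: search lower rates
    return lo


# Usage with a simulated device that starts dropping packets above 6.93 Mpps.
def simulated_dut(rate_mpps: float) -> int:
    return 0 if rate_mpps <= 6.93 else 1000


rate = find_zero_loss_rate(simulated_dut, max_rate_mpps=59.52)
print(f"zero-loss rate ~ {rate:.3f} Mpps")
```

On real hardware, loss near the threshold can vary between trials, so it is worth repeating the final trial at the reported rate before trusting it.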

Vring Size

A larger vring offers more buffer space for packets, which should reduce the packet-loss rate. In earlier versions of QEMU*, the rx_queue_size and tx_queue_size of the virtio device provided by QEMU were fixed at 256 descriptors, which is not enough buffer space. In QEMU 2.10, these parameters are configurable up to 1024 descriptors. Configure them as follows:

```
qemu_2.10/bin/qemu-system-x86_64 \
    ...... \
    -device virtio-net-pci,……,rx_queue_size=1024,tx_queue_size=1024 \
    ......
```

In our performance test, increasing the queue size from 256 to 1024 gave a performance gain of around 74%.
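A rough way to see why a larger ring helps: with one descriptor per packet, a ring of N free descriptors can absorb about N divided by the packet rate before the consumer must catch up, so 1024 descriptors give four times the headroom of 256. The Python sketch below illustrates that arithmetic and also checks queue sizes against the bounds QEMU's virtio-net device enforces as we understand them (a power of two between 256 and 1024). The helper names are hypothetical, the one-descriptor-per-packet model is an assumption, and 6.93 Mpps is simply the 64-byte result from the results table in this article.

```python
# QEMU's virtio-net checks (as we understand them): each queue size must be a
# power of two between 256 and 1024 descriptors.
MIN_QUEUE_SIZE = 256
MAX_QUEUE_SIZE = 1024


def valid_queue_size(n: int) -> bool:
    """True if n is a power of two within [MIN_QUEUE_SIZE, MAX_QUEUE_SIZE]."""
    return MIN_QUEUE_SIZE <= n <= MAX_QUEUE_SIZE and (n & (n - 1)) == 0


def queue_size_opts(rx: int, tx: int) -> str:
    """Build the queue-size suffix for a -device virtio-net-pci,... option."""
    for name, n in (("rx_queue_size", rx), ("tx_queue_size", tx)):
        if not valid_queue_size(n):
            raise ValueError(f"{name}={n}: must be a power of two in [256, 1024]")
    return f"rx_queue_size={rx},tx_queue_size={tx}"


def ring_headroom_us(descriptors: int, rate_mpps: float) -> float:
    """Microseconds of arrivals a ring of free descriptors can absorb while the
    consumer is stalled, assuming one descriptor per packet.
    At rate_mpps million packets/s, rate_mpps packets arrive per microsecond."""
    return descriptors / rate_mpps


print(queue_size_opts(1024, 1024))  # rx_queue_size=1024,tx_queue_size=1024
for size in (256, 1024):
    print(size, round(ring_headroom_us(size, 6.93), 1))  # 256 36.9, then 1024 147.8
```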

Real-time Configuration of the Host and VM

Zero-packet-loss operation requires high stability and real-time performance. Most current servers are multi-core systems, and Linux* interrupts and multitask scheduling greatly affect the real-time performance of the system, increasing the chance of packet loss. The following settings can be optimized:

- Core isolation: In the Linux GRUB configuration file, isolate the cores used for testing (for example, isolcpus=18-22) so that the scheduler does not place other tasks on them and the test command can occupy them.

- Adaptive ticks: By default, Linux delivers periodic scheduling-clock interrupts to every core, and handling them adds latency. Setting nohz_full=18-22 in GRUB marks cores 18-22 as adaptive-ticks CPUs, which moves the system's timekeeping work to the cores that are not isolated.

- Task-scheduling priority: When starting the test command, prefix it with chrt -f 95 to run it under the SCHED_FIFO real-time scheduling policy with priority 95, so that the system runs the test command first. In addition, the following configuration disables real-time throttling, so that the test command is not descheduled after exceeding the default real-time runtime limit:

  ```
  echo -1 > /proc/sys/kernel/sched_rt_period_us
  echo -1 > /proc/sys/kernel/sched_rt_runtime_us
  ```

- Interrupt affinity: Change /proc/irq/irq_number/smp_affinity_list to ensure that interrupts are not assigned to the isolated cores.

- Disable the watchdog:

  ```
  echo 0 > /proc/sys/kernel/watchdog_thresh
  ```
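The host settings above all derive from one choice: which cores to isolate. The Python sketch below is a dry run that turns an isolated-core list into the corresponding kernel parameters and commands, including the housekeeping core list that each IRQ's smp_affinity_list should point to. It applies nothing; writing these values needs root, and some IRQs (for example per-CPU ones) may refuse an affinity change. The IRQ numbers and the test command are hypothetical placeholders (in practice you would enumerate the numeric entries under /proc/irq), and 112 logical CPUs matches the test platform listed below.

```python
def parse_cpulist(spec: str) -> list[int]:
    """Parse a Linux cpulist string such as "0-17,23" into sorted CPU ids."""
    cpus: set[int] = set()
    for part in spec.split(","):
        if "-" in part:
            first, last = part.split("-")
            cpus.update(range(int(first), int(last) + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


def format_cpulist(cpus) -> str:
    """Format CPU ids in compact cpulist form, e.g. [0, 1, 2, 5] -> "0-2,5"."""
    ordered = sorted(set(cpus))
    if not ordered:
        raise ValueError("empty CPU set")
    runs = [[ordered[0], ordered[0]]]  # [first, last] of each consecutive run
    for cpu in ordered[1:]:
        if cpu == runs[-1][1] + 1:
            runs[-1][1] = cpu  # extend the current run
        else:
            runs.append([cpu, cpu])  # start a new run
    return ",".join(str(a) if a == b else f"{a}-{b}" for a, b in runs)


def realtime_plan(isolated: str, total_cpus: int,
                  irqs: list[int], test_cmd: str) -> list[str]:
    """Dry run: return the settings from this section as text; nothing is applied."""
    iso_cpus = set(parse_cpulist(isolated))
    iso = format_cpulist(iso_cpus)
    housekeeping = format_cpulist(c for c in range(total_cpus) if c not in iso_cpus)
    plan = [f"isolcpus={iso} nohz_full={iso}"]  # append to the GRUB kernel command line
    # Steer each IRQ to the non-isolated (housekeeping) CPUs only.
    plan += [f"echo {housekeeping} > /proc/irq/{irq}/smp_affinity_list" for irq in irqs]
    plan += [
        "echo -1 > /proc/sys/kernel/sched_rt_period_us",
        "echo -1 > /proc/sys/kernel/sched_rt_runtime_us",
        "echo 0 > /proc/sys/kernel/watchdog_thresh",
        f"chrt -f 95 {test_cmd}",  # SCHED_FIFO policy, priority 95
    ]
    return plan


# Hypothetical IRQ numbers and test command, for illustration only.
for line in realtime_plan("18-22", total_cpus=112, irqs=[24, 25], test_cmd="./test_app"):
    print(line)
```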

In our tests, these real-time settings improved zero-packet-loss performance by four to five times.

For DPDK 18.05, the DPDK test team released a performance report for vhost/virtio on the x86 Intel® Xeon® platform.

Test-platform information:

| Item | Description |
|---|---|
| Server Platform | Intel® Server Board S2600GZ Family |
| CPU | Intel® Xeon® Platinum 8180 processor (38.5 MB L3 cache, 2.50 GHz), 56 cores, 112 threads |
| Memory | 96 GB over 8 channels, DDR4 @2666 MHz |
| PCIe | 1 x PCIe Generation 3 x 8 |
| NICs | Intel® Ethernet Converged Network Adapter (Intel® Ethernet CNA) XL710-QDA2 (2 x 40 Gbps) |
| BIOS | SE5C620.86B.01.00.0013 |
| Host Operating System | Ubuntu* 18.04 LTS |
| Host Linux kernel version | 4.15.0-20-generic |
| Host GCC version | GCC (Ubuntu* 7.3.0-16ubuntu3) 7.3.0 |
| Host DPDK version | 18.05 |
| Guest Operating System | Ubuntu 16.04 LTS |
| Guest GCC version | GCC (Ubuntu 5.4.0-6ubuntu1~16.04.4) 5.4.0 20160609 |
| Guest DPDK version | 18.05 |
| Guest Linux kernel version | 4.4.0-62-generic |

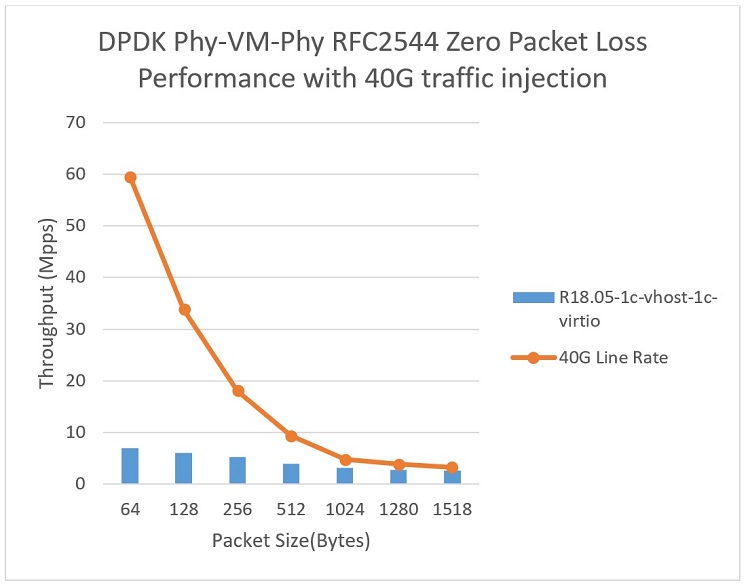

Zero-packet-loss test results with DPDK 18.05 are as follows:

| Packet Size (Bytes) | Throughput (Mpps) |
|---|---|
| 64 | 6.93 |
| 128 | 6.05 |
| 256 | 5.31 |
| 512 | 3.93 |
| 1024 | 3.14 |
| 1280 | 2.83 |
| 1518 | 2.64 |
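To put the Mpps figures in context, packet rates can be converted into bit rates on the wire. The Python sketch below assumes that the packet sizes in the table are Ethernet frame sizes including the CRC, with 20 extra bytes per frame on the wire (preamble, start-of-frame delimiter, and inter-frame gap), and compares each result against the line rate of a single 40 Gbps port. That comparison basis is our assumption for illustration, not how the report defines its results.

```python
# Extra bytes each Ethernet frame occupies on the wire beyond its frame size:
# 7-byte preamble + 1-byte start-of-frame delimiter + 12-byte inter-frame gap.
WIRE_OVERHEAD_BYTES = 20


def wire_gbps(frame_bytes: int, mpps: float) -> float:
    """Wire bit rate (Gbps) for `mpps` million frames/s of `frame_bytes` each."""
    return mpps * (frame_bytes + WIRE_OVERHEAD_BYTES) * 8 / 1000


def line_rate_mpps(frame_bytes: int, link_gbps: float) -> float:
    """Maximum frames/s (in millions) a `link_gbps` link carries at this frame size."""
    return link_gbps * 1000 / ((frame_bytes + WIRE_OVERHEAD_BYTES) * 8)


# Zero-packet-loss results from the table above (frame size in bytes -> Mpps).
RESULTS = {64: 6.93, 128: 6.05, 256: 5.31, 512: 3.93, 1024: 3.14, 1280: 2.83, 1518: 2.64}

for size, mpps in RESULTS.items():
    share = 100 * mpps / line_rate_mpps(size, 40.0)  # vs. one 40 Gbps port (assumption)
    print(f"{size:>5} B: {mpps:5.2f} Mpps = {wire_gbps(size, mpps):6.2f} Gbps ({share:4.1f}% of 40G)")
```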

For more information on the latest performance configuration and test command set for vhost/virtio, refer to the document below:

DPDK Vhost/Virtio Performance Report Release 18.05

Summary

Zero-packet-loss performance is one of the key indicators for network products. This article introduced test bench settings and test methods used for zero-packet-loss testing in DPDK virtualization functions, and highlighted scenarios for optimization.

We also showed vhost/virtio PVP zero-packet-loss test results for the DPDK 18.05 release.

About the Author

Yao Lei is an Intel software engineer who is mainly responsible for work related to DPDK virtualization testing.
